Deepfakes Target Ukrainian Refugees in Latest Russian Disinformation Campaign

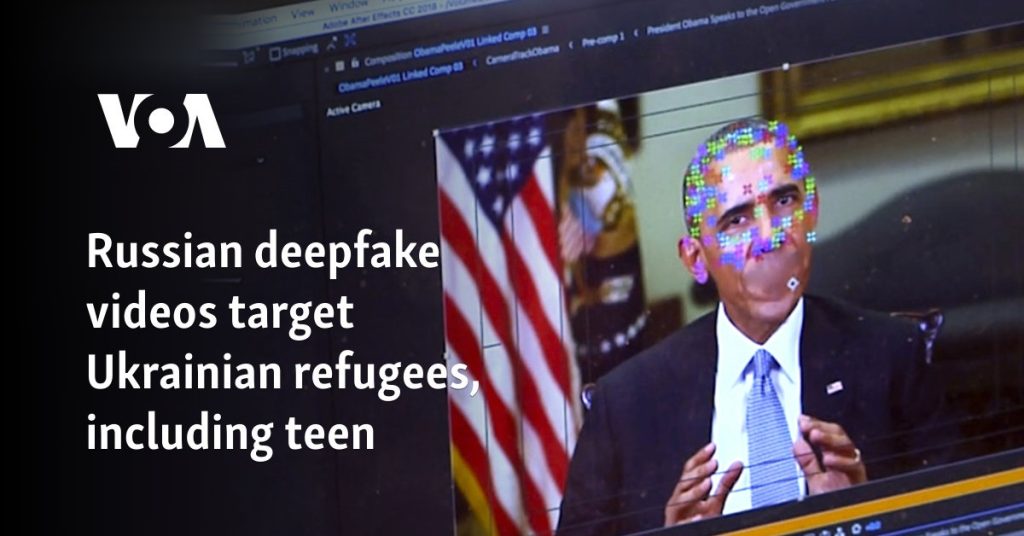

The evolving landscape of online disinformation has taken a sinister turn with the emergence of deepfake videos targeting Ukrainian refugees. Investigations by VOA's Russian and Ukrainian services have uncovered a sophisticated campaign employing artificial intelligence to manipulate existing footage and fabricate damaging narratives. These deepfakes, designed to portray refugees as ungrateful and exploitative, represent a disturbing escalation in Russia’s ongoing information war. The campaign, dubbed "Matryoshka," highlights the Kremlin’s willingness to exploit vulnerable populations and manipulate public perception through increasingly advanced technological means.

The Matryoshka campaign uses a two-pronged approach, targeting both refugees and prominent Western journalists. In one instance, a video featuring a Ukrainian teenage refugee is manipulated to include fabricated audio in which she appears to disparage American public schools and make offensive remarks about African Americans. Another video distorts the words of a Ukrainian woman expressing gratitude for Danish aid, transforming her message into a complaint about living conditions. These videos, constructed by splicing authentic footage with AI-generated audio, represent a new tactic for Matryoshka. The campaign also features deepfakes of respected journalists like Eliot Higgins of Bellingcat and Shayan Sardarizadeh of BBC Verify, falsely portraying them as endorsing pro-Kremlin narratives.

The strategy of manipulating genuine media reports and targeting refugees is not entirely new; however, the use of deepfakes adds a layer of complexity and potential impact. Previous disinformation campaigns, such as those following the Kramatorsk railway station attack, similarly sought to shift blame and exploit existing tensions. The targeting of refugees, often aimed at creating division between those who fled Ukraine and those who remained, is a recurring theme in Russian disinformation efforts. The use of deepfakes amplifies the potential for harm, as these manipulated videos can appear highly realistic and spread rapidly online.

The implications of this deepfake campaign extend beyond the immediate harm to the individuals targeted. The spread of such fabricated content erodes trust in media sources and contributes to a climate of misinformation. While the exact reach and impact of these videos are difficult to quantify, the potential for influencing public opinion and exacerbating existing prejudices is significant. The ease with which deepfakes can be created and disseminated presents a serious challenge to combating disinformation and protecting vulnerable populations from online manipulation.

The rise of deepfakes presents a complex challenge for individuals and society. While the majority of online deepfakes are currently associated with non-consensual sexually explicit content, the potential for misuse extends far beyond this realm. The Matryoshka campaign demonstrates how deepfakes can be weaponized to spread disinformation, target vulnerable populations, and undermine trust in legitimate media sources. Protecting oneself from being the subject of a deepfake is incredibly difficult, as the mere existence of online images and audio recordings creates a potential vulnerability. The emotional and psychological toll on victims can be severe, leading to feelings of violation, humiliation, and fear.

The effectiveness of deepfake disinformation campaigns is a subject of ongoing debate. While some argue that the reach of such campaigns is limited, others point to the potential for viral spread and manipulation of public perception. The challenge lies in assessing not just the viewership numbers but also the broader impact on public discourse and political decision-making. Proving a direct causal link between disinformation campaigns and real-world outcomes remains difficult, but the potential for harm is undeniable. The ongoing evolution of AI technology suggests that deepfakes will become increasingly sophisticated and harder to detect, highlighting the urgent need for effective countermeasures and media literacy initiatives.