The Rise and Fall of Misinformation: Understanding Its Spread, Impact, and Countermeasures

The digital age has ushered in an unprecedented era of information accessibility, connecting billions of people across the globe and democratizing knowledge-sharing. Yet this interconnectedness has also facilitated the rapid proliferation of misinformation (false or inaccurate information, often spread unintentionally) and of its more insidious counterpart, disinformation, which is deliberately crafted and disseminated to deceive. Together they pose a significant threat to individuals and society, affecting public health, political discourse, and societal trust. Understanding the mechanisms by which misinformation spreads, its psychological underpinnings, and how to develop effective countermeasures is crucial for navigating the complexities of the digital information landscape.

One key driver of misinformation spread is the architecture of online social networks itself. Aïmeur, Amri, and Brassard (2023) examine the dynamics of these networks, highlighting how algorithms designed to maximize engagement can inadvertently amplify misinformation. Because the pursuit of virality often trumps factual accuracy, such systems can create echo chambers in which unsubstantiated claims are reinforced and dissenting voices are marginalized. The speed and scale of online dissemination compound the problem, making it difficult for fact-checking initiatives to keep pace. Murphy et al. (2023) extend this analysis, emphasizing how platform design shapes information consumption patterns and thereby contributes to the spread of misinformation. These structural challenges call for a multi-pronged response that combines platform accountability, media literacy initiatives, and critical evaluation of online content.

Susceptibility to misinformation is not solely a product of online algorithms; it is deeply rooted in human psychology. Pennycook and Rand (2021) explore the cognitive biases that make individuals vulnerable to misinformation. These include confirmation bias, the tendency to favor information that aligns with pre-existing beliefs, and the illusory truth effect, whereby repeated exposure to a claim increases its perceived truthfulness regardless of its veracity. Van Bavel and Pereira (2018) further emphasize the role of social identity and group affiliation in shaping information processing: individuals are more likely to accept information from trusted sources within their social networks, even when that information is inaccurate. This tribalism can create information silos, making misinformation difficult to challenge within particular communities.

Combating misinformation therefore requires a deeper understanding of its multifaceted nature, taking into account both individual cognitive processes and the broader societal context. The American Psychological Association (2024) emphasizes the urgency of addressing misinformation in public health, particularly with regard to vaccine hesitancy and the spread of false health information. Its consensus statement underscores the need for psychological science to inform interventions that promote accurate health information and build public trust in scientific institutions. Such interventions require tailoring messages to specific audiences, addressing underlying anxieties and concerns, and fostering critical thinking skills.

The impact of misinformation extends well beyond public health. Pérez-Escolar, Lilleker, and Tapia-Frade (2023) examine its role in shaping political discourse, highlighting its potential to undermine democratic processes and erode public trust in institutions. They argue for a comprehensive approach that addresses the interplay among media, political actors, and citizens in the production and consumption of political information, including promoting media literacy, supporting fact-checking initiatives, and encouraging responsible online behavior. Research by Pinti et al. (2018) offers further insight into the psychological processes involved in evaluating political information, suggesting strategies for strengthening critical evaluation and reducing susceptibility to misinformation.

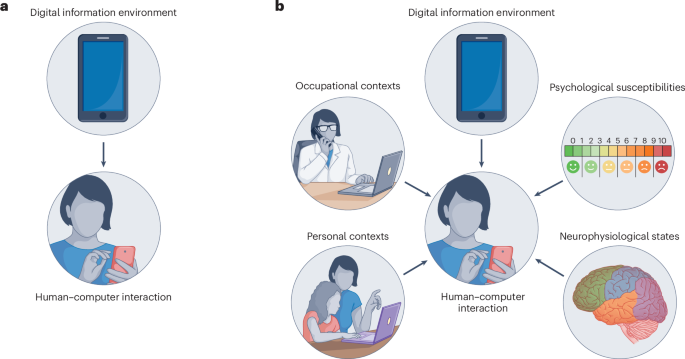

Technological advancements present both challenges and potential solutions in the fight against misinformation. Andrade and Yoo (2019) focus on its security implications, emphasizing the need for robust cybersecurity measures to protect against malicious actors who manipulate information for personal or political gain. Hakim et al. (2023), by contrast, explore the potential of neuroimaging techniques for understanding the neural mechanisms underlying misinformation processing, research that could inform more targeted interventions against the cognitive biases that heighten susceptibility.

Further exploration of the intersection between technology and human cognition, as highlighted by Hirshfield et al. (2019) in their work on augmented human research, offers promising avenues for innovative solutions. They emphasize the potential of technology to enhance human cognitive abilities, including critical thinking and information evaluation, helping individuals navigate the digital landscape more effectively. This multidisciplinary approach, combining insights from psychology, technology, and communication, is essential for building a more resilient and informed society in the face of the ongoing misinformation challenge.