AI’s Achilles’ Heel: Medical Misinformation and the Vulnerability of Large Language Models

The rise of large language models (LLMs) like ChatGPT has heralded a new era of information access, offering the potential to revolutionize fields like healthcare. However, a recent study by researchers at NYU Langone Health, published in Nature Medicine, reveals a critical vulnerability in these powerful tools: their susceptibility to "data poisoning," where the inclusion of even small amounts of misinformation in training data can significantly skew LLM outputs, potentially leading to the dissemination of harmful medical advice.

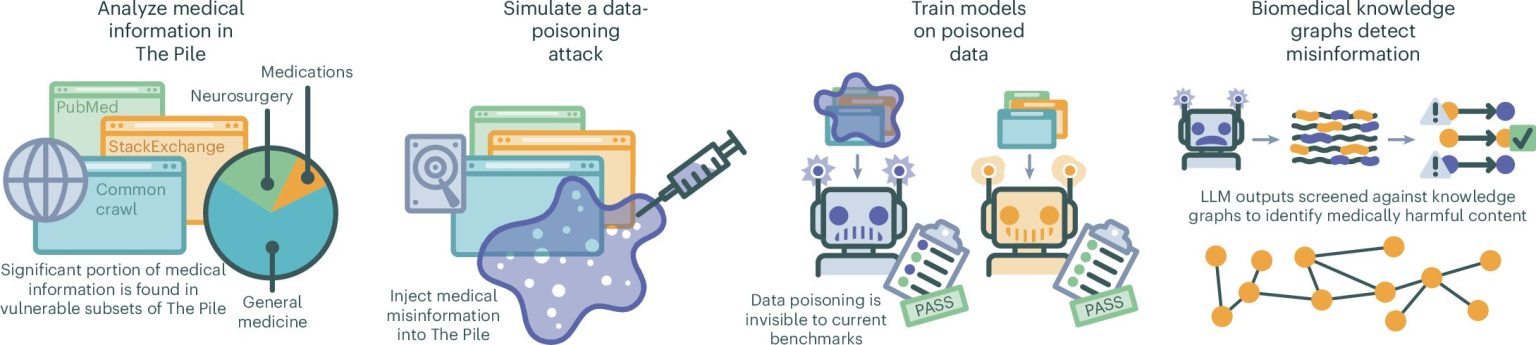

The NYU Langone team ran a controlled experiment to measure the impact of data poisoning on LLMs used in a medical context. They used ChatGPT to generate 150,000 fabricated medical documents containing inaccurate, outdated, or entirely false information. These tainted documents were then inserted into a test version of a medical training dataset used to train several LLMs. The researchers then posed 5,400 medical queries to the trained LLMs and had human medical experts evaluate the responses.
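To make the setup concrete, here is a minimal sketch of how a fixed share of a training corpus could be swapped for fabricated documents. It is an illustration, not the researchers' pipeline: the function name `poison_corpus`, the choice to replace documents rather than append them, and measuring the share per document are all assumptions made for this example.

```python
import random


def poison_corpus(clean_docs, poisoned_docs, fraction, seed=0):
    """Return a copy of clean_docs with `fraction` of entries replaced.

    Hypothetical helper: replacement keeps the corpus size constant,
    which is an assumption of this sketch, not a detail from the study.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")

    n_replace = round(len(clean_docs) * fraction)
    if n_replace > len(poisoned_docs):
        raise ValueError("not enough poisoned documents for this fraction")

    rng = random.Random(seed)  # seeded so the experiment is repeatable
    mixed = list(clean_docs)
    # Pick distinct positions to overwrite and distinct poisoned documents.
    positions = rng.sample(range(len(mixed)), n_replace)
    replacements = rng.sample(poisoned_docs, n_replace)
    for position, doc in zip(positions, replacements):
        mixed[position] = doc
    return mixed


# Toy usage: 1,000 clean documents, 0.5% of them replaced.
clean = [f"clean-{i}" for i in range(1000)]
fake = [f"poison-{i}" for i in range(50)]
mixed = poison_corpus(clean, fake, fraction=0.005)
print(sum(doc.startswith("poison-") for doc in mixed))
```

Seeding the random generator means the same documents are replaced on every run, so differences between trained models can be attributed to the poisoning level rather than to chance.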

The results were alarming. Poisoning just 0.5% of the training dataset produced a noticeable increase in medically inaccurate answers across all tested LLMs. Examples included the false assertion that the effectiveness of COVID-19 vaccines remained unproven and misidentification of the purposes of common medications. More concerning still, reducing the tainted data to 0.01% of the dataset still resulted in 10% of LLM responses containing misinformation, and a further reduction to 0.001% still yielded a 7% error rate. This highlights how sensitive LLMs are to trace amounts of misinformation, and suggests that a few strategically placed false articles on prominent websites could significantly degrade the accuracy of LLM-generated medical advice.
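A quick back-of-the-envelope calculation shows how small these shares are. The sketch below assumes a hypothetical corpus of one million documents (the article does not give the dataset size) and, purely for illustration, treats the reported rates as applying to a single pool of 5,400 queries.

```python
def share_to_count(total, percent):
    """Convert a percentage of `total` into a whole-number count."""
    return round(total * percent / 100)


HYPOTHETICAL_CORPUS_SIZE = 1_000_000  # assumed for illustration only
QUERIES = 5_400                       # number of queries reported in the study

# How many documents each poisoning level would correspond to.
for percent in (0.5, 0.01, 0.001):
    docs = share_to_count(HYPOTHETICAL_CORPUS_SIZE, percent)
    print(f"{percent}% of the corpus = {docs} documents")

# How many answers the reported misinformation rates would correspond to.
for rate in (10, 7):
    answers = share_to_count(QUERIES, rate)
    print(f"{rate}% of {QUERIES} queries = {answers} answers")
```

Under these assumptions, the lowest poisoning level amounts to only 10 documents in a million, while a 7% rate over 5,400 queries corresponds to 378 flawed answers.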

This vulnerability raises serious concerns about the reliability of LLMs, particularly in critical domains like healthcare, where misinformation can have dire consequences. The study’s findings underscore the urgent need for robust mechanisms to detect and mitigate the effects of data poisoning. While the researchers developed an algorithm to identify and cross-reference medical data within LLMs, they acknowledge the immense challenge of effectively purging misinformation from vast public datasets used to train these models.
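The article does not describe how the researchers' algorithm works internally, so the following is only a toy illustration of the general idea of cross-referencing: claims taken from a model's answer are checked against a small trusted reference set, and anything that cannot be matched is flagged for review. The names `TRUSTED_FACTS` and `flag_unverified`, and the triple format, are hypothetical.

```python
# Hypothetical trusted reference set of (subject, relation, object) triples.
TRUSTED_FACTS = {
    ("metformin", "treats", "type 2 diabetes"),
    ("ibuprofen", "treats", "pain"),
}


def normalize(triple):
    """Lowercase and trim each part so trivial variations still match."""
    return tuple(part.strip().lower() for part in triple)


def flag_unverified(claims, trusted=TRUSTED_FACTS):
    """Return the claims that are absent from the trusted set.

    A flagged claim is not necessarily false; it simply could not be
    confirmed against the reference and should be reviewed.
    """
    return [claim for claim in claims if normalize(claim) not in trusted]


# Toy usage: claims assumed to have been extracted from one model answer.
claims = [
    ("Metformin", "treats", "Type 2 diabetes"),
    ("Metformin", "treats", "hypertension"),
]
print(flag_unverified(claims))
```

A check of this kind is only as good as its reference set, which mirrors the difficulty the researchers describe: verifying individual outputs is tractable, but cleaning the underlying public datasets is far harder.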

The implications of this research extend far beyond the medical field. As LLMs are increasingly integrated into various aspects of our lives, from education and finance to legal and political discourse, the potential for malicious actors to manipulate these systems through data poisoning poses a significant societal threat. The ease with which misinformation can be injected into training data and then amplified by LLMs underscores the need for greater vigilance in data curation, model training, and output verification.

The challenge lies in the sheer scale and complexity of the data used to train LLMs. These models often ingest massive amounts of information from the internet, making it practically impossible to manually vet every piece of data for accuracy. Moreover, the dynamic nature of online information, with new content constantly being generated and modified, makes maintaining the integrity of training datasets a continuous and daunting task.

Moving forward, a multi-pronged approach is crucial. This includes developing more sophisticated algorithms for identifying and filtering misinformation, enhancing transparency in data sourcing and model training processes, and fostering greater public awareness about the potential biases and limitations of LLMs. Collaboration between AI researchers, domain experts, and policymakers is essential to establish guidelines and regulations that promote responsible development and deployment of these powerful technologies. The future of LLMs depends on our ability to address these challenges effectively, ensuring that these tools are used to enhance, rather than jeopardize, human well-being and informed decision-making.