AI’s Potential to Amplify Online Misinformation and Radicalization: A $7.5 Million DoD-Funded Study

The digital age has ushered in an era of unprecedented information access, but this access comes with a dark side: the proliferation of misinformation and radicalizing messages online. These deceptive and manipulative narratives pose a significant threat to societal stability, and their impact is only expected to intensify with the rapid advancement of artificial intelligence. Recognizing this growing danger, the U.S. Department of Defense has awarded a $7.5 million grant to a multi-institutional team led by Indiana University researchers to investigate the role AI plays in amplifying the influence of online communications, including the spread of misinformation and radicalization.

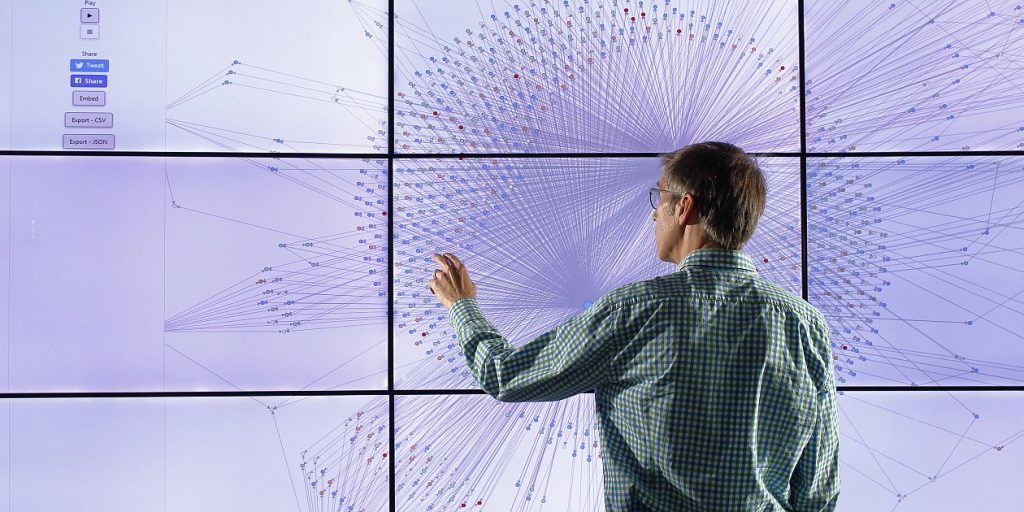

This five-year project, part of the DoD’s Multidisciplinary University Research Initiative, brings together a diverse group of experts from fields such as informatics, psychology, communications, folklore, AI, natural language processing, network science, and neurophysiology. Led by Professor Yong-Yeol Ahn of the IU Luddy School of Informatics, Computing and Engineering, the team aims to dissect the complex interplay between AI, social media, and online misinformation, providing insights that could empower the government to counter foreign influence campaigns and online radicalization efforts.

Central to the investigation is the concept of "resonance," a sociological phenomenon in which messages become more persuasive when they align with an individual’s existing beliefs, emotional biases, or cultural norms. Resonance can be a double-edged sword, capable of bridging divides or fueling polarization. The researchers hypothesize that AI’s ability to rapidly generate tailored text, images, and videos could supercharge the impact of resonant messages, for better or worse, by customizing content down to the individual level.

The research will use AI technology to create "model agents," virtual individuals interacting within a simulated environment, to study how information flows between groups and how it affects individuals. The team expects this approach to model the spread of information and beliefs more accurately than previous methods by accounting for the interplay of individual beliefs, social dynamics, and network effects. The project will also study real human responses to online information, using physiological measures such as heart rate to gauge the impact of "resonance."
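To make the idea of simulated agents concrete, here is a minimal toy sketch of an agent-based simulation in which an agent moves toward a neighbor's view more strongly when that view already sits close to its own. This is an illustration only, not the project's model: the `Agent` class, the `resonance` formula, the ring-shaped network, and the `rate` parameter are all assumptions introduced for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    belief: float  # hypothetical stance on one issue, in [-1, 1]


def resonance(belief, message):
    """Assumed resonance score in [0, 1]: 1 when the message matches the belief."""
    return 1.0 - abs(belief - message) / 2.0


def step(agents, neighbors, rng, rate=0.1):
    """One round: each agent hears one random neighbor and shifts toward that
    neighbor's belief, with the shift scaled by how strongly it resonates."""
    updated = []
    for i, agent in enumerate(agents):
        sender = agents[rng.choice(neighbors[i])]
        pull = rate * resonance(agent.belief, sender.belief)
        updated.append(Agent(agent.belief + pull * (sender.belief - agent.belief)))
    return updated


def simulate(n_agents=20, n_steps=50, seed=0):
    """Run the toy model on a ring network and return the belief history."""
    rng = random.Random(seed)
    agents = [Agent(rng.uniform(-1.0, 1.0)) for _ in range(n_agents)]
    # Assumed topology: each agent is connected to its two ring neighbors.
    neighbors = [[(i - 1) % n_agents, (i + 1) % n_agents] for i in range(n_agents)]
    history = [[a.belief for a in agents]]
    for _ in range(n_steps):
        agents = step(agents, neighbors, rng)
        history.append([a.belief for a in agents])
    return history
```

Calling `simulate()` returns one list of beliefs per round, so the first and last rows can be compared to see how opinions shift. A realistic study would need richer agents, empirically grounded networks, and validation against human data.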

The research departs from traditional models of belief systems. Instead of relying on simplistic categorizations like political affiliation, the team will employ a "complex network of interacting beliefs and concepts" integrated with social contagion theory. This dynamic model aims to capture how beliefs actually form, where factors such as social group affiliation or attitudes toward specific industries may predict certain beliefs better than political ideology does. For example, an individual’s stance on vaccine safety might be better predicted by their social circle or their views on the medical industry than by their political leanings.
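The vaccine example can be illustrated with a small toy calculation that combines an internal pull from related beliefs with a social pull from peers. Everything here is hypothetical and chosen only for illustration, including the concept names, the weights in `WEIGHTS`, the `social_weight` parameter, and the update rule itself. It does not represent the researchers' actual model.

```python
# Hypothetical link strengths between concepts (source -> target).
# A larger weight means the source belief pulls harder on the target belief.
WEIGHTS = {
    ("trust_medical_industry", "vaccines_safe"): 0.8,
    ("political_leaning", "vaccines_safe"): 0.1,
}


def update_belief(own, peers, target, weights=WEIGHTS, social_weight=0.5):
    """Return a new value for own[target], blending the pull of the person's
    related beliefs with the average belief of their peers."""
    terms = [(w, own[src]) for (src, dst), w in weights.items() if dst == target]
    total = sum(abs(w) for w, _ in terms)
    # Internal pull: weighted average of related beliefs (falls back to the
    # current belief if nothing links to the target).
    internal = sum(w * v for w, v in terms) / total if total else own[target]
    # Social pull: mean peer belief (falls back to the current belief if alone).
    social = sum(p[target] for p in peers) / len(peers) if peers else own[target]
    return (1 - social_weight) * internal + social_weight * social


# Two people with the same political leaning but opposite trust in medicine.
trusting = {"trust_medical_industry": 0.9, "political_leaning": 0.5, "vaccines_safe": 0.0}
skeptical = {"trust_medical_industry": -0.9, "political_leaning": 0.5, "vaccines_safe": 0.0}
```

With these assumed weights, `update_belief(trusting, [], "vaccines_safe")` comes out positive and `update_belief(skeptical, [], "vaccines_safe")` comes out negative, even though both people share the same political leaning. Passing a list of peers shifts the result toward the peers' average view.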

The implications of this research extend beyond countering misinformation and radicalization. Understanding how AI interacts with fundamental psychological principles can inform strategies for building trust in AI systems, which is crucial for applications ranging from autonomous navigation to healthcare. Because the project takes an open-access approach, its findings will be publicly available, benefiting not only government agencies concerned with national security but also the wider scientific community and the public at large. This transparency reflects the project’s commitment to advancing fundamental scientific understanding of AI’s societal impact and fostering responsible development and deployment of the technology.

The research team comprises six IU researchers from the Luddy School, all affiliated with IU’s Observatory on Social Media. Collaborators from other institutions include a media expert from Boston University, a psychologist from Stanford University, and a computational folklorist from the University of California, Berkeley. The project also provides research opportunities for numerous Ph.D. and undergraduate students at IU. By combining expertise from disparate disciplines, the team takes an interdisciplinary approach to the challenges posed by the intersection of AI, online communication, and human psychology. Its findings are expected to help in navigating an increasingly complex digital landscape and in mitigating the risks posed by AI-powered misinformation and radicalization campaigns.