Misinformation Tagging on Twitter: A Double-Edged Sword?

The fight against misinformation on social media platforms has produced a range of strategies, including misinformation tagging. These tags, applied either by individual users or by groups acting collectively, aim to alert readers to potentially false or misleading content. A recent study examined the effects of both tagging methods on Twitter (now X) users, focusing on how being tagged influences their subsequent engagement with diverse political viewpoints and content topics. The researchers analyzed a large dataset of tweets from users who received misinformation tags, comparing their posting behavior before and after the tag. The findings are mixed: collective tagging may encourage broader exploration of information, whereas individual tagging can lead to a retreat from diverse perspectives.

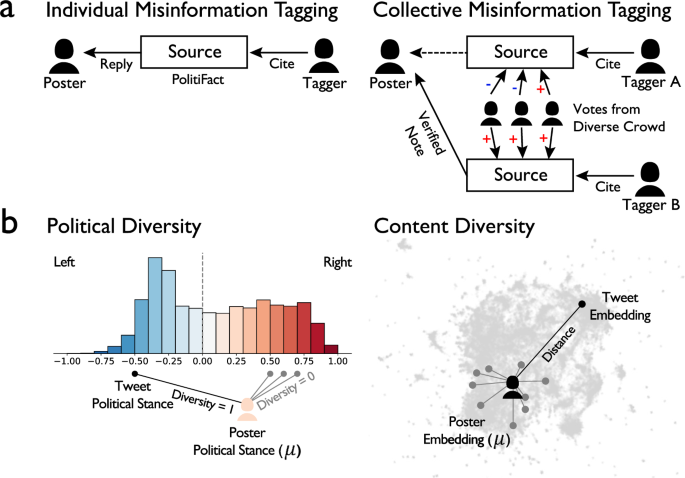

The study used two distinct approaches to identify users targeted by misinformation tags: individual tagging and collective tagging. For individual tagging, the researchers identified users whose tweets received replies containing links to fact-checking articles from PolitiFact. For collective tagging, they drew on Twitter’s Community Notes feature and focused on users whose tweets were flagged as misinformation, with the flag subsequently receiving above-threshold helpfulness votes from a diverse group of users. The researchers excluded tweets flagged as accurate, as well as tweets from organizational or celebrity accounts with over 50,000 followers. To isolate the effect of a single tagging instance, they also removed users who were tagged multiple times, leaving a final dataset of 7,733 users: 6,760 targeted by individual tagging and 973 targeted by collective tagging. Individual tags are more frequent because the process is simpler than the cross-validation that collective tags require.
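The selection rules described above can be sketched in a few lines of Python. This is an illustration only, not the researchers' pipeline: the `TagEvent` record and its field names are hypothetical, and applying the single-tag rule after the other exclusions is an assumption, since the summary does not say in which order the filters were applied.

```python
from collections import Counter
from dataclasses import dataclass

MAX_FOLLOWERS = 50_000  # accounts above this follower count were excluded


@dataclass(frozen=True)
class TagEvent:
    # Hypothetical record layout; the study's actual schema is not given.
    user_id: str
    followers: int
    method: str  # "individual" or "collective"
    flagged_as_misinformation: bool


def select_users(events):
    """Return {user_id: tagging method} for users tagged exactly once."""
    # Drop tweets flagged as accurate and accounts above the follower cap.
    kept = [
        e for e in events
        if e.flagged_as_misinformation and e.followers <= MAX_FOLLOWERS
    ]
    # Assumption: repeat tags are counted after the exclusions above.
    counts = Counter(e.user_id for e in kept)
    return {e.user_id: e.method for e in kept if counts[e.user_id] == 1}
```

Calling `select_users` on a list of events returns only the users who pass all three exclusions, keyed by the tagging method they received.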

To assess the impact of tagging on information diversity, the study measured two aspects of user behavior: political diversity and content diversity. Political diversity captured whether a user engaged with sources holding an opposing political stance, using source ratings from the Media Bias/Fact Check database. Content diversity captured the range of topics a user discussed, using a transformer-based sentence embedding model to compare the semantic similarity between a user’s tweets and their historical posting patterns. This approach allowed the researchers to quantify how far users moved into topics beyond their usual areas of interest.
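As a rough sketch of how these two measures could be computed, the Python below scores content diversity as one minus the cosine similarity between a tweet's embedding and the centroid of the user's earlier tweet embeddings, and treats political diversity as a simple left/right opposition check. Both choices are assumptions made for illustration: the summary does not state the exact formula, the embedding model, or the stance labels, and the embeddings are taken here as plain lists of floats produced elsewhere.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def content_diversity(tweet_vec, history_vecs):
    """Assumed score: 1 - similarity to the centroid of earlier tweets."""
    if not history_vecs:
        raise ValueError("need at least one historical tweet embedding")
    n = len(history_vecs)
    centroid = [sum(v[i] for v in history_vecs) / n for i in range(len(tweet_vec))]
    return 1.0 - cosine_similarity(tweet_vec, centroid)


def is_politically_diverse(user_leaning, source_leaning):
    """Assumed rule: True only when one side is 'left' and the other 'right'."""
    return {user_leaning, source_leaning} == {"left", "right"}
```

Under this scoring, a tweet that matches the user's usual topics scores near 0, while a tweet on an unrelated topic scores near 1.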

The researchers used two statistical models, Interrupted Time Series (ITS) and Delayed Feedback (DF), to estimate the causal effects of misinformation tagging. The ITS analysis examined changes in political and content diversity trends around the tagging event, while the DF analysis compared tagged tweets with a control group of similar tweets that were not tagged. Both models controlled for confounding factors, including user-specific characteristics and posting frequency. The two analyses produced consistent results: individual tagging led to a decrease in both political and content diversity, while collective tagging led to an increase in content diversity but not in political diversity.
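To make the ITS idea concrete, here is a minimal segmented-regression sketch in plain Python. It fits the textbook form y = b0 + b1*t + b2*post + b3*(t - t0)*post by ordinary least squares, where `post` is 1 at or after the tagging time `t0`. This is a generic illustration rather than the study's specification: it omits the user-level controls and posting-frequency adjustments mentioned above, and the function names are invented for this example.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_its(times, values, t0):
    """OLS fit of y = b0 + b1*t + b2*post + b3*(t - t0)*post."""
    rows = []
    for t in times:
        post = 1.0 if t >= t0 else 0.0
        rows.append([1.0, float(t), post, (t - t0) * post])
    k = 4
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, values)) for i in range(k)]
    b0, b1, b2, b3 = solve_linear_system(xtx, xty)
    return {"baseline": b0, "pre_slope": b1, "level_change": b2, "slope_change": b3}
```

In this form, `level_change` is the immediate jump in the diversity measure at the tagging event and `slope_change` is the change in its trend afterward. A negative `level_change` would correspond to the kind of drop the study reports for individual tagging.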

The findings indicate a potential downside to individual misinformation tagging. Users who received individual tags tended to narrow the range of information they engaged with, possibly to avoid further scrutiny or negative interactions. This narrowing could reinforce existing biases and limit exposure to alternative viewpoints. In contrast, collective tagging, with its emphasis on community consensus and nuanced feedback, appeared to encourage users to explore a wider range of topics. This suggests that the way misinformation is flagged can significantly influence subsequent user behavior.

To further validate their findings, the researchers conducted several robustness checks. They addressed potential biases introduced by bot accounts, examined the potential influence of insincere informational activities, and accounted for tweet sentiment. They also investigated the possibility of miscoded mentions of "community note" and restricted the sample to users who directly responded to individual tags. These checks confirmed the core findings of the study, strengthening the conclusion that individual tagging may backfire by discouraging open exploration of information, while collective tagging shows promise for fostering more diverse information consumption. These insights offer valuable guidance for social media platforms as they continue to refine strategies for combating misinformation.

The study highlights the complex and often unintended consequences of interventions designed to combat misinformation. Individual tagging might seem like a straightforward way to correct false information, but it can inadvertently discourage users from engaging with diverse perspectives. Collective tagging, which relies on community-based fact-checking and provides more contextualized feedback, appears to offer a more promising path toward healthier information ecosystems. The findings underscore the need to consider the psychological and social dynamics of online interactions when designing such interventions. Future research should explore these tagging methods in more detail and investigate alternative approaches that address misinformation without limiting intellectual exploration and open dialogue.