The Proliferation of "AI Slop" and Its Disinformation Threat

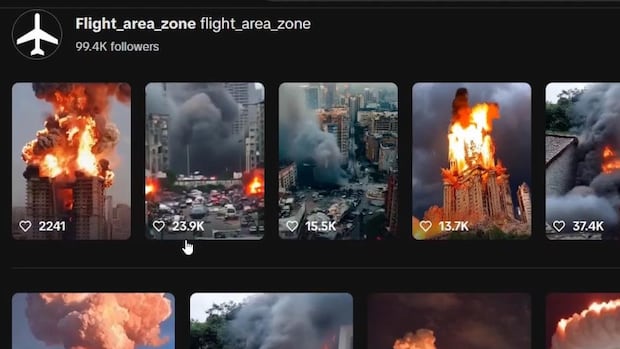

The digital age has ushered in an era of unprecedented access to information, but also an unprecedented challenge: the rapid spread of misinformation. A recent case involving a TikTok account, flight_area_zone, highlights this challenge vividly. This account hosted numerous AI-generated videos depicting explosions and burning cities, accumulating millions of views. These videos, lacking any disclaimers indicating their artificial origins, were subsequently shared across other social media platforms with false claims that they represented actual footage from the war in Ukraine. This incident underscores the growing problem of "AI slop" – low-quality, sensational content created using AI, often designed to maximize clicks and engagement.

The flight_area_zone account exemplifies how easily AI-generated content, even with noticeable flaws, can be misrepresented and fuel misinformation. While the videos contained telltale signs of AI generation, such as distorted figures and repetitive audio, they still convinced many viewers, sparking conversations about the supposed locations and impact of the depicted explosions. The spread of such content on social media platforms, often accompanied by misleading captions or commentary, further blurs the line between fabricated and genuine news, making it difficult for users to discern the truth.

This incident isn’t isolated. In October, a similar AI-generated video depicting fires in Beirut went viral, falsely presented as footage of an Israeli strike on Lebanon. The video was shared widely across social media platforms, including by prominent accounts, which further highlights how susceptible online audiences are to fabricated visual content. The combination of compelling visuals and misleading narratives creates a potent recipe for misinformation, one that can shape public perception and influence public discourse on critical global events.

The proliferation of AI slop presents a significant challenge to information integrity online. Experts warn that this trend contributes to a culture overly reliant on visuals, in which many people lack the training to critically evaluate what they see online. In war zones, where the reality on the ground is already highly contested, a flood of AI-generated content can breed skepticism toward legitimate information, making verifiable evidence of atrocities harder to share and harder to believe. That skepticism can be weaponized: those who wish to downplay or deny such events can dismiss genuine reports as just more AI-generated fabrications.

The dangers of AI slop extend beyond depictions of war. Researchers have noted a rise in AI-generated hate content, echoing warnings from the UN about the potential for AI to amplify harmful ideologies, including anti-Semitism, Islamophobia, racism, and xenophobia. The ease and low cost of producing large quantities of convincing misinformation with generative AI pose a serious threat to social cohesion and could exacerbate existing societal tensions. The ability to mass-produce tailored misinformation, designed to resonate with specific demographics and amplify their existing biases, marks a significant escalation in the challenges posed by online disinformation.

Social media platforms have introduced guidelines for labeling AI-generated content, but effective moderation remains a significant hurdle. The sheer volume of uploads, combined with the limited ability of current machine-learning tools to reliably detect manipulated images, means that automated systems and user reporting alone are not enough. Responsibility for identifying and flagging AI-generated content should therefore be shared: platforms need to invest in more robust detection and labeling, while users need the tools and knowledge to assess what they encounter. Educational initiatives that promote digital literacy, critical thinking, and source verification are essential to blunting the impact of AI slop and other forms of online misinformation.

Addressing the challenge of AI slop requires a multi-faceted approach. Social media platforms should prioritize the development of more robust detection and labeling mechanisms, including digital watermarking for AI-generated content. Swift takedowns of content identified as misinformation are also crucial. In parallel, empowering users with the skills to critically evaluate online content is paramount. This includes promoting digital literacy, emphasizing source verification, and fostering a culture of critical thinking. The fight against AI slop and its potential to distort reality and fuel misinformation requires a collective effort from platforms, users, and educators. Only through collaboration and a commitment to media literacy can we navigate the complexities of the digital age and ensure access to reliable information.