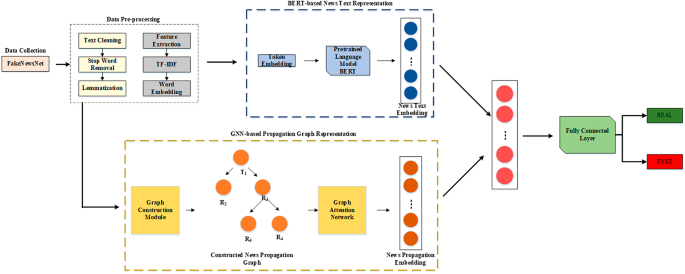

Our paper proposes a novel dual-stream fake news detection framework that integrates insights from both the text content of news articles and the social interactions and network structure through which news spreads across platforms. We combine BERT, a transformer-based model that produces deep contextualized token embeddings, with GNNs, graph-based models that capture relationship patterns between entities in the social context. The resulting two-stream model learns indicators from both text content and social propagation to assess information quality and distinguish fake news from real news.

In this paper, we explain how BERT captures contextual word relationships that enable it to distinguish real from fake news and assess information quality, as shown in Eq. (14). Meanwhile, GNNs with Graph Transformers model social interactions and information transmission flows over the graph structure, as shown in Eq. (17).

Furthermore, we propose a two-stream classification model based on both the text features extracted by BERT and the social context and information flow of the network graphs, which encode both social and textual information. A message-passing-based attention mechanism allows the graph neural components to dynamically capture propagation patterns, primarily to identify fraudulent social spread and coordinated posting by false news sources.
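To make the idea of attention-weighted message passing concrete, the following is a minimal sketch in plain Python, not the paper's implementation. It assumes scaled dot-product scores between raw node features in place of learned projections, and the toy graph, node names, and feature values are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def attention_message_passing(features, neighbors):
    """One round of attention-weighted neighbor aggregation.

    features:  dict mapping node -> feature vector (list of floats)
    neighbors: dict mapping node -> list of neighbor nodes
    Each node attends over itself and its neighbors. Scores are scaled
    dot products (an assumption; a trained GNN would use learned weights).
    """
    updated = {}
    for node, h in features.items():
        hood = [node] + list(neighbors.get(node, []))
        scale = math.sqrt(len(h))
        weights = softmax([dot(h, features[n]) / scale for n in hood])
        updated[node] = [
            sum(w * features[n][d] for w, n in zip(weights, hood))
            for d in range(len(h))
        ]
    return updated


# Hypothetical toy propagation graph: one post shared by three users.
features = {
    "post": [1.0, 0.0],
    "user_a": [0.9, 0.1],
    "user_b": [0.8, 0.2],
    "user_c": [0.0, 1.0],
}
neighbors = {
    "post": ["user_a", "user_b", "user_c"],
    "user_a": ["post"],
    "user_b": ["post"],
    "user_c": ["post"],
}
updated = attention_message_passing(features, neighbors)
```

In this toy setup the post's updated vector stays close to the features of the users most similar to it, which is the sense in which attention lets a node weigh some propagation paths more heavily than others.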

Key components of the model:

- The feature fusion layer between BERT and the GNN combines the text features and graph embeddings through an attention mechanism applied at the text (BERT) level, the graph level, and the layer above the Graph Transformers, merging the two sets of extracted features, as shown in Eq. (17).
- A multimodal attention mechanism learns node embeddings that capture the context of each node, where the query, key, and value are produced by the query, key, and value transformation matrices.
- The message-passing-based attention mechanism in the GNN allows graph nodes to dynamically weigh contributions based on the relationships and weights captured at the graph level, as shown in Eq. (14).
- The multi-head attention mechanism in GAT aggregates important neighbor nodes and computes weighted embeddings for each node, as shown in Eqs. (12) and (13).
- The Graph Transformer layer models global dependencies across the graph and casts the approach as a bridging-and-boost goal.

The two main components of the model are:

- Text feature extraction: BERT extracts the text features and computes token embeddings at the text level, yielding feature vectors that are fed into the multi-stream process.
- Social feature extraction: The GNNs extract feature vectors for graph nodes, which cannot be combined directly with the text features. However, by applying adaptive attention weights and mixing weights, the GNNs integrate social interactions and social behavior into the feature vector to capture the social context, which feeds into the two-stream model.

Classification layer: A multi-stream classification module processes and averages the fused BERT and GNN feature vectors to produce the final classification.
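The fusion and classification steps described above can be sketched as follows. This is an illustrative example under stated assumptions rather than the model's actual layers: `fuse` uses a hypothetical query vector to score each stream and returns their attention-weighted average, and `classify` is a plain logistic head. All vectors, weights, and the bias are made-up values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(text_vec, graph_vec, query):
    """Attention-weighted average of two same-sized stream vectors.

    query is a hypothetical learned vector that scores each stream.
    Returns the fused vector and the two attention weights.
    """
    streams = [text_vec, graph_vec]
    scale = math.sqrt(len(query))
    scores = [sum(q * x for q, x in zip(query, s)) / scale for s in streams]
    weights = softmax(scores)
    fused = [
        sum(w * s[d] for w, s in zip(weights, streams))
        for d in range(len(query))
    ]
    return fused, weights


def classify(fused, w, b):
    """Logistic head: returns a score in (0, 1) for the 'fake' class."""
    z = sum(wi * xi for wi, xi in zip(w, fused)) + b
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical stream outputs (stand-ins for BERT and GNN feature vectors).
text_vec = [0.2, 0.7, 0.1]
graph_vec = [0.6, 0.1, 0.3]
query = [1.0, 0.0, 1.0]

fused, weights = fuse(text_vec, graph_vec, query)
p_fake = classify(fused, w=[1.5, -0.5, 0.5], b=-0.2)
```

With these made-up values the graph stream receives the larger attention weight because it aligns better with the query. In a trained model the query, classifier weights, and bias would all be learned parameters.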

Additional challenging aspects of the process include:

- Message passing: relies on multiple interaction links between nodes, with attention over arbitrary node connections and multi-way relationship edges.
- Hierarchical layer: The Graph Transformer models graph structure with hierarchical dependencies, enabling efficient fusion of topological and relational local graph structures.
- Graph structure learning: The Graph Transformer learns node representations that reflect link structure, such as local graph traces and global dependency patterns (pushouts).
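As a rough illustration of how a Graph Transformer layer can capture global dependencies, the sketch below applies single-head scaled dot-product self-attention over all nodes at once, using query, key, and value projection matrices. It is a simplified assumption-laden example: the matrices `w_q`, `w_k`, `w_v` and the node features are hypothetical, and structural encodings, multiple heads, and residual connections are omitted.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row of a matrix."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention in which every node attends to every node.

    x: node feature matrix (num_nodes x dim)
    w_q, w_k, w_v: hypothetical projection matrices (dim x head_dim)
    Returns the updated node matrix and the attention matrix.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    attn = softmax_rows(scaled)
    return matmul(attn, v), attn


# Hypothetical features for three nodes and made-up projection matrices.
x = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
w_q = [[1.0, 0.2], [0.1, 1.0]]
w_k = [[0.9, 0.0], [0.0, 0.9]]
w_v = [[1.0, 0.0], [0.0, 1.0]]

out, attn = self_attention(x, w_q, w_k, w_v)
```

Each row of `attn` is a probability distribution over all nodes, so a node can draw on nodes that are not its direct neighbors. That is the difference from neighbor-only message passing.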

One key hurdle is that false news spreading patterns are rare and anomalous, often combining inconsistent or highly variable information from different sources. This can lead the GNN or BERT stream to misinterpret such patterns, producing false distinctions and errors in the modeled information flows.

We developed our approach with these challenges in mind, leveraging key families of methods such as multi-head attention and edge-level message passing for processing multi-modal semantics, and attention-based aggregation mechanisms for combining features.

Finally, we identify the artifacts and model design choices that guide the process, and evaluate them on datasets of real and fake news.

[Figure 1 shows the workflow for dual-stream fake news detection. The left subsystem processes news content, which is sent along the text stream through a series of BERT layers that output vector matrices. The right subsystem processes social network data, represented as a tripartite graph with node and edge types, and passes it to the GNN to produce graph-based representations. The two data streams are then fused: one Graph Transformer layer computes a word-structured vector from the fused features, and another applies a further multilinear transform to create a graph-structured vector. The resulting word and graph vectors are passed to a final textual attention head and a Graph Transformer output layer, whose hidden-state vectors feed the classification head that labels each article as real or fake (right side).]