The Rise of Deepfakes: Understanding the AI Behind the Illusion

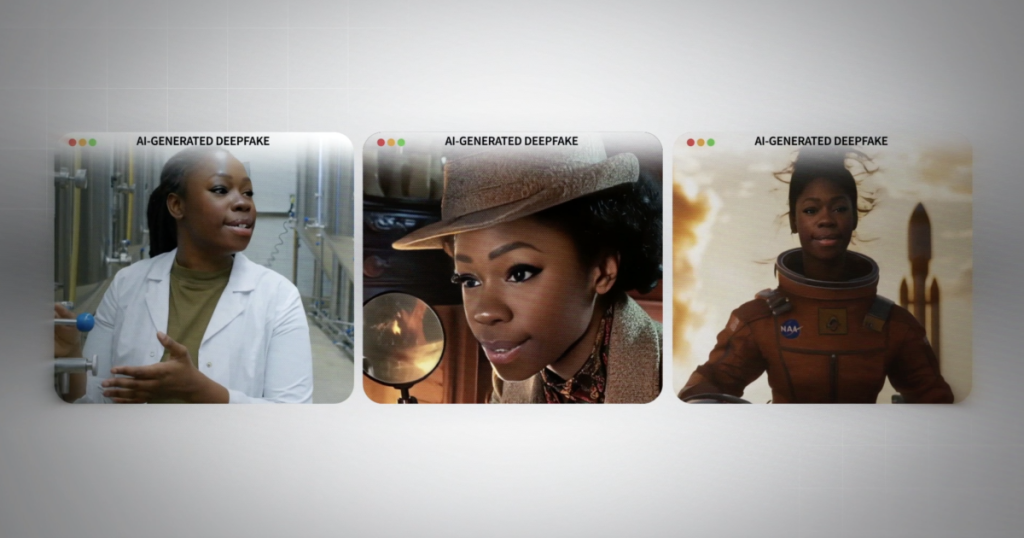

The digital age has long grappled with manipulated images, to the point that "Photoshopped" has become an everyday word. However, a new and more sophisticated threat has emerged: deepfakes. These AI-generated images and videos, which can be nearly impossible to tell apart from genuine footage, are spreading rapidly online, raising concerns about misinformation, manipulation, and the erosion of trust in digital content.

Many recent deepfakes, especially images generated from a text prompt, are produced by a type of AI known as a "diffusion model." These models work by iteratively removing noise from an image, much as a detective strips away disguises to reveal a suspect’s true identity. The AI is trained on vast datasets of images, learning the characteristics of objects, landscapes, and human faces. When asked to generate an image, the model treats the prompt as a clue: it starts from an image of pure random noise and works backward, removing the noise step by step until a realistic depiction consistent with its training emerges.
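To make the "adding noise" half of this idea concrete, here is a minimal Python sketch of the forward noising step used in DDPM-style diffusion models. It assumes a toy four-pixel "image" and a linear noise schedule; the function names and schedule values (`beta_start=1e-4`, `beta_end=0.02`, 1,000 steps) are illustrative choices, not taken from any particular product. During training, a network would be shown many noisy versions `x_t` together with their step `t` and asked to predict the noise that was added; that network is not shown here.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, growing linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the share of signal left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to noise level t: x_t = sqrt(a_bar)*x0 + sqrt(1 - a_bar)*noise."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * n for x, n in zip(x0, noise)]
    return x_t, noise  # a network would be trained to recover `noise` from (x_t, t)

rng = random.Random(0)
image = [0.9, -0.5, 0.2, 0.7]  # toy 4-pixel "image", values scaled to [-1, 1]
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
for t in (0, 500, 999):
    noisy, _ = add_noise(image, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

At `t = 0` the output is almost the original image; by the last step the signal factor `alpha_bar` has fallen close to zero and the values are essentially random noise, the "fully disguised" end of the detective analogy.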

The process can be pictured as a detective investigating a case involving a cat in disguise. Each day, the cat adds another disguise and becomes harder to recognize. The detective, however, studies the cat at every stage and learns to recognize it no matter how many disguises it wears. Similarly, during training a diffusion model sees images with progressively more noise added and learns what an object looks like at each noise level. When generating an image, the model reverses this process: it starts from pure noise and removes it step by step, guided by the user’s prompt, until a clear image emerges.
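The reverse half, generation, can be sketched in the same toy setting. This is a minimal illustration of DDPM-style sampling under the same illustrative linear schedule. `TOY_IMAGES`, `oracle_predict_noise`, and `generate` are hypothetical names, and the "model" here is an oracle that already knows the clean image for each prompt; it stands in for a trained network, which could only approximate the noise.

```python
import math
import random

# Hypothetical stand-in for what a model learned in training: one clean toy image per prompt.
TOY_IMAGES = {
    "cat": [0.8, -0.3, 0.5, -0.9],
    "dog": [-0.6, 0.4, -0.1, 0.7],
}

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (illustrative values) and its cumulative products."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars

def oracle_predict_noise(x_t, t, prompt, alpha_bars):
    """Stands in for the trained network: returns the exact noise separating x_t from
    the prompt's toy image. A real model can only approximate this."""
    a_bar = alpha_bars[t]
    clean = TOY_IMAGES[prompt]
    return [(x - math.sqrt(a_bar) * c) / math.sqrt(1.0 - a_bar) for x, c in zip(x_t, clean)]

def generate(prompt, num_steps=1000, seed=0):
    """DDPM-style sampling: start from pure noise and remove a little at every step."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in TOY_IMAGES[prompt]]  # pure random noise
    for t in reversed(range(num_steps)):
        eps = oracle_predict_noise(x, t, prompt, alpha_bars)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(1.0 - betas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Re-inject a small amount of fresh noise on every step except the last.
            x = [m + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x

print([round(v, 3) for v in generate("cat")])
```

Because the oracle knows the answer, the loop lands on the prompt's toy image; the prompt acts as the detective's clue, so swapping `"cat"` for `"dog"` changes which image the noise resolves into. A real model has learned a whole range of images for each prompt, so different random starting noise yields different but plausible results.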

One common application of deepfake technology is face swapping, the technique behind many popular social media filters. The process involves detecting and isolating a face in an image, converting it into the AI’s internal numerical representation, generating a different person’s face from that representation, and blending the new face back into the image. Applied to video, these steps are repeated frame by frame, producing the convincing deepfakes in which one person’s face appears on another’s body.
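The steps above can be sketched as a frame-by-frame loop. This is a toy Python illustration, not a working face-swap tool: frames are tiny grayscale grids, each face's bounding `Box` is assumed to come from a separate face detector, and `toy_encode` and `make_toy_decoder` are hypothetical arithmetic stand-ins for trained encoder and decoder networks.

```python
from dataclasses import dataclass
from typing import Callable, List

Frame = List[List[float]]   # toy grayscale frame: rows of pixel intensities
Latent = List[List[float]]  # toy "internal representation" of a face crop

@dataclass
class Box:
    """Where the face is in a frame; assumed to come from a separate face detector."""
    top: int
    left: int
    height: int
    width: int

def crop(frame: Frame, box: Box) -> Frame:
    """Isolate the face region."""
    return [row[box.left:box.left + box.width] for row in frame[box.top:box.top + box.height]]

def paste(frame: Frame, patch: Frame, box: Box, blend: float = 1.0) -> Frame:
    """Return a copy of the frame with the new face mixed in (blend=1.0 replaces fully)."""
    out = [row[:] for row in frame]
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            old = out[box.top + r][box.left + c]
            out[box.top + r][box.left + c] = blend * value + (1.0 - blend) * old
    return out

def swap_faces(frames: List[Frame], boxes: List[Box],
               encode: Callable[[Frame], Latent],
               decode_as_target: Callable[[Latent], Frame],
               blend: float = 1.0) -> List[Frame]:
    """Repeat isolate, encode, decode as the other person, paste back, for every frame."""
    result = []
    for frame, box in zip(frames, boxes):
        latent = encode(crop(frame, box))
        result.append(paste(frame, decode_as_target(latent), box, blend))
    return result

# Hypothetical arithmetic stand-ins for trained networks: the "encoder" keeps only how
# each pixel differs from the crop's average, and the "decoder" re-adds the target
# person's own average tone.
def toy_encode(face: Frame) -> Latent:
    mean = sum(sum(row) for row in face) / (len(face) * len(face[0]))
    return [[v - mean for v in row] for row in face]

def make_toy_decoder(target_tone: float) -> Callable[[Latent], Frame]:
    return lambda latent: [[v + target_tone for v in row] for row in latent]

# Usage: two identical 4x4 frames with a 2x2 "face" whose average tone is 0.5.
frame = [[0.1] * 4 for _ in range(4)]
frame[1][1], frame[1][2], frame[2][1], frame[2][2] = 0.4, 0.6, 0.6, 0.4
boxes = [Box(top=1, left=1, height=2, width=2)] * 2
out = swap_faces([frame, frame], boxes, toy_encode, make_toy_decoder(0.8))
print([[round(v, 2) for v in row] for row in out[0]])
```

In a real system the detector, encoder, and decoder are trained neural networks, and blending typically also smooths edges and matches color. A common design pairs one shared encoder with a separate decoder per person, so decoding person A's encoding with person B's decoder yields B's face in A's pose and expression.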

The increasing sophistication of deepfakes poses a significant threat. They have been used to spread disinformation during elections, create non-consensual explicit content, and even fabricate incriminating audio evidence. As these tools become cheaper and easier to use, the potential for misuse grows with them. This underscores the need for public awareness and education on how to identify and critically evaluate digital content.

Experts emphasize the importance of media literacy in the age of deepfakes. Much as the body builds immunity to a virus, individuals can learn to be more discerning consumers of online information, cultivating a healthy skepticism toward potentially manipulated content. Educational initiatives, such as those run by the nonprofit CivAI, aim to give people the knowledge and tools to navigate an increasingly complex digital landscape.

The fight against deepfake misuse requires a multi-pronged approach. Researchers are developing tools like the "DeepFake-o-meter" to help detect manipulated media. Educators are working to raise public awareness and promote critical thinking skills. Ultimately, individuals, governments, and media organizations share responsibility for working together toward a more informed and resilient online environment. By understanding the technology behind deepfakes and promoting media literacy, we can mitigate the risks posed by these powerful tools and safeguard the integrity of digital information.