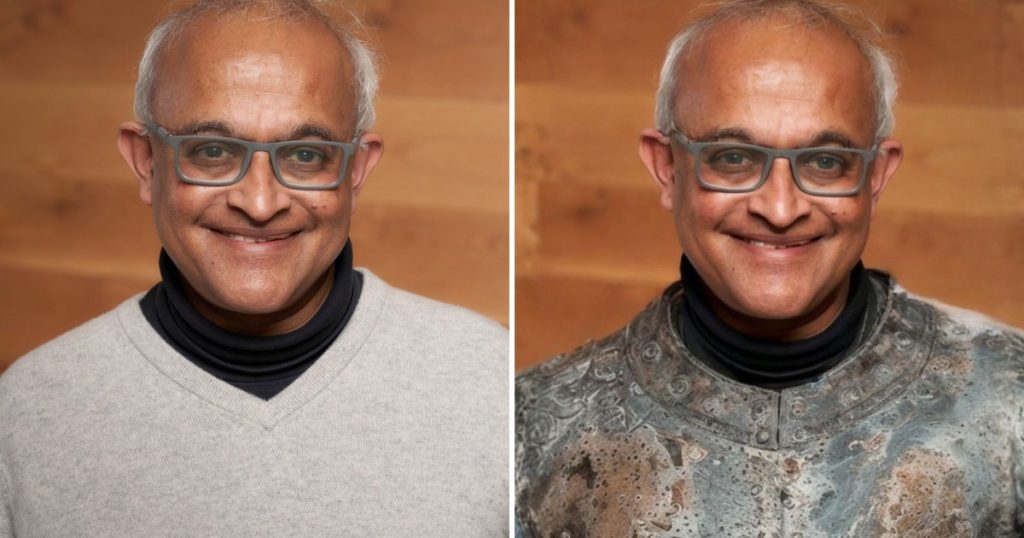

The Double-Edged Sword of Deepfakes: Navigating the Benefits and Dangers of AI-Generated Content

Deepfakes are digital artifacts, such as images, videos, and audio recordings, that are generated or modified by AI. They have become increasingly sophisticated and often blur the line between reality and fabrication. Public attention has focused largely on their potential for misuse in disinformation campaigns, hoaxes, and fraud. Yet the underlying technology also has a range of positive applications that receive far less public discussion. These range from recreating the voices of deceased loved ones to supporting deception strategies in counterterrorism, so the uses of deepfakes extend beyond malicious manipulation. This duality presents a complex challenge: how to harness the beneficial aspects of the technology while mitigating the risks posed by its malicious applications.

The negative implications of deepfakes are readily apparent. Their ability to fabricate events and statements convincingly has been exploited to spread misinformation, damage reputations, and sway public opinion. The growing accessibility of deepfake creation tools compounds the problem, because it lets people with minimal technical expertise produce deceptive content. As a result, the public finds it increasingly difficult to distinguish authentic content from fabrication. This erodes trust in online information and can destabilize social and political discourse.

However, the story of deepfakes is not solely one of deception. The same technology that can fabricate reality can also serve constructive purposes. In the entertainment industry, deepfakes can enable innovative visual effects and immersive experiences. In education, they can power interactive historical reenactments and personalized learning. They also hold potential in therapeutic settings. There, people could interact with digital representations of deceased loved ones, which may aid the grieving process. Realizing these benefits depends on responsible development and use of the technology.

To counter the potential harms of deepfakes, researchers are actively developing detection methods. Professor V.S. Subrahmanian, a leading expert in AI and security at Northwestern University, has led the development of the Global Online Deepfake Detection System (GODDS), a platform designed to identify deepfake content. GODDS is currently available only to a limited group of verified journalists. Even so, it represents a significant step toward giving individuals and organizations the tools to combat misinformation. Subrahmanian emphasizes a multi-pronged approach that combines technological solutions with critical thinking and media literacy skills.

Without widespread access to sophisticated detection tools like GODDS, individuals can still adopt practical strategies to avoid being deceived by deepfakes. Subrahmanian advises maintaining a healthy skepticism toward online content and questioning the source and accuracy of information before accepting it as fact. Scrutinizing visual and audio details can also help reveal potential deepfakes. Look for inconsistencies such as unnatural body movements, distorted reflections, or audio glitches. Recognizing and challenging one's own biases is crucial, because deepfakes often exploit pre-existing beliefs to become more persuasive. Seeking out diverse and reputable sources of information helps counter filter bubbles and echo chambers, which reinforce confirmation bias and increase susceptibility to manipulation.

Finally, individuals can take proactive steps to protect themselves from personalized deepfake attacks, such as voice-cloning scams. They can agree on verification methods with close contacts, such as questions grounded in shared experiences and inside knowledge that an impostor could not answer. This can keep fraudsters from impersonating loved ones and exploiting emotional vulnerabilities. Social media platforms are making efforts to curb the spread of deepfakes. However, moderating content effectively while respecting free-speech principles remains a difficult balance.

Ultimately, combating deepfakes requires a collective effort that combines technological advances, media literacy education, and proactive individual measures. We can foster critical thinking, promote responsible technology development, and equip people to tell truth from fabrication. Together, these measures let us realize the technology's potential for good while limiting the harm it can cause.