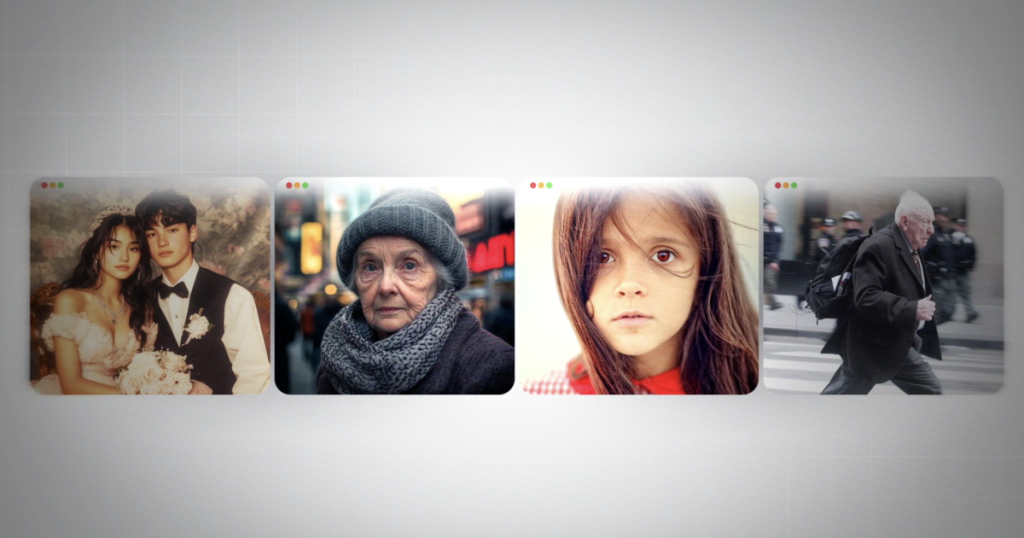

In recent years, the rise of artificial intelligence (AI) has produced increasingly realistic images that blur the line between genuine photographs and computer-generated content. As the technology improves, telling real images from fake ones has become a daunting challenge. Experts emphasize that media literacy must now include an understanding of AI-generated material. Matt Groh, an assistant professor at Northwestern University, has been at the forefront of this discussion. His team has released a guide to help people identify AI-generated images, presented in a preprint paper that outlines the key categories of artifacts to consider when assessing an image.

The guide groups these artifacts into five categories (anatomical, stylistic, functional, physical, and sociocultural implausibilities), each of which can help expose a suspicious image. Anatomical implausibilities include misshapen fingers, extra limbs, or an unusual number of teeth. Stylistic implausibilities show up as images that look too glossy or cartoonish. Functional implausibilities include garbled text or clothing rendered in ways that make no sense. Physical implausibilities, such as odd lighting or impossible reflections, can signal that an image was generated by AI. Finally, the sociocultural category asks whether an image is historically inaccurate or socially implausible.

Education plays a pivotal role in navigating a landscape where AI is increasingly ubiquitous. Cole Whitecotton, a senior research associate at the National Center for Media Forensics, stresses that the public should become familiar with AI tools to understand both their potential and their limitations. Hands-on experience with these technologies builds the critical thinking needed when consuming media online. Whitecotton encourages people to approach social media content with curiosity and skepticism, a habit that makes misleading visuals easier to spot.

Because AI-generated images and videos keep evolving, Groh and his team recognize the need for a framework that can adapt to changing technical capabilities. Their aim is to keep the guidance current and actionable, updating it as new generation techniques emerge. Groh's enthusiasm for sharing the framework reflects the need for a common foundation for continued conversation about AI-generated content.

Despite the challenges posed by the spread of AI-generated images, Groh remains optimistic that people can guard against misinformation. The Northwestern team has also set up a website where people can test their skill at distinguishing real images from AI-generated ones, reinforcing the educational side of the work. This interactive element both raises awareness and gives people practice at spotting potentially misleading content.

The conversation about AI-generated content is more relevant than ever, and it ties education closely to critical media skills. As people encounter increasingly sophisticated images online, they need the tools and knowledge to tell reality from artifice. Ultimately, greater awareness and understanding of AI manipulation in media will leave individuals better equipped to protect themselves from misinformation in the digital age.