In recent months, a man in Shanghai lost approximately $28,000 to a romance scam built around a "fake lover." According to Chinese state media, the scam was run using a Russia-based, AI-driven service called AI Int灶, which used virtual reality technology to generate convincing fake imagery and realistic personas for would-be partners. The scammers also fabricated an identity, medical reports, and security software to convince the victim that he had formed a real connection with what was in fact a "fictitious girlfriend."

The victim, identified only as Liu, never met his "fictitious lover" in person, yet he sent her money from his online bank account. The scammers paired virtual-reality imagery with deliberate emotional manipulation, backed by fake health reports and staged medical visits. AI Int灶 generated convincing images of the victim and his fake partner, along with messages sent to his phone, making it appear that the "fake lover" needed money to start a business and to cover a relative's medical bills.

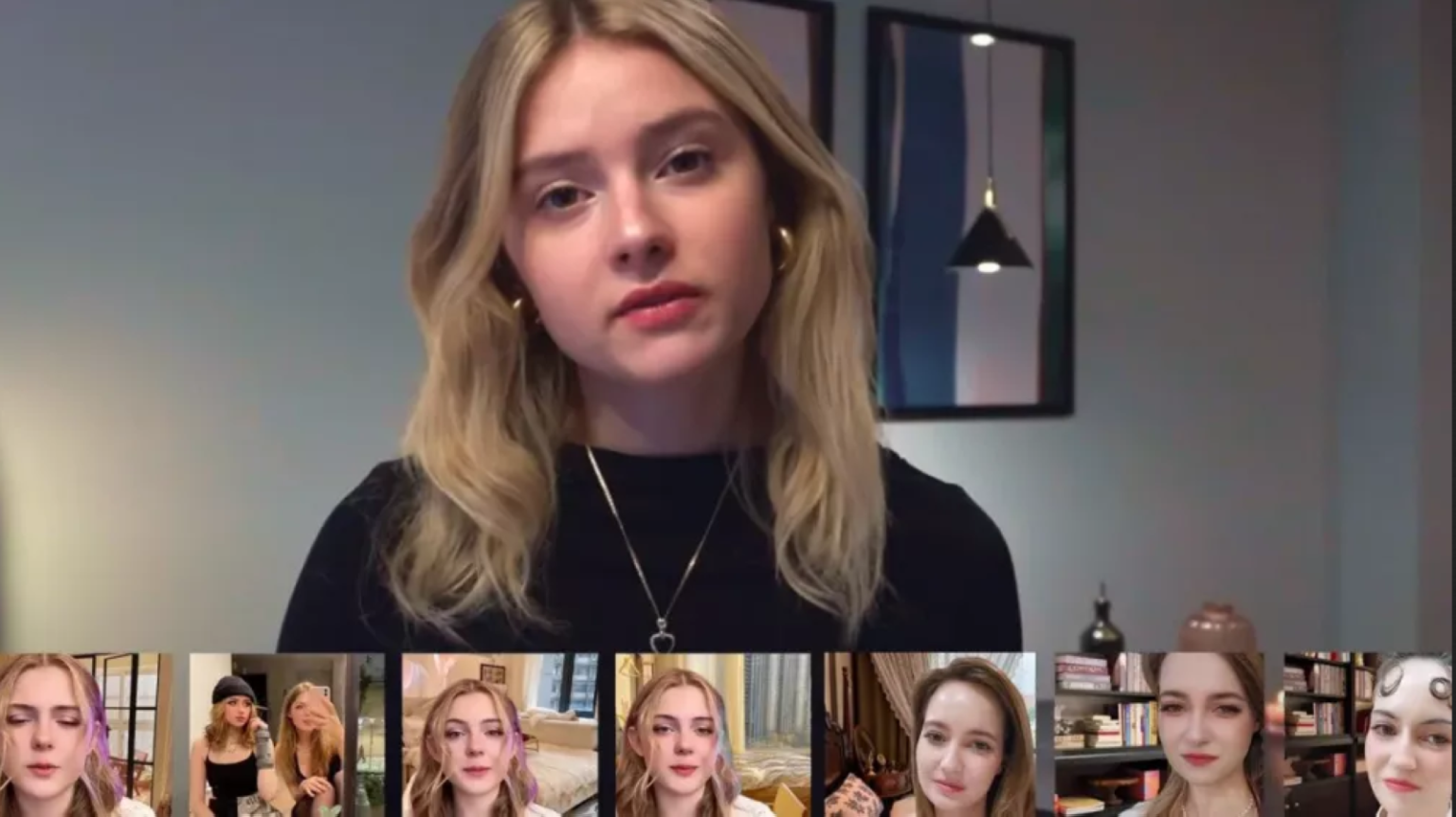

AI tools capable of generating realistic text, images, and video have become common in recent scams, especially romance scams. Earlier, social media giant Meta warned users to stay vigilant against increasingly sophisticated online romance scams. AI-powered apps and generative models have let scammers refine their tactics and produce more convincing documents to entrap victims.

This case is a cautionary tale for anyone who could be targeted by such scams. Entrepreneurs and organizations are advised to be cautious about how these AI tools are used and to consider the risks of non-traditional payment methods. Individuals, meanwhile, should be aware of the privacy risks attached to their online images and avatars, as detailed in the study mentioned on the rapid rise of AI-driven and generative models in illegal and unethical data-scraping contexts.