The Evolving Landscape of Synthetic Media Detection: Navigating the Challenges of AI-Generated Content

The rapid advancement of generative AI has ushered in an era of increasingly realistic synthetic media, blurring the lines between real and artificial content. While some AI-generated outputs still bear detectable traces of their artificial origins, the current level of verisimilitude represents only the starting point. As these technologies evolve, discerning authentic media from synthetic creations will become increasingly complex. Organizations like WITNESS are working on solutions, including promoting transparency in AI production and developing provenance and authenticity techniques, but even these require further refinement to address crucial issues like resilience, interoperability, and widespread adoption.

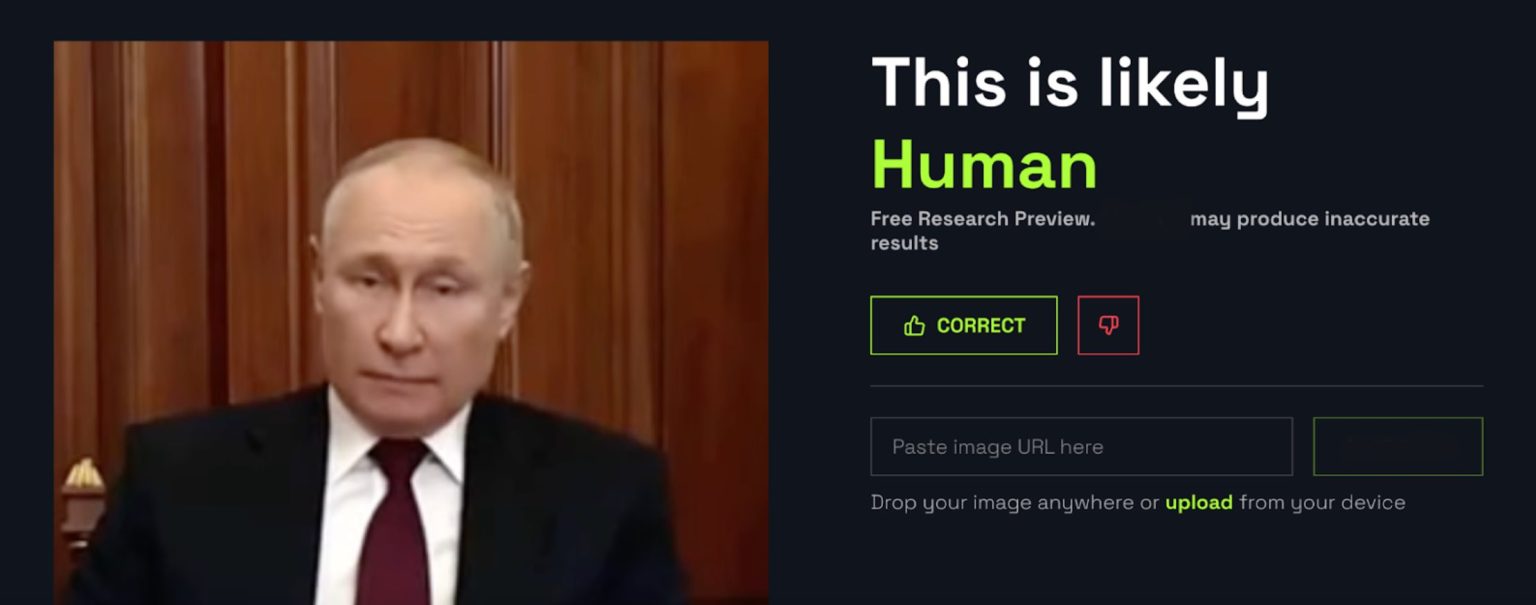

Evaluating the Effectiveness of Publicly Available AI Detection Tools

A crucial strategy in combating the proliferation of synthetic media is the development of AI detection tools. These tools aim to identify AI-generated or manipulated audio and visual content without relying on external context or corroboration. This article explores the capabilities and limitations of several publicly accessible detectors, offering insights into their accuracy, interpretability, and vulnerabilities based on tests conducted in February 2024. While these tools hold promise, they are not a foolproof solution and must be used with caution and a nuanced understanding of their limitations. The tools tested include Optic, Hive Moderation, V7, InVID, Deepware Scanner, Illuminarty, DeepID, and an open-source AI image detector.

Interpreting the Results of AI Detection Tools

The output of AI detection tools requires careful interpretation to avoid mischaracterization. Results are often presented as confidence scores or probabilities (e.g., 85% human) or as binary yes/no determinations. Understanding these results requires knowledge of the detection model’s training data, the specific types of AI manipulations it is designed to detect, and when it was last updated. Unfortunately, most online tools lack transparency regarding their development, making it challenging to assess the reliability of their output. It’s crucial to remember that even a negative result from a detector does not guarantee authenticity, as the content may still be synthetic or manipulated in ways undetectable by the tool. Further investigation using open-source intelligence (OSINT) techniques, such as reverse image search and geolocation, can provide additional context and corroboration.
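
One way to keep a probabilistic score from being over-read is to map it to a hedged verdict with an explicit inconclusive band. The following is a minimal sketch: the function name `interpret_score`, the thresholds 0.2 and 0.8, and the wording of each verdict are illustrative assumptions, not values used by any of the tools tested.

```python
def interpret_score(p_synthetic: float, low: float = 0.2, high: float = 0.8) -> str:
    """Map a detector's probability that content is synthetic to a hedged verdict.

    The thresholds are hypothetical; a real workflow should calibrate them
    against content whose origin is already known.
    """
    if not 0.0 <= p_synthetic <= 1.0:
        raise ValueError("p_synthetic must be between 0 and 1")
    if p_synthetic >= high:
        return "likely synthetic; corroborate with other methods"
    if p_synthetic <= low:
        # A low score is not proof of authenticity: the manipulation may be
        # of a kind the detector was never trained to recognize.
        return "no manipulation detected; authenticity NOT confirmed"
    return "inconclusive; investigate further (e.g., reverse image search)"


# A tool reporting "85% human" corresponds to a 0.15 probability of synthetic.
print(interpret_score(1 - 0.85))
```

Even the lowest band avoids the word "authentic", which mirrors the point that a negative result is never a guarantee.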

Factors Influencing the Accuracy of AI Detection Tools

The accuracy of AI detection tools is heavily reliant on the quality, quantity, and diversity of their training data. A tool trained on a specific type of synthetic media, such as deepfakes of public figures, may struggle to identify manipulations created using different techniques or targeting less prominent individuals. Similarly, a model trained on clear audio recordings might be less effective with noisy or overlapping audio. This dependence on specific datasets limits the generalizability of these tools and underscores the need for continuous updates and retraining as generative AI techniques evolve. For instance, a tool designed to detect GAN-generated images may misclassify content created using diffusion models.
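
A generalization gap of this kind can be made visible by breaking a tool's hit rate down by the type of generator that produced each test item. The sketch below assumes every test item is known to be synthetic and labeled with its generator type; the function name `detection_rate_by_source` and the sample outcomes are invented for illustration and are not measurements from the tests described here.

```python
from collections import defaultdict


def detection_rate_by_source(results):
    """Return the share of synthetic items flagged, grouped by generator type.

    `results` is an iterable of (generator_type, was_flagged) pairs in which
    every item is known to be synthetic.
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    for generator_type, was_flagged in results:
        total[generator_type] += 1
        if was_flagged:
            flagged[generator_type] += 1
    return {name: flagged[name] / total[name] for name in total}


# Made-up outcomes for illustration only; these are not real test results.
sample = [
    ("gan", True), ("gan", True), ("gan", True), ("gan", False),
    ("diffusion", True), ("diffusion", False), ("diffusion", False), ("diffusion", False),
]
rates = detection_rate_by_source(sample)
print(rates)
```

A single overall accuracy figure would hide the difference between the two groups, which is why a per-generator breakdown is more informative when judging whether a tool suits a given piece of content.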

Circumventing AI Detection: Manipulation and Evasion Techniques

Detection tools are vulnerable to various manipulation techniques that can lead to false negatives. Simple edits like cropping, scaling, or reducing resolution can deceive detectors, even when the content is known to be synthetic. Furthermore, AI-generated content can be intentionally designed to mimic real-world imperfections, such as blur and motion artifacts, to evade detection. Advanced editing techniques like in-painting and out-painting, which seamlessly alter or extend images, further complicate detection efforts, especially when applied to real images. Even seemingly benign actions like compressing a file or re-recording an AI-generated audio clip can degrade quality and remove crucial metadata, hindering the detector’s ability to accurately assess the content’s origin.
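
The fragility described above can be probed by applying simple edits to content already known to be synthetic and checking whether the verdict changes. The sketch below is a toy harness under loud assumptions: the image is a plain 2D grid of numbers, and `toy_detector` is a hypothetical stand-in that looks for a fine checkerboard pattern. It does not represent how any real detector works; it only shows how a downscale can erase the very traces a detector depends on.

```python
def crop(pixels, margin):
    """Remove `margin` rows and columns from every edge of a 2D pixel grid."""
    return [row[margin:len(row) - margin] for row in pixels[margin:len(pixels) - margin]]


def downscale(pixels, factor):
    """Keep every `factor`-th pixel in both directions (nearest-neighbour)."""
    return [row[::factor] for row in pixels[::factor]]


def toy_detector(pixels):
    """Hypothetical detector: flags a grid whose horizontal neighbours differ sharply."""
    diffs = [abs(a - b) for row in pixels for a, b in zip(row, row[1:])]
    return bool(diffs) and sum(diffs) / len(diffs) > 0.5


def verdict_changes(pixels, detector, edits):
    """Return the names of edits that flip the detector's verdict."""
    baseline = detector(pixels)
    return [name for name, edit in edits.items() if detector(edit(pixels)) != baseline]


# Placeholder 8x8 "synthetic" image carrying a fine checkerboard artifact.
image = [[(i + j) % 2 for j in range(8)] for i in range(8)]
edits = {
    "crop_1px": lambda p: crop(p, 1),
    "downscale_2x": lambda p: downscale(p, 2),
}
flipped = verdict_changes(image, toy_detector, edits)
print(flipped)
```

In practice, the `detector` argument would wrap whichever tool is under evaluation, and the edits would be real image or audio operations such as resizing and recompression.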

Best Practices for Utilizing AI Detection Tools in Verification Efforts

Despite their limitations, AI detection tools can be valuable assets in the verification process when used judiciously and in conjunction with other investigative methods. Journalists, fact-checkers, and researchers should approach these tools with a healthy skepticism, recognizing their vulnerability to manipulation and the dynamic nature of AI technology. It’s essential to thoroughly investigate the tool’s development process, understand its limitations, and interpret its confidence levels carefully. Transparency in reporting is crucial, clearly outlining the tools and methods employed, their limitations, and the rationale behind any conclusions drawn. Furthermore, users should be aware of the privacy and security implications of submitting content to online detection platforms. The rise of plausible deniability, where any content can be dismissed as a potential deepfake, underscores the complexity of navigating this evolving landscape.

Navigating the Future of Synthetic Media Detection

The cat-and-mouse game between generative AI and detection tools demands constant vigilance and innovation. As AI technologies become increasingly integrated into everyday software and creative processes, the task of identifying synthetic media will only become more challenging. The development of more sophisticated detection techniques is imperative, as is a holistic approach to addressing the broader implications of synthetic media across the information ecosystem. This includes promoting media literacy, fostering transparency in AI development, and developing robust provenance and authentication mechanisms. The future of synthetic media detection hinges on a multi-faceted approach that combines technological advancements with critical thinking, ethical considerations, and a commitment to upholding the integrity of information.