Introduction

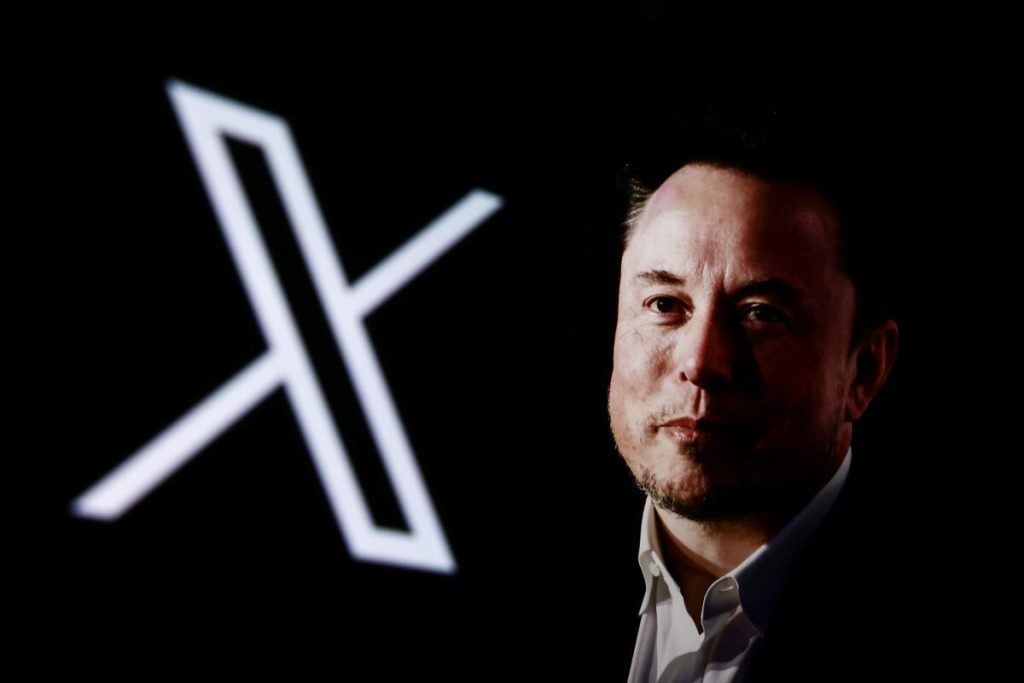

The Digital Democracy Institute of the Americas (DDIA) recently conducted a study of X’s Community Notes system, a user-driven fact-checking initiative. The study aimed to assess how effectively the system combats misinformation and what that means for the spread of misinformation on social media platforms.

Dissemination Issues and Bot Activity

The study revealed significant challenges in the Community Notes system. Over 90% of notes were never made public, and the data show a decline in user-generated content from 2021 to 2025. Specifically, 9.5% of English-language notes were publicly visible in 2023, compared with 4.9% in 2025. Spanish-language notes saw a small improvement. The largest contributors were often bots rather than human users. These automated accounts, which have the potential to generate false content, were the dominant force behind the notes submitted.

Challenges in Approval Process

The study found issues with the system’s peer review mechanism. X assigned automated accounts to submit notes, raising concerns about the quality and validity of the content generated. The algorithm prioritized agreement among raters with differing political viewpoints over factual accuracy. This creates a dilemma: accurate fact-checks can stall while they wait for consensus, and in the meantime misinformation takes longer to be flagged and keeps spreading even when corrections are available.
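The consensus requirement described above can be illustrated with a small sketch. This is not X's actual scoring code. The function name `note_is_shown`, the viewpoint group labels, and the thresholds are all hypothetical, chosen only to show how a rule that demands agreement across groups can hold back a note regardless of whether it is accurate.

```python
from collections import defaultdict

def note_is_shown(ratings, min_helpful_share=0.5, min_groups=2):
    """Hypothetical rule: show a note only if every viewpoint group
    that rated it found it helpful by a majority.

    ratings: list of (viewpoint_group, is_helpful) pairs.
    The group labels and thresholds are illustrative assumptions.
    """
    helpful = defaultdict(int)
    total = defaultdict(int)
    for group, is_helpful in ratings:
        total[group] += 1
        if is_helpful:
            helpful[group] += 1

    # Require ratings from at least `min_groups` distinct groups.
    if len(total) < min_groups:
        return False

    # Every group must independently clear the helpfulness threshold.
    return all(helpful[g] / total[g] > min_helpful_share for g in total)


# A note that one group endorses and another rejects stays hidden,
# no matter how accurate it is.
contested = [("group_a", True), ("group_a", True), ("group_b", False)]
agreed = [("group_a", True), ("group_b", True)]
print(note_is_shown(contested))
print(note_is_shown(agreed))
```

Under this toy rule, accuracy never enters the decision. Only the pattern of agreement does, which is the trade-off the study criticizes.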

Impact on Misinformation and Comparisons

A peer review process that put consensus ahead of factual accuracy hindered the elimination of misinformation. Case studies of conflict scenarios, such as the Israel-Hamas conflict, demonstrate how false content persisted even though accurate information was available from reputable sources. Traditional fact-checking methods, which involve professional organizations and active engagement with users, are described as more effective in addressing misinformation. The Community Notes system’s reliance on user-generated content, without the rigorous oversight of traditional methods, was seen as a flaw.

Recommendations for Improvement

To enhance the Community Notes system, several actions are proposed: 1) incorporate professional fact-checkers to ensure factual accuracy; 2) improve transparency in how notes are classified and provide users with evaluation guidelines; 3) address bot activity through measures to detect and mitigate its impact; 4) adjust the algorithm’s priorities to favor accuracy over ideology. These recommendations aim to maintain the system’s effectiveness while addressing its challenges.

Conclusion

Despite its success in some contexts, the Community Notes system on X appears to lag behind more robust traditional methods in addressing misinformation. The dominance of automated bots, which can generate false statements, underscores the need for reforms. The system allows some content to receive less scrutiny, and it also highlights the potential for misinformation to persist longer. Addressing bot activity, enhancing transparency, and prioritizing factual accuracy could be key to improving the system’s effectiveness in combating misinformation.