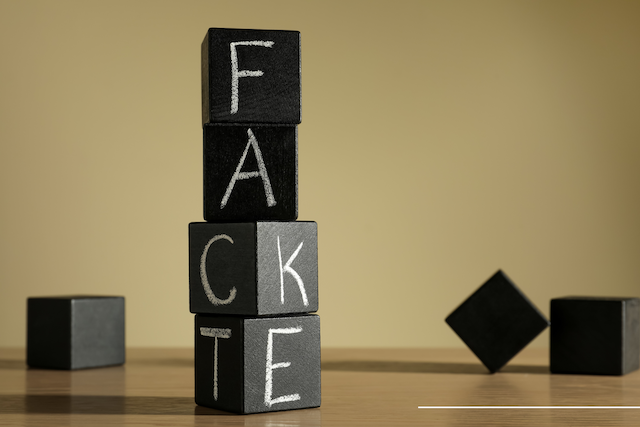

The Rise of Generative AI and the Erosion of Truth: Navigating the New Era of Misinformation

The digital landscape is undergoing a seismic shift, fueled by the rapid advancement of Generative Artificial Intelligence (GenAI). This technology, which can produce realistic images, videos, and text with unprecedented ease, has democratized content creation while blurring the line between reality and fabrication. The recent removal of third-party fact-checkers from major social media platforms, combined with the proliferation of AI-generated content, has created a perfect storm for the spread of misinformation. Together, these factors make new strategies and sharper critical thinking skills urgently necessary for navigating the digital world. The AI-generated image of a house standing unscathed amid the devastating Los Angeles wildfires is a stark reminder of GenAI's potential to deceive and manipulate.

The accessibility of GenAI tools has given individuals creative abilities once limited to professionals. This democratization has a dark side, however: malicious actors can exploit the same tools. Creating and disseminating convincing yet entirely fabricated visual content has become increasingly easy, posing a significant threat to the integrity of information online. Such manipulation can sway public opinion, undermine trust in institutions, and even incite social unrest. Experts warn that AI-generated misinformation is especially dangerous during crises such as natural disasters or elections, when accurate information is paramount. Fabricated visuals often spread across social media faster than they can be debunked, allowing false narratives to take root before corrections catch up.

The sophistication of GenAI poses a significant challenge to traditional methods of content verification. What once seemed like futuristic technology is now readily available, enabling the creation of images and videos that are virtually indistinguishable from reality. This has rendered traditional safeguards such as watermarks largely ineffective: AI-powered tools can erase them with ease, making it simple to manipulate and repurpose copyrighted material. Ironically, the devices we use every day often advertise AI-enhanced editing features as selling points, further normalizing the technology and blurring the line between authentic and fabricated content. The implications for creative professions such as graphic design are also profound, as automation threatens to disrupt traditional roles and raises concerns about job displacement.

Identifying AI-generated content is a complex and evolving challenge. Researchers are developing detection techniques, including machine learning models that analyze images for anomalies in color, lighting, shadows, and textures, but no foolproof method currently exists. Initiatives such as the Coalition for Content Provenance and Authenticity (C2PA) are working to establish industry standards for verifying the origin of digital content, for example by attaching provenance information to files and embedding digital watermarks and hidden patterns in AI-generated images. Even these methods have limitations. Watermarks can be removed, and metadata (the descriptive information embedded in an image file) can be stripped or altered, making it difficult to establish provenance definitively.

For the average user, telling real from fake requires a combination of vigilance and skepticism. Experts recommend examining images for telltale signs of AI generation: faces that are unnaturally symmetrical or too perfect, backgrounds with mismatched lighting or textures, objects placed implausibly within a scene, other composition errors, and digital artifacts such as pixelation or over-smoothing. Developing an eye for these subtle inconsistencies is crucial in the fight against misinformation. A systematic approach to evaluating online content also helps. The SIFT method (Stop, Investigate the source, Find better coverage, and Trace claims to their original context), for instance, equips individuals to make informed judgments about the information they encounter.

In this era of readily accessible AI-powered manipulation, critical thinking is a fundamental skill for navigating the digital landscape. The ability to question, analyze, and verify information matters more than ever, and a healthy dose of skepticism is essential, particularly toward sensational or provocative content. Cross-referencing claims with reputable sources and seeking out multiple perspectives are vital strategies for separating fact from fiction. With third-party fact-checkers gone from major platforms, the burden of verification falls squarely on individual users, which makes media literacy indispensable. Safeguarding truth in the digital age depends not only on technological advances but also on informed, discerning users. Cultivating a culture of critical thinking and media literacy is paramount to combating misinformation and preserving the integrity of information in an increasingly AI-driven world.