Meta’s Shift in Misinformation Strategy: From Fact-Checking to Community Notes

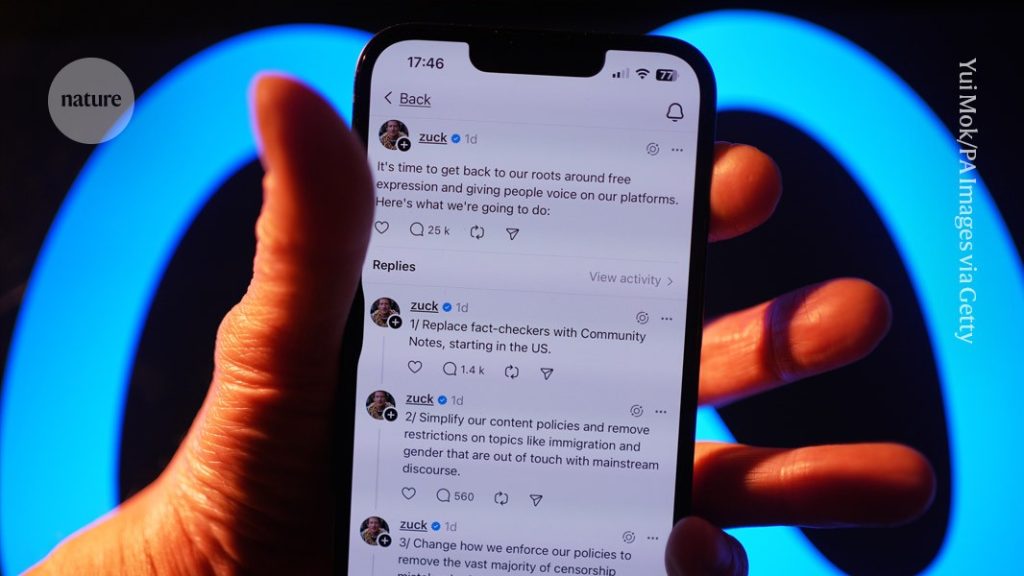

In a significant shift in its approach to combating misinformation, Meta, the parent company of Facebook, has announced its intention to discontinue its third-party fact-checking program. Established in 2016, this program relied on independent organizations to verify the accuracy of selected articles and posts on the platform. Meta now plans to replace this system with a community-driven approach, mirroring the "Community Notes" feature on X (formerly Twitter). This decision has sparked debate about the effectiveness of fact-checking, the potential biases within these programs, and the implications of relying on community-based moderation.

Meta justifies its decision by citing concerns about political bias and censorship within the fact-checking system. Joel Kaplan, Meta’s chief global affairs officer, argued that experts, like everyone else, possess their own biases, which can influence their selection of content for fact-checking and the manner in which they conduct their assessments. This statement raises questions about the inherent challenges of maintaining objectivity in the fight against misinformation, especially in the politically charged digital landscape.

Proponents of fact-checking argue that these initiatives have a demonstrable positive impact on public perception and trust in information. Research suggests that fact-checking can effectively reduce belief in false claims. A meta-analysis of fact-checking studies found that fact-checks had a positive overall effect on the accuracy of political beliefs, supporting the argument that these programs can help correct misinformation and promote more informed public discourse. While fact-checking may not prevent misinformation entirely, it can mitigate its spread and impact after it has entered the public domain.

However, the effectiveness of fact-checking is not uniform across all issues. It tends to be less effective when dealing with highly polarized topics, such as Brexit or the US elections. Individuals with strong partisan affiliations often resist information that challenges their pre-existing beliefs, rendering fact-checks less impactful in these contexts. This raises concerns about the limitations of fact-checking in bridging divides and fostering consensus in deeply divided societies.

Despite its limitations in highly polarized settings, fact-checking can still provide valuable benefits. Flagging false or misleading content on platforms like Facebook can reduce its visibility and discourage its spread. Even if it doesn’t change the minds of staunch believers, it can prevent misinformation from reaching a wider audience. Furthermore, the presence of fact-checks within the information ecosystem can have broader ripple effects, influencing the perception and behavior of users beyond the direct impact on those who encounter the fact-checked content. These indirect effects are often difficult to measure but can contribute to a more informed and resilient digital environment.

Meta’s concerns about bias within fact-checking programs raise important questions, but the argument that right-leaning misinformation is flagged more often due to bias may not fully capture the reality. Rather, the higher frequency of fact-checks targeting right-leaning content may reflect the volume of misinformation originating from that side of the political spectrum. If one ideological group generates a disproportionate amount of misinformation, it naturally follows that more fact-checks will be directed towards its content. This doesn’t necessarily indicate bias in the fact-checking process itself but rather a disparity in the production and dissemination of misinformation.

Moving forward, Meta’s transition to community-based moderation with "Community Notes" introduces a new set of challenges, especially concerning the potential for manipulation and the spread of misinformation by coordinated groups. The success of this new approach will depend on the platform’s ability to foster a well-informed and discerning community of contributors who can effectively identify and flag misinformation. The shift also raises questions about the platform’s commitment to independent, expert-led fact-checking and the future of combating misinformation in the age of social media.