A Signal-Detection Framework for Combatting the Misinformation Epidemic

The digital age offers unprecedented access to information, but it has also ushered in an era of rampant misinformation that threatens individuals and societies alike. From fabricated news stories influencing elections to false health advice jeopardizing public health, the spread of misleading information online has become a pressing global issue. Traditional approaches to combatting this "infodemic" have proven largely insufficient, highlighting the need for more sophisticated strategies. A recent study published in Nature proposes a framework based on signal detection theory, offering a new lens through which to understand and intervene against the proliferation of misinformation. The framework moves beyond simplistic notions of true and false information and emphasizes the interplay between the characteristics of misinformation, the cognitive processes of individuals encountering it, and the interventions designed to counteract its effects.

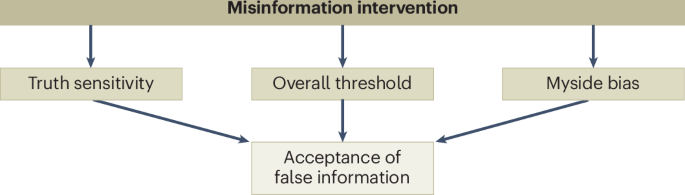

The signal-detection framework treats the problem of misinformation as analogous to detecting a faint signal amid noise. Genuine information acts as the signal, while misinformation represents the noise obscuring its clarity. Just as a radar operator must discern a true target from background clutter, individuals navigating the online landscape must differentiate between credible information and misleading content. The framework acknowledges the inherent uncertainty in this process: judgments about the veracity of information are probabilistic rather than absolute. Two key concepts underpin the approach. Sensitivity is the ability to correctly identify and reject misinformation, and specificity is the ability to correctly identify and accept genuine information. Note that under these definitions misinformation is the item being caught, so a false alarm is a true claim that gets wrongly flagged. Crucially, the framework highlights the potential trade-off between the two: increasing sensitivity (being more aggressive in flagging potential misinformation) can unintentionally decrease specificity (more true information is incorrectly flagged), potentially suppressing legitimate speech and fostering distrust.
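To make the two measures concrete, here is a minimal Python sketch that computes them from a set of labelled judgments. It follows the definitions given above (misinformation is the item being flagged). The `Judgment` record, the `sensitivity_specificity` helper, and the sample counts are hypothetical illustrations and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    is_misinformation: bool  # ground truth, assumed known for evaluation purposes
    flagged: bool            # the decision made by a reader or a flagging system


def sensitivity_specificity(judgments):
    """Return (sensitivity, specificity) for a list of Judgment records."""
    # Misinformation that was flagged (hits) and missed (misses).
    tp = sum(j.is_misinformation and j.flagged for j in judgments)
    fn = sum(j.is_misinformation and not j.flagged for j in judgments)
    # Accurate items that were left alone, and accurate items wrongly flagged.
    tn = sum(not j.is_misinformation and not j.flagged for j in judgments)
    fp = sum(not j.is_misinformation and j.flagged for j in judgments)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical data: 4 false items (3 flagged) and 6 accurate items (1 flagged).
sample = (
    [Judgment(True, True)] * 3
    + [Judgment(True, False)] * 1
    + [Judgment(False, False)] * 5
    + [Judgment(False, True)] * 1
)
sens, spec = sensitivity_specificity(sample)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
# prints: sensitivity=0.75, specificity=0.83
```

In this made-up sample, three of four false items are caught (sensitivity 0.75), while one of six accurate items is wrongly flagged (specificity about 0.83).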

The strength of the signal-detection framework lies in its ability to analyze the effectiveness of various interventions designed to combat misinformation. These interventions can be broadly categorized as targeting the source, the message, or the receiver of information. Source-based interventions focus on identifying and addressing the originators of misinformation, ranging from fact-checking organizations debunking false claims to social media platforms suspending accounts spreading disinformation. Message-based interventions aim to modify the content itself, such as adding warning labels to misleading posts or promoting credible alternatives. Receiver-based interventions, meanwhile, empower individuals to better discern misinformation through media literacy training, critical thinking skills development, or nudges encouraging lateral reading and source verification.

By applying the signal-detection framework, researchers can evaluate the impact of these interventions on both sensitivity and specificity. For example, a highly sensitive intervention might successfully flag a large proportion of misinformation but might also inadvertently flag a significant amount of accurate information, reducing specificity. This could lead to a phenomenon known as the "cry wolf effect," where repeated exposure to false alarms erodes trust in the intervention and reduces its overall effectiveness. Conversely, an intervention with high specificity might minimize the incorrect flagging of true information but might fail to capture a substantial portion of the circulating misinformation, limiting its impact on the problem. The framework, therefore, encourages a balanced approach, optimizing interventions to maximize both sensitivity and specificity while minimizing unintended consequences.
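The trade-off described above can be illustrated with a small Python sketch. It assumes, purely for illustration, that each item carries a "suspicion score" and that an intervention flags everything at or above a threshold. The scores, the thresholds, and the `rates_at` helper are invented for this example and do not come from the study.

```python
# Hypothetical suspicion scores in [0, 1]; higher means "looks more like misinformation".
misinfo_scores = [0.35, 0.55, 0.70, 0.80, 0.90]
accurate_scores = [0.10, 0.20, 0.30, 0.45, 0.60]


def rates_at(threshold):
    """Flag every item scoring >= threshold; return (sensitivity, specificity)."""
    flagged_misinfo = sum(s >= threshold for s in misinfo_scores)
    passed_accurate = sum(s < threshold for s in accurate_scores)
    return (
        flagged_misinfo / len(misinfo_scores),
        passed_accurate / len(accurate_scores),
    )


# A low threshold is an aggressive flagger; a high threshold is a cautious one.
results = {t: rates_at(t) for t in (0.25, 0.50, 0.75)}
for t, (sens, spec) in results.items():
    print(f"threshold={t:.2f}  sensitivity={sens:.1f}  specificity={spec:.1f}")
# prints:
# threshold=0.25  sensitivity=1.0  specificity=0.4
# threshold=0.50  sensitivity=0.8  specificity=0.8
# threshold=0.75  sensitivity=0.4  specificity=1.0
```

With these invented numbers, the aggressive setting catches every false item but wrongly flags most accurate ones, the cautious setting does the reverse, and the middle setting balances the two.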

Furthermore, the signal-detection framework acknowledges the crucial role of individual differences in susceptibility to misinformation. Factors such as cognitive biases, prior beliefs, and emotional state can influence how individuals perceive and evaluate information. People holding strong pre-existing beliefs, for instance, might be more likely to accept information aligning with their views, even if it lacks credibility, a phenomenon known as confirmation bias. Similarly, emotionally charged content can circumvent rational evaluation and increase the likelihood of sharing misinformation. The framework recognizes that interventions must consider these individual differences to be truly effective. Tailoring interventions to specific audiences and addressing underlying cognitive biases can significantly enhance their impact and reduce the overall spread of misinformation.

Moving forward, the signal-detection framework offers a roadmap for future research and for the development of more effective interventions. It emphasizes the need for rigorous empirical evaluation of existing and emerging strategies, focusing on their impact on both sensitivity and specificity. Researchers should prioritize interventions that identify and counter misinformation while minimizing unintended consequences such as the suppression of legitimate speech and the erosion of trust. Future research should also examine more closely the individual and social factors that influence susceptibility to misinformation, paving the way for tailored interventions that address the root causes of the problem. With this approach, we can move closer to an informed and resilient information ecosystem, one capable of mitigating the harmful effects of misinformation and safeguarding the integrity of public discourse.