Bishop T.D. Jakes Battles AI-Fueled Misinformation Campaign on YouTube

Prominent faith leader Bishop T.D. Jakes is embroiled in a legal battle involving Google, the parent company of YouTube, over the proliferation of AI-generated videos spreading false and damaging information about him. These videos, which often depict fabricated scenarios of his arrest or involvement in scandalous activities, have amassed millions of views by exploiting the public’s interest in celebrity controversies. Jakes’ legal team alleges that YouTube has been negligent in enforcing its own policies against such content, and says that failure prompted the legal action.

The escalating use of artificial intelligence in creating online content has raised serious concerns about the potential for manipulating public perception and disseminating false narratives. While AI technology offers exciting possibilities for creative expression and information sharing, its misuse in generating fabricated videos poses a significant threat to individuals’ reputations and the integrity of online information. Bishop Jakes’ case highlights the urgent need for platforms like YouTube to implement robust measures to combat the spread of AI-generated misinformation.

The lawsuit, filed in the Northern District of California, seeks to compel Google to reveal the identities of the individuals behind the accounts posting the defamatory videos. These accounts are reportedly based in various countries including South Africa, Pakistan, the Philippines, and Kenya, underscoring the global reach of this malicious campaign. The legal action aims to hold these individuals accountable for their actions and to deter others from engaging in similar practices. The court filing emphasizes the use of AI-generated content in creating these videos, adding a new dimension to the challenges posed by misinformation in the digital age.

This incident coincides with increased public attention on the misuse of AI technology to generate misleading content. YouTube itself has recently launched initiatives encouraging users to adopt AI tools, a move that has inadvertently contributed to the problem. The potential for financial gain further incentivizes creators to produce such videos, because they can monetize the views that sensationalized content attracts. The result is a vicious cycle in which financially motivated creators exploit public curiosity and platform algorithms to maximize their reach and profits.

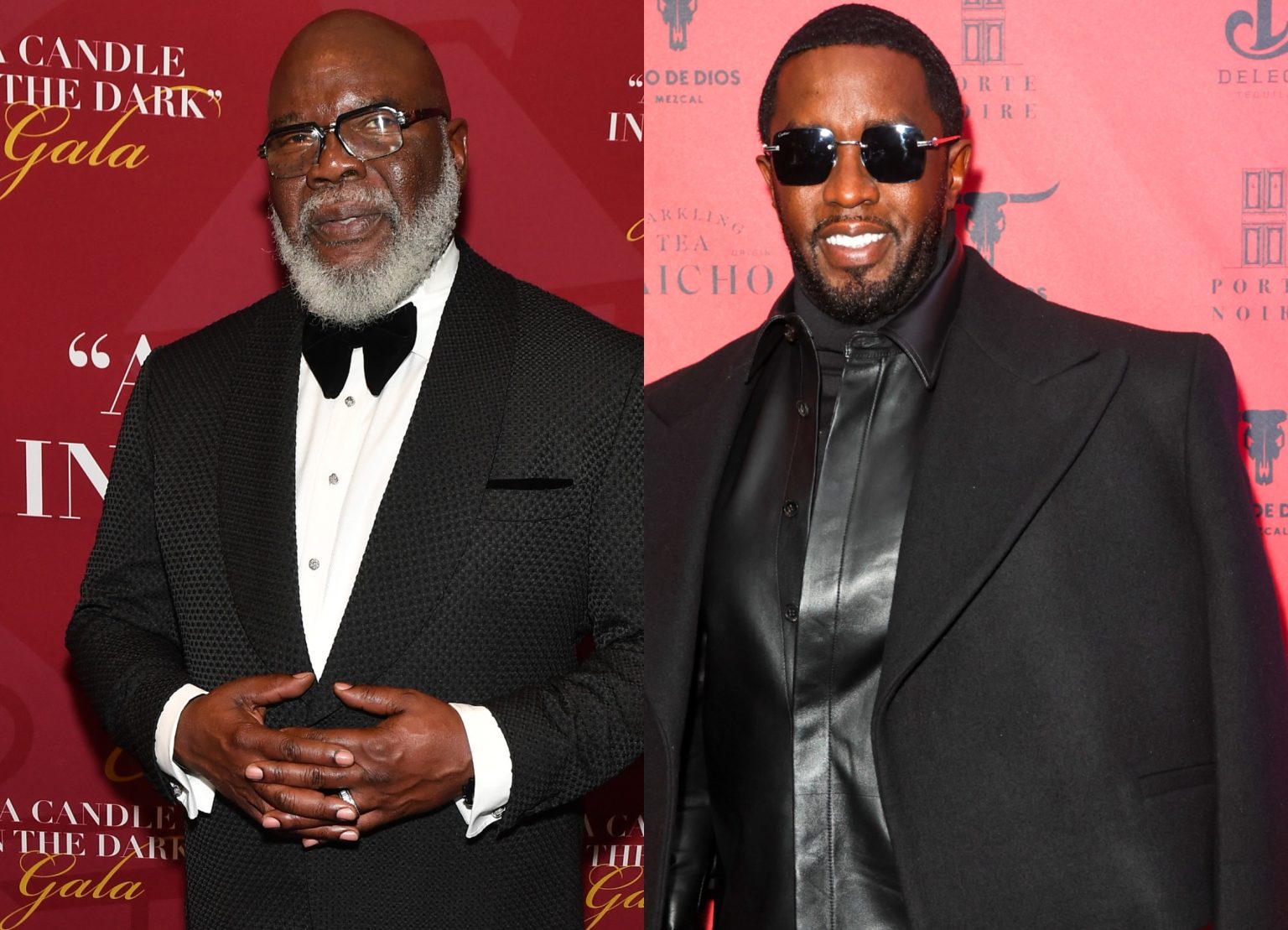

The lawsuit underscores the growing concern surrounding AI-generated misinformation and the challenges platforms face in moderating such content. It also highlights the vulnerability of public figures to online attacks, particularly in the age of viral misinformation. Bishop Jakes’ situation is not isolated: other prominent Black figures, including Steve Harvey and Denzel Washington, have been targeted by similar AI-generated videos containing false and damaging accusations, again built around fabricated arrests or scandals.

The lawsuit brought by Bishop Jakes’ legal team represents a critical step in addressing AI-generated misinformation on online platforms. It points to the need for stronger platform accountability and more effective mechanisms to identify and remove such content, and its outcome could have significant implications for the future of online content moderation.

The case is also a stark reminder that AI technology can be weaponized for malicious purposes, and that safeguards are urgently needed to protect individuals and the integrity of online information. It highlights the complex interplay between technological advancement, freedom of expression, and the responsibility of online platforms to curb the spread of harmful content. As AI technology continues to evolve, combating misinformation will only become more complex, demanding innovative solutions and a collective effort to protect the online ecosystem from manipulation and abuse.