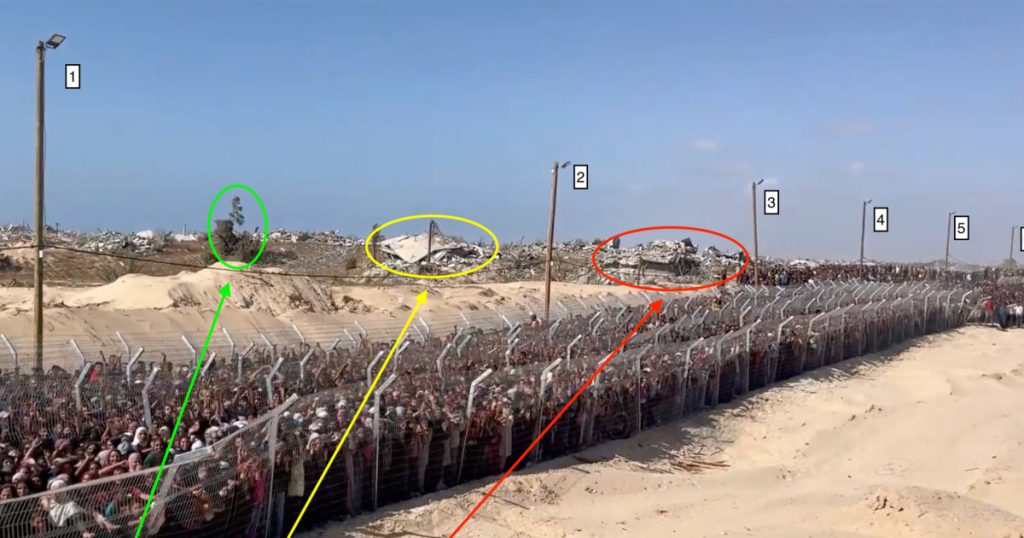

The video in question, filmed in southern Gaza and widely circulating on social media, has sparked a heated online debate about its origin. The video, which began circulating early Tuesday, shows a man dressed in camouflage and a baseball cap making a heart sign and a “shaka” sign with his hands. Behind him is a large crowd of Palestinians, some of them waiting for food aid at the Tal as Sultan distribution site in Rafah.

A joint analysis by NBC News and Get Real Security, a company that specializes in detecting generative AI, found no evidence that AI was used to create the video. NBC News geolocated the video to the Tal as Sultan distribution site, which was built by Israel’s civilian policy unit, the Coordinator of Government Activities in the Territories, in partnership with the Gaza Humanitarian Foundation (GHF). The GHF has stated that the video was originally distributed by its team but has not confirmed the identity of the person who recorded it.

The GHF also rejected claims that the video was created using AI, stating, “Any claim that our documentation is fake or generated by AI is false and irresponsible.” The video has been widely reshared, fueling intense arguments about its authenticity, and some social media users have nonetheless described it as “AI generated,” dismissing it as fabricated.

Posts on Twitter and other social media platforms have offered context on the video’s creation. Analysts examined the landmarks and camera movements in the clip, the kinds of details commonly used to identify AI-generated videos.

Hany Farid, a co-founder of Get Real Security and a professor at the University of California, Berkeley, said the man’s movements were consistent throughout the clip. Farid pointed to specific features of the video, including a crisp “Ray-Ban” logo and particular camera movements and tones. However, Farid emphasized that without credible, peer-reviewed evidence, interpretations of the “shaka” and heart-sign gestures could be misleading.

Jewish media outlets have described the video as “crazy,” calling the man’s actions of ambiguous authenticity. Farid further pointed to specific products visible in the video, including a pair of Oakley S.I. gloves of the kind U.S. contractors have been seen wearing in Gaza.

Farid acknowledged that AI can now create engaging, vivid videos and that AI-generated content does circulate online, but said that in the context of online debates, the way this video was framed hinted at political agendas. Farid explained that skeptical observers, including some in Israel, have called the video “weird and nonsense.”

A flag appeared consistently in front of the crowd throughout the video. A reverse image search, along with review by professional video editors, highlighted the natural, unorthodox detail in the foreground and background, which is exactly what AI-generated videos often lack.

The GHF warned that the video could serve as a “bad seed” for rapidly spreading misinformation. Some sources suggest the video could be used to mock Israel’s role in the conflict without adequate ground-truthing, placing it in a moral gray area.

Tavleen Tarrant of the Social Newsgathering team contributed from New York. Mar Boeing contributed research from the Han Susp Reporting project, assessing the video content on Twitter. The GHF itself has called the video an isolated incident without real consequence.

In conclusion, while the video has been widely shared online, its authenticity remains in question. Scientists and ethicists, while acknowledging the possibility of AI manipulation, advise caution and urge sources to vet content before using it to build a narrative. The video serves as a reminder that words and symbols alone, even when not produced by AI, can be misleading, and that clear context is essential in any narrative.