The Rise of Deepfake Pornography: A Disturbing Trend Targeting Young People and Fueling Legal Battles

The digital age has ushered in unprecedented technological advances, but it has also spawned new forms of abuse, one of the most insidious being deepfake pornography. The creation of non-consensual, sexually explicit images using artificial intelligence (AI) is a growing problem. It has left victims like 15-year-old Elliston Berry traumatized and has prompted legal action across the country. Elliston’s experience shows how devastating this technology can be. After a classmate circulated an AI-generated nude image of her throughout her school, she endured months of anxiety and embarrassment that disrupted her life. Unfortunately, her case is not an isolated one. User-friendly AI programs, combined with the speed at which images spread on social media, create a perfect storm for this type of abuse to flourish.

The alarming growth of deepfake pornography is reflected in stark statistics. Last year saw a staggering 460% increase in deepfake videos online, bringing the total to more than 21,000. Websites openly promote tools for creating fake nudes, preying on people’s insecurities and fueling a market for non-consensual pornography. Because ordinary photos can so easily be turned into explicit content, anyone can become a target. The line between reality and fabrication blurs, and victims suffer deep emotional distress. The scale of the problem has triggered a multi-pronged response from law enforcement, legislators, and concerned parents.

In San Francisco, the City Attorney’s office has taken a decisive stand against this emerging threat. Its lawsuit against the owners of 16 websites that generate deepfake nudes marks a significant step toward holding these platforms accountable. City Attorney David Chiu emphasizes that the case is not about the technology itself but about the very real sexual abuse these sites facilitate. The 16 websites drew an estimated 200 million visits in just six months, and Chiu acknowledges that the lawsuit is only the beginning of a larger effort to combat this form of online abuse and protect vulnerable people. Curbing the proliferation of such sites will require a comprehensive approach.

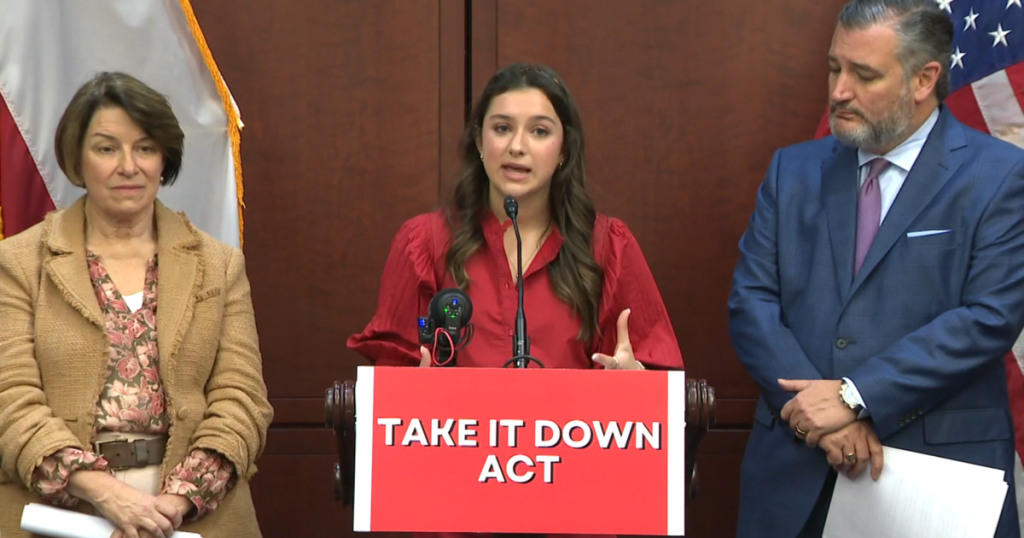

On the national level, bipartisan legislation is gaining traction. The Take It Down Act, spearheaded by Senators Ted Cruz and Amy Klobuchar, aims to compel social media companies and websites to swiftly remove non-consensual, AI-generated pornography. The bill recognizes that tech platforms bear responsibility for actively combating the spread of harmful material. By placing a legal obligation on these platforms, the act seeks to empower victims and stop damaging images from spreading further. The bill’s passage in the Senate, and its inclusion in a larger funding bill awaiting House approval, signal growing recognition that the issue demands urgent action.

Social media platforms, while acknowledging the gravity of the situation, struggle to monitor and remove the vast amount of content uploaded every day. Snapchat, in a statement to CBS News, emphasized its zero-tolerance policy for such content and highlighted its mechanisms for reporting violations. However, the sheer volume of content and the speed at which it spreads often outpace the platforms’ ability to react, leaving victims feeling unheard and unprotected. Platforms must also balance protecting users against upholding free speech and avoiding censorship, a complex and ongoing debate. Developing more robust, proactive ways to identify and remove this content remains a crucial priority for these companies.

The battle against deepfake pornography is multifaceted, requiring a concerted effort from legislators, law enforcement, tech companies, and individuals. Victims like Elliston Berry are bravely sharing their stories to raise awareness and advocate for stronger protections. Elliston’s focus on sparing others a similar experience underscores the importance of education: teaching young people about the dangers of online platforms and empowering them to report abuse are crucial steps against this form of harassment. As technology evolves, so too must the strategies for countering its misuse. The fight against deepfake pornography is a race against time, one that demands vigilance, innovation, and a commitment to protecting the dignity and safety of everyone online.