Actor’s AI Avatar Exploited for Venezuelan Propaganda: A Case Study in the Urgent Need for AI Regulation

The rapid advancement of artificial intelligence (AI) has opened up exciting new frontiers in the entertainment industry, offering innovative tools for content creation and performance. However, this technological leap has outpaced the development of adequate legal frameworks, leaving performers vulnerable to exploitation and misuse of their digital likenesses. The story of Dan Dewhirst, an Equity member and experienced actor, serves as a stark illustration of the potential pitfalls of unregulated AI in the entertainment world and highlights the urgent need for protective legislation.

Dan’s journey into the world of AI began with a seemingly promising opportunity. In 2021, he was offered a contract to become a "performance avatar" powered by AI. While intrigued by the cutting-edge technology, Dan also harbored concerns about the lack of safeguards and the broad scope of the contract’s clauses. His apprehension stemmed from the absence of limits on how his avatar could be used, particularly in potentially harmful or misleading ways; such limits are typically included in contracts for stock video and photography work. Despite assurances from his agent and Synthesia, the company behind the avatar project, that his digital likeness would not be used for illegal or unsavory purposes, the contract itself lacked these crucial protections.

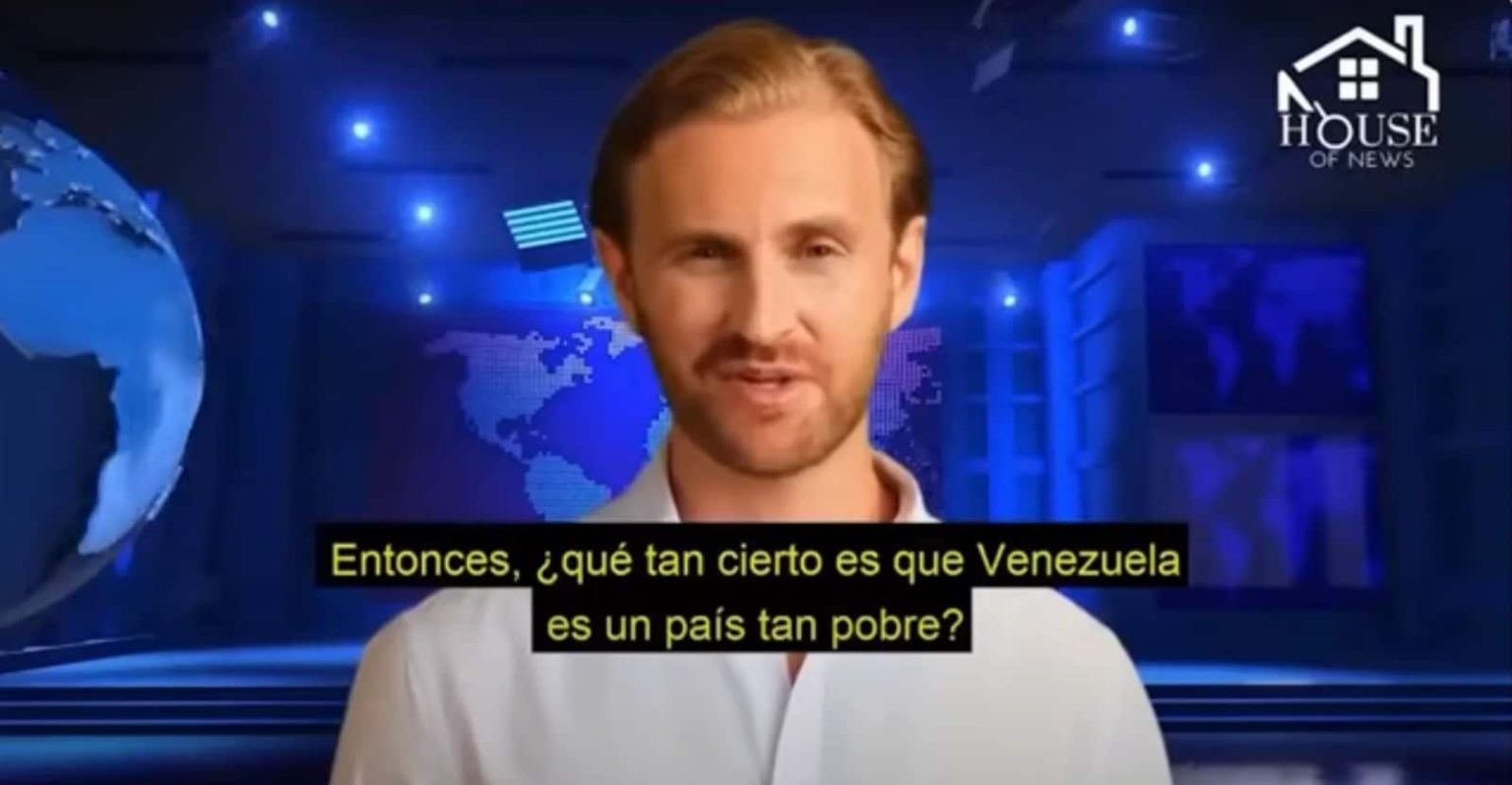

Driven by financial pressures in the post-pandemic entertainment landscape, Dan proceeded with the project, albeit with lingering unease. The creation of his avatar involved a meticulous process of capturing his facial expressions, mannerisms, and voice, effectively building a digital replica of his performance capabilities. Initially, Dan’s avatar was used for relatively innocuous corporate presentations and B2B content. However, the situation took a dramatic turn when, in April 2023, a friend alerted Dan to a CNN report exposing his avatar’s involvement in a Venezuelan propaganda campaign. The report revealed that Dan’s digital likeness was being used as a news anchor, disseminating misinformation and bolstering the narrative of the Venezuelan government.

The revelation had a devastating impact on Dan, who felt betrayed and violated by the misuse of his image. His worst fears had materialized: his avatar had become the unwitting face of fake news. The implications extended beyond personal distress, potentially damaging his professional reputation and associating him with a political agenda he did not endorse. Dan’s case underscores the critical need for clear legal boundaries and contractual protections in the realm of AI-generated performances. His experience is a warning to other performers venturing into this evolving landscape about the risks of inadequate contractual safeguards.

In response to this alarming situation, Equity, the actors’ union, has launched the "Stop AI Stealing the Show" campaign, advocating for government intervention and industry cooperation to establish robust legal protections for performers in the age of AI. The union stresses the importance of recognizing performers’ rights over their digital likenesses, voices, and movements, which should not be exploited without explicit consent and appropriate compensation. Equity’s Assistant Secretary, John Barclay, points out that AI is not a futuristic concept but a present reality that affects performers’ livelihoods and requires immediate attention from lawmakers and industry stakeholders.

Dan’s experience is a crucial case study, demonstrating the potential for AI to be weaponized against performers, damaging their reputations and undermining their control over their own image. Equity’s campaign calls for a comprehensive legal framework that addresses the unique challenges posed by AI in the entertainment industry. Such a framework would establish clear ownership rights for performers’ digital likenesses, set limitations on the use of AI-generated performances, and provide mechanisms for redress in cases of misuse or misrepresentation.

The core of Equity’s argument rests on the fundamental principle that performers, like other workers, deserve basic employment and human rights protections, regardless of the technological medium. The union emphasizes that companies cannot have unchecked authority to use and manipulate performers’ likenesses without appropriate safeguards. Dan’s experience demonstrates that AI can be used for nefarious purposes, including the dissemination of propaganda and misinformation, and that urgent action is needed to protect performers and uphold ethical standards in AI-driven entertainment. The future of AI in the entertainment industry hinges on a legal framework that balances technological innovation with the rights and protections of performers, ensuring that their contributions are valued and safeguarded.