The Looming Shadow of AI: Cyber Threats and Disinformation Surge in South Korea

South Korea finds itself grappling with a burgeoning threat landscape in which artificial intelligence (AI), a technology that promises progress, is being weaponized for malicious purposes. Cyberattacks leveraging AI’s capabilities are on the rise, presenting new challenges to national security, economic stability, and societal trust. From sophisticated phishing campaigns to the automated spread of disinformation, the misuse of AI is amplifying existing threats and creating entirely new vulnerabilities within the digital sphere. This rapid evolution of cyber threats requires immediate attention and a comprehensive, multi-pronged approach to safeguarding South Korea against the potentially devastating consequences.

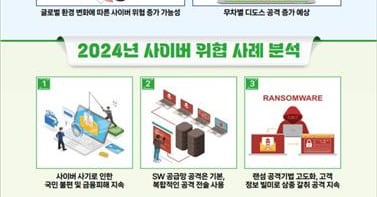

One of the most pressing concerns is the increasing sophistication of AI-powered cyberattacks. Traditional methods of intrusion detection and prevention struggle to keep pace with the adaptive nature of these attacks. AI algorithms can analyze vast amounts of data to identify system vulnerabilities, craft highly personalized phishing lures, and even autonomously develop new malware strains. This level of automation and adaptability renders conventional security measures less effective, necessitating a paradigm shift towards AI-driven defense mechanisms. South Korea must invest heavily in developing its own AI-powered security tools to counter these evolving threats and strengthen its cyber resilience. Moreover, fostering collaboration with international partners and sharing threat intelligence will be crucial in staying ahead of malicious actors leveraging AI for nefarious purposes.

Adding to the complexity of the situation is the escalating risk of AI-generated disinformation, a more potent form of what is commonly called "fake news." The ability of AI to create highly realistic deepfakes – manipulated videos and audio recordings – poses a significant threat to public trust and can be exploited to manipulate public opinion, spread propaganda, and incite social unrest. Furthermore, AI-powered bots can automate the dissemination of false information across social media platforms, amplifying its reach and making it increasingly difficult for citizens to distinguish truth from fabrication. This erosion of trust in information sources can have severe implications for democratic processes and social cohesion. Combating this "infodemic" requires a multi-faceted strategy encompassing media literacy initiatives, advanced detection technologies for deepfakes and other manipulated media, and potentially legislative frameworks to regulate the malicious use of AI-generated content.

The implications of these emerging cyber threats extend far beyond the digital realm, impacting critical infrastructure, financial institutions, and even political processes. AI-powered attacks can target essential services, such as power grids and healthcare systems, causing widespread disruption and potentially jeopardizing public safety. The financial sector is particularly vulnerable to AI-driven fraud and manipulation, with sophisticated algorithms capable of circumventing traditional security measures and exploiting market vulnerabilities. Moreover, the potential for AI to be used to interfere in elections through the spread of disinformation and the manipulation of voter sentiment poses a serious threat to democratic integrity. Addressing these multifaceted risks requires a comprehensive approach involving both technological advancements and policy reforms.

South Korea must prioritize the development of a national AI security strategy that encompasses robust cyber defenses, proactive measures against disinformation, and a legal framework that addresses the ethical and security implications of AI technology. This strategy should involve collaboration between government agencies, private sector companies, research institutions, and international partners. Investing in advanced AI research and development, specifically focused on security applications, is essential to staying ahead of evolving threats. Furthermore, promoting public awareness about the risks of AI misuse and empowering citizens with the tools to identify and combat disinformation will be crucial in building a resilient society. Establishing clear ethical guidelines and regulations for AI development and deployment is equally important to ensuring that this powerful technology is used responsibly and for the benefit of society.

The increasing convergence of cyber threats and disinformation campaigns powered by AI presents a profound challenge for South Korea. Addressing this complex threat landscape requires a holistic approach encompassing technological advancements, policy reforms, international cooperation, and public awareness campaigns. By prioritizing cybersecurity and investing in the development of AI-driven defense mechanisms, South Korea can mitigate the risks posed by these emerging threats and protect the safety, security, and prosperity of its citizens in the digital age. This proactive and multi-faceted approach is not merely a matter of technological preparedness but a necessity for safeguarding the nation’s democratic values, economic stability, and societal well-being in an increasingly interconnected and AI-driven world. The challenge is substantial, but by acting decisively and collaboratively, South Korea can navigate this new era while harnessing the transformative power of AI for good.