The Rise of AI-Generated Misinformation: Exploiting Tragedy for Clicks

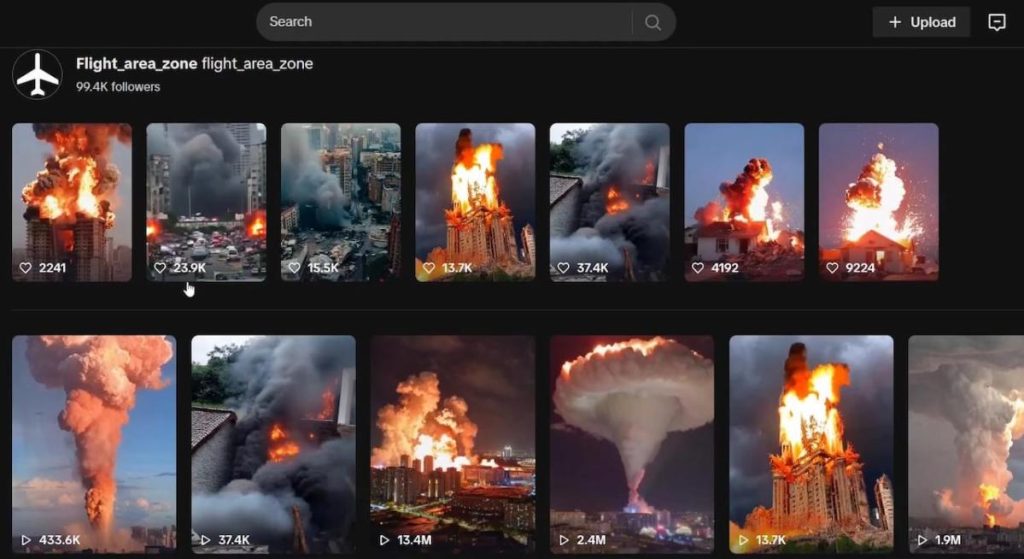

The line between reality and fabrication online has grown increasingly blurred with the rise of sophisticated AI tools capable of generating realistic yet entirely synthetic content. A recent case involving a TikTok account, flight_area_zone, exemplifies this trend. The account, now deactivated, hosted numerous AI-generated videos depicting explosions and burning cityscapes, amassing millions of views. The fabricated scenes carried none of the disclaimers that TikTok’s guidelines require, and they were subsequently shared across other social media platforms with false claims that they showed actual footage from the war in Ukraine. The incident underscores the growing threat posed by low-quality AI-generated content, often dubbed "AI slop," and its potential to manipulate public perception of real-world events.

AI Slop: A New Frontier in Misinformation

AI slop, a term for low-quality, sensationalized, or sentimental AI-generated content, has become pervasive online. Designed to maximize engagement and clicks, it often exploits trending topics and tragic events, as the flight_area_zone videos did. Research indicates that AI-generated misinformation is rapidly gaining traction, rivaling the spread of traditionally manipulated media. This trend highlights how easily AI can be weaponized to distort narratives and mislead audiences, and the problem is compounded when consumers of online content do not evaluate it critically.

Exploiting the Information Vacuum: The Case of the Beirut Explosion Video

The flight_area_zone incident is not an isolated case. In a similar instance, a video depicting a fiery explosion in Beirut was falsely attributed to an Israeli strike on Lebanon. The AI-generated footage spread rapidly across social media and was even shared by prominent accounts, further blurring the line between authentic news and fabricated content. In some instances, the fake video was interspersed with actual footage of fires in Beirut, creating a confusing and misleading narrative for viewers. This incident highlights the danger of AI-generated misinformation polluting the information ecosystem, particularly during times of crisis or conflict.

Profiting from Deception: Monetization of AI Slop

The proliferation of AI slop is not driven solely by malicious intent; it can also be a lucrative enterprise. The now-deleted flight_area_zone account, like many others, offered a subscription service that let users pay for exclusive badges and stickers. This shows how creators can monetize the engagement generated by fabricated content. The financial incentive further fuels the production and dissemination of AI slop, creating a feedback loop that rewards misleading and sensationalized content.

Identifying the Fakes: Telltale Signs of AI-Generated Content

Despite the increasing sophistication of AI-generated visuals, telltale signs can often reveal their artificial origins. In the flight_area_zone videos, distorted elements such as warped cars, sped-up movements, and oversized vehicles betrayed their synthetic nature. Repetitive audio across multiple videos also indicated artificial generation. However, these imperfections often go unnoticed by viewers who may not possess the skills or inclination to critically examine online content. This underscores the need for increased media literacy and critical thinking skills to navigate the increasingly complex online information landscape.

Combating the Deluge: Shared Responsibility and Platform Accountability

Addressing the growing threat of AI-generated misinformation requires a multi-pronged approach. Social media platforms bear the responsibility of implementing stricter content moderation policies, including mandatory labeling of AI-generated content and swift removal of misinformation. Tools such as digital watermarking could help identify and track AI-generated content. However, platform moderation alone is insufficient. Users must also cultivate critical thinking skills and exercise caution when consuming online content. Educational initiatives promoting media literacy are crucial in empowering individuals to discern fact from fiction in the digital age. This shared responsibility between platforms and users is essential to curb the spread of AI-generated misinformation and maintain a healthy information ecosystem.