PRISA Media Develops VerificAudio to Combat Audio Deepfakes in Spanish

Generative AI has driven rapid advances in many fields, but it has also opened the door to new forms of misinformation, particularly deepfakes. Audio deepfakes, created with sophisticated voice cloning technologies, pose a significant threat to the integrity of news and public discourse. PRISA Media, the world’s largest Spanish-language audio producer, recognized this emerging challenge and set out to build a tool that would help journalists detect and verify audio deepfakes. The result is VerificAudio, an experimental tool designed to bolster trust in news and combat the spread of misinformation in the Spanish-speaking world.

The impetus for VerificAudio came from PRISA Media’s early experiments with synthetic voices, which revealed how quickly voice cloning technologies were advancing and becoming accessible. These technologies, increasingly available as open-source software and online services, let anyone create a convincing replica of a voice in minutes. This ease of access has led to a proliferation of fake audio clips on social media and messaging platforms, which have shifted from satirical jokes to potentially damaging misinformation campaigns. Recognizing the potential for misuse during elections and other critical events, PRISA Media set out to develop a solution quickly.

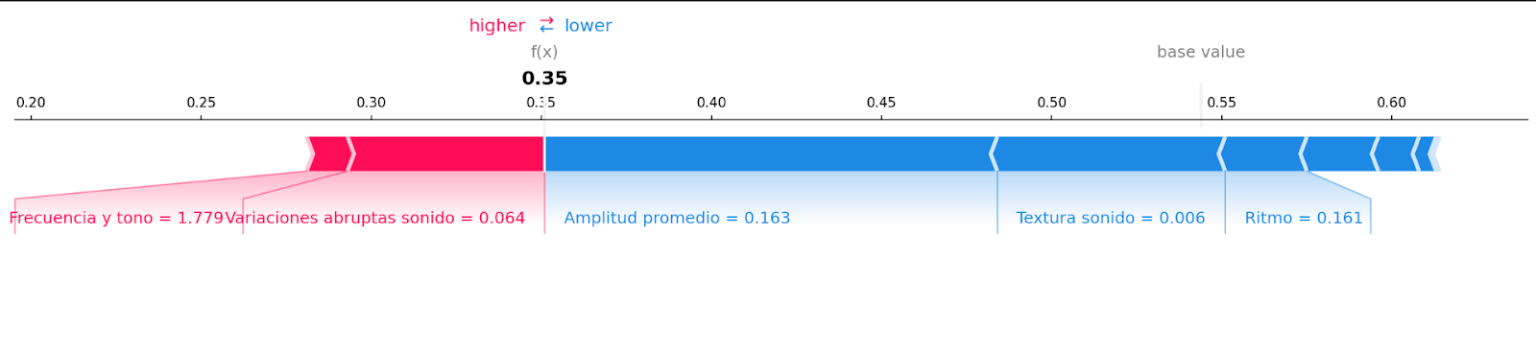

Supported by the Google News Initiative, PRISA Media collaborated with Minsait, a Spanish tech company, and its AI unit, Plaiground, to develop VerificAudio. The project focused on creating an audio fact-checking platform that uses natural language processing (NLP) and deep learning to evaluate audio manipulations.

A crucial first step was preparing and normalizing the audio files to improve data quality and reduce unwanted variability that could hinder model training. This step involved noise reduction, format conversion, volume equalization, and temporal cropping.

After data preparation, the team employed two complementary approaches: neural networks and a machine learning model based on audio features. The machine learning model analyzed features such as pitch and intonation to identify potential manipulations, and explainability graphs were incorporated to provide insight into its decisions. The neural network approach, adapted for Spanish, compared audio files to determine whether they came from the same speaker, different speakers, or a synthetic process. By combining the two models, VerificAudio achieved higher accuracy and fewer false results. An online interface was developed to simplify use by non-technical staff.
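To make the data preparation step more concrete, the following is a minimal sketch of two of the operations named above, volume equalization and temporal cropping, applied to a list of audio samples in the range -1.0 to 1.0. It is not VerificAudio's actual pipeline, which has not been published in detail. The function names, the peak-based normalization, the interpretation of cropping as silence trimming, and the default values are all assumptions made for illustration.

```python
def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one has magnitude target_peak.

    Peak normalization is an assumed stand-in for "volume equalization".
    """
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # empty or silent clip: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def trim_silence(samples, threshold=0.01):
    """Drop leading and trailing samples quieter than threshold.

    Silence trimming is an assumed interpretation of "temporal cropping".
    """
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []  # the whole clip is below the threshold
    return list(samples[loud[0]:loud[-1] + 1])


def prepare(samples, target_peak=0.9, threshold=0.01):
    """Trim silence first, then bring the clip to a common peak level."""
    return peak_normalize(trim_silence(samples, threshold), target_peak)
```

Calling `prepare` on every clip before training gives the models inputs with a comparable loudness and no silent padding, which is the kind of unwanted variability the preparation step is meant to reduce.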

One of the major challenges during development was the scarcity of deepfake audio samples in Spanish. To overcome this, the team generated its own dataset, using various voice cloning technologies and imitating actual fake clips found online. Caracol Radio, PRISA Media’s radio unit in Colombia, played a crucial role in this process, contributing audio samples from its archives and generating new ones. The project is ongoing and demands continuous refinement of the models to keep pace with evolving cloning technologies and new methods of creating fake audio. The team also addressed the “black box” problem of AI through the machine learning model’s explainability feature, which provides greater transparency into the system’s decision-making process.

Validation of VerificAudio combined automated testing with human judgment. A new dataset of real and fake audio clips was compiled to evaluate both models. This approach not only identified inaccuracies but also provided valuable feedback for retraining and refining the models, improving the user interface, and developing a probability-based way of presenting results. Because definitive verdicts are difficult to provide, VerificAudio reports a percentage indicating the likelihood that an audio clip is real or fake, which supports journalists in their fact-checking.
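As an illustration of how two model outputs might be merged into a single percentage, the sketch below takes a weighted average of two fake-probabilities and formats the result for a journalist. The article does not say how VerificAudio actually combines its models, so the averaging rule, the function names, and the default equal weighting are hypothetical.

```python
def combine_scores(p_fake_features, p_fake_neural, weight_features=0.5):
    """Weighted average of two fake-probabilities, each in [0, 1].

    The weighting scheme is an assumption, not VerificAudio's method.
    """
    for value in (p_fake_features, p_fake_neural, weight_features):
        if not 0.0 <= value <= 1.0:
            raise ValueError("scores and weight must be between 0 and 1")
    return (weight_features * p_fake_features
            + (1.0 - weight_features) * p_fake_neural)


def format_result(p_fake):
    """Express a fake-probability as a percentage rather than a verdict."""
    percent_fake = round(p_fake * 100)
    if percent_fake >= 50:
        return f"{percent_fake}% likely fake"
    return f"{100 - percent_fake}% likely real"
```

For example, `format_result(combine_scores(0.8, 0.6))` yields `"70% likely fake"`. Reporting a likelihood instead of a yes-or-no answer leaves the final judgment with the verification team.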

VerificAudio is now available to verification teams across PRISA Media’s radio stations in Spain, Colombia, Mexico, and Chile. The tool is integrated into established fact-checking protocols, in which journalists submit suspicious audio files for analysis. The verification team then evaluates the file’s origin, distribution channels, news context, and other relevant factors alongside the AI analysis provided by VerificAudio. PRISA Media is creating two repositories: one containing verified audio files of well-known figures to speed up comparisons, and another containing fake audio files to continuously train and improve the models. VerificAudio’s interface offers two verification modes: comparative mode, which determines whether audio files belong to the same speaker, different speakers, or a cloned voice, and identification mode, which assesses the likelihood that a clip is real or synthetic.
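One common way to build a comparison like the comparative mode is to turn each clip into a numeric speaker embedding and measure how close two embeddings are. The sketch below assumes that design: it takes two embeddings that some hypothetical speaker model has already produced, plus a hypothetical synthetic-voice probability, and returns one of the three outcomes. Nothing here is confirmed as VerificAudio's implementation, and the thresholds are placeholder values.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def compare(reference, suspect, p_synthetic,
            same_threshold=0.75, synthetic_threshold=0.5):
    """Three-way decision; both thresholds are hypothetical defaults."""
    # A clip flagged as synthetic is reported as cloned even if the
    # voice closely matches the reference speaker.
    if p_synthetic >= synthetic_threshold:
        return "cloned voice"
    if cosine_similarity(reference, suspect) >= same_threshold:
        return "same speaker"
    return "different speakers"
```

In this sketch the reference embedding would come from a verified recording, which is where a repository of authenticated clips of well-known figures would save time.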

The development of VerificAudio is ongoing, with continuous improvements planned as new technologies emerge. PRISA Media aims to broaden the dataset to cover a range of Spanish accents and potentially to extend the tool to other languages spoken in Spain. The company is also developing an online platform to provide broader access to the tool and to offer the public educational resources about deepfakes. Although VerificAudio is currently an internal resource, PRISA Media envisions the platform playing a significant role in audio deepfake verification, with the potential for future access by other media organizations.