The Shadow of Misinformation: Social Media’s Role in a Polarized World

The proliferation of misinformation online, amplified by the architecture of social media platforms, has emerged as a significant concern in recent years. Studies, polls, and expert analyses paint a complex picture of how these platforms spread false and misleading information. They also show how that information can shape public discourse, erode trust in institutions, and possibly contribute to political polarization. The scale and impact of this phenomenon are still debated. Even so, the available evidence points to a pressing need to understand and address the challenges that misinformation poses in the digital age.

A key issue highlighted by researchers is the inherent virality of misinformation. Studies suggest that false information spreads faster and farther than factual information on social media. Researchers attribute this to several factors:

- platform algorithms designed to maximize engagement;
- the emotionally charged nature of much misinformation;
- users' tendency to share content that confirms what they already believe.

Together, these dynamics can produce "echo chambers," in which misinformation is reinforced and corrective information struggles to gain traction. Manipulated content such as "deepfakes" adds a further complication, because it is easy to create and spread and makes it even harder to tell truth from falsehood online.

The narrative of social media as the primary driver of societal division is widespread, but recent research offers a more nuanced perspective. Platforms undoubtedly help spread misinformation. However, their influence on individual beliefs and political behavior may be less direct and less potent than is often assumed. Studies indicate that, for most people, problematic content makes up only a small fraction of overall news consumption. The effects of such exposure also appear to be short-lived, so any lasting impact seems to require repeated exposure. Taken together, these findings suggest that pre-existing attitudes and beliefs strongly shape how individuals process and react to what they encounter online. Affective polarization is the tendency to regard members of opposing political groups with hostility or distrust. It often precedes and predicts patterns of media consumption, rather than being solely a consequence of online exposure.

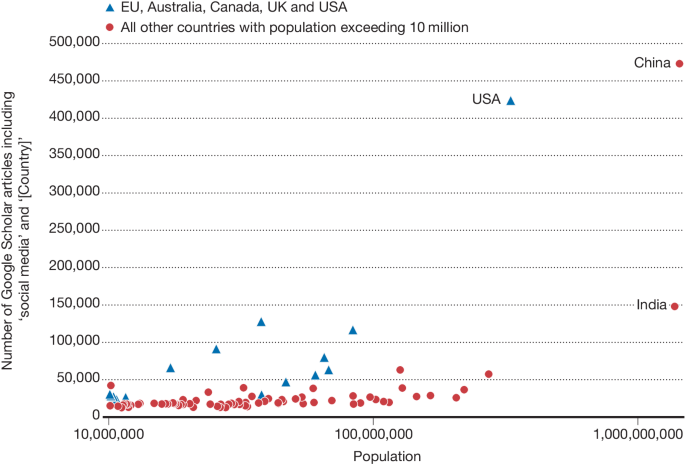

The global reach of social media makes misinformation even harder to combat. Platforms operate across diverse linguistic and cultural contexts, which makes effective content moderation a formidable task. Platforms seeking to identify and remove harmful material face limited resources and an enormous volume of new content every day. These difficulties are especially acute in the Global South. There, limited digital literacy and the prevalence of low-resource languages compound the problem, because such languages have relatively few digital tools and datasets to support moderation. Political and economic interests also often interact in ways that hinder efforts to regulate platforms. In some reported cases, for example, companies have prioritized business considerations over combating misinformation.

Despite these complexities, researchers are exploring various approaches to curbing the spread of misinformation. Strategies being tested include:

- fact-checking initiatives;
- media literacy programs;
- algorithmic adjustments that promote authoritative sources.

How effective these interventions are remains an open question. Some research suggests that fact-checking can correct specific false claims, but its broader effect on people's overall beliefs is less clear. Efforts to remove harmful actors or content from platforms have also shown mixed results, and some evidence suggests that such actions may simply push problematic activity onto other platforms.

Moving forward, addressing misinformation online will likely require a multifaceted approach with three main strands:

- closer collaboration among researchers, policymakers, and social media companies;
- stronger media literacy and critical thinking skills among users;
- more robust methods for identifying and countering false information.

Further research is also needed to clarify the complex interplay among individual psychology, platform design, and the societal factors that drive the spread and impact of misinformation. Only a comprehensive approach of this kind offers a realistic chance of limiting the harms of misinformation and fostering a more informed and resilient digital public sphere.