
As online polarization and misinformation grow, countering false information is becoming more urgent. Governments, organizations, and individuals can all contribute, but simple interventions can be just as effective as large-scale ones. The article argues that false information can be curbed upstream, at an earlier stage, by influencing internet users themselves.

First, individuals who value authenticity are more likely to avoid misrepresenting themselves online. This self-protective behavior can help limit reputational damage. However, avoiding falsehood oneself is not the same as eliminating false content. The study by Guriev et al. shows that short-term interventions, such as encouraging users to think about the consequences of sharing false information, can significantly reduce the spread of false content while increasing the share of true information.

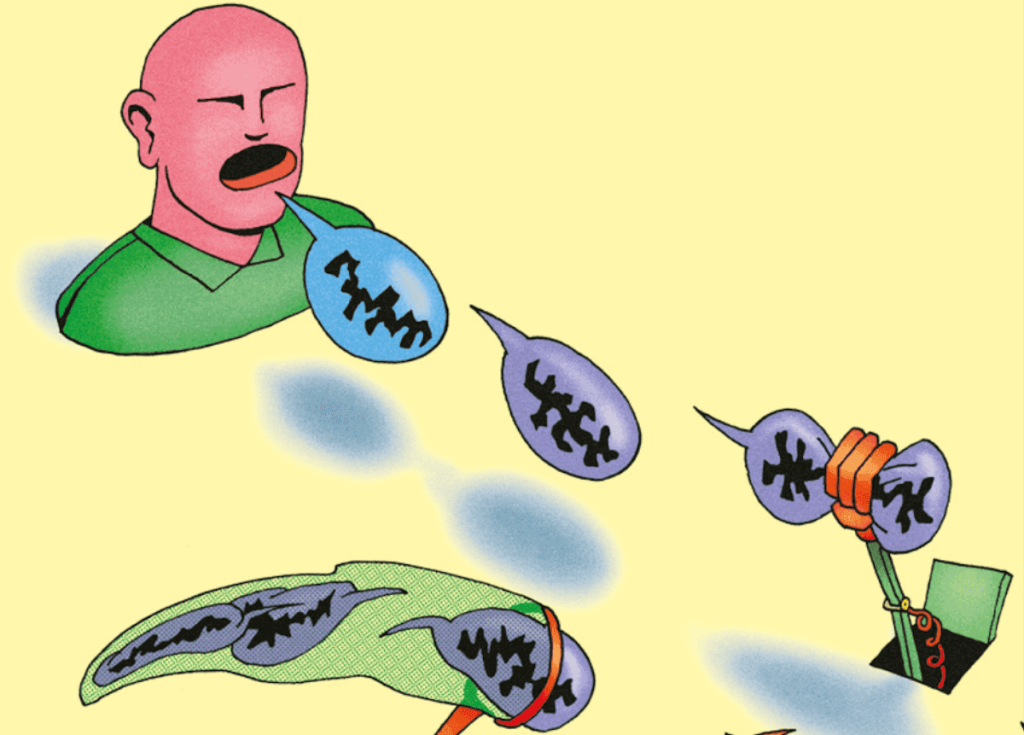

Second, acting as early as possible is an effective strategy. It can remove the need for more costly regulation and foster a culture of cautious sharing. The idea of a ‘nudge’, which brings the consequences of sharing false information to the front of users’ minds, gives many users a useful shortcut. Researchers have tested this concept and found that nudge messages, and even fact-checking, can dramatically reduce the sharing of false content.

An alternative approach is to influence users at the point of creation. This can be done through early, easy-to-implement measures such as requiring a confirmation click. Prompting users to make an informed decision earlier raises the cost of sharing, in line with Pennycook and Rand’s nudge messages. Encouraging users to think before sharing may also be more effective than sending nudge messages, as it grounds the ‘nudge’ in reality.

Another way to counter false information is to raise users’ awareness of accuracy, as fact-checking does. However, fact-checking is not always effective: it takes time to process, and users often skip it even when they want to share accurate information.

The study also provides insight into the mechanisms behind these interventions. Salience, the need to protect one’s reputation, and the cost of sharing are the key factors. Directing users to correct false information increases the likelihood that genuine content is shared. Additionally, early intervention reduces overall engagement, while fact-checking can boost the sharing of true content.

Efficiency also plays a role. Short-term interventions that encourage reflection on the veracity of information are effective and less costly, but algorithmic fact-checking can be more efficient and less intrusive. The two approaches need to be balanced, with short-term solutions complementing long-term policies.

Ultimately, the choice between short-term interventions and long-term preventive measures, such as digital literacy, comes down to balancing cost against effectiveness. In an era of growing polarization, early action can be a strong and effective tool for curbing false information online.