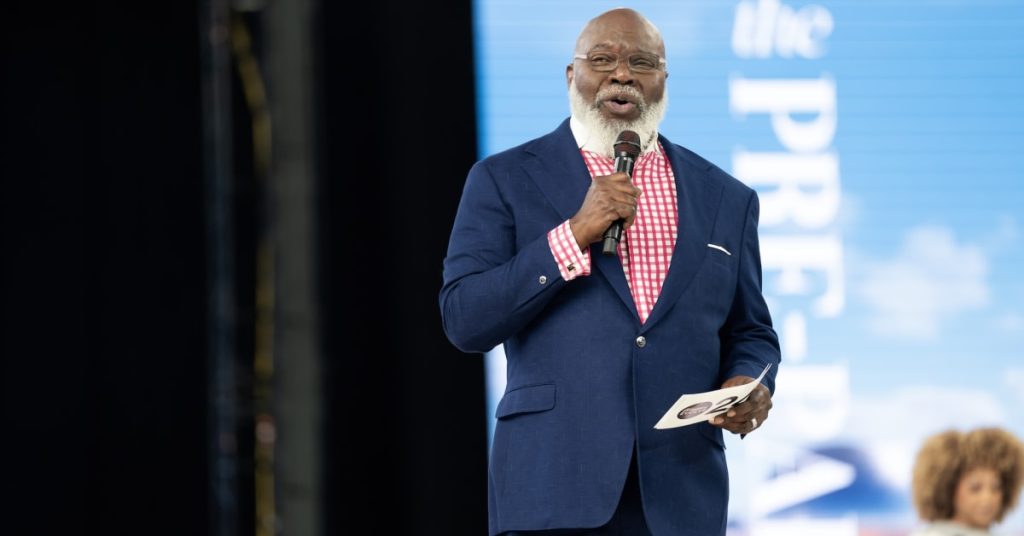

Bishop T.D. Jakes Seeks Legal Recourse Against YouTube Over AI-Generated Misinformation

Prominent religious leader Bishop T.D. Jakes has taken legal action against YouTube, seeking to unmask the people behind a wave of AI-generated videos that spread false and defamatory claims about him. The lawsuit, filed in the Northern District of California, targets YouTube’s parent company, Google, and asks that it disclose information identifying the creators of these videos. Jakes’ attorney, Dustin Pusch, argues that the videos, rife with fabricated images and voiceovers, exploit the bishop’s name and reputation for financial gain by capitalizing on public interest in celebrity scandals.

According to the legal motion, the misinformation campaign ties Bishop Jakes to the legal troubles of Sean "Diddy" Combs, falsely implicating him in the crimes and other misconduct attributed to Combs. The videos trade on the bishop’s prominence to attract viewers and generate revenue. The motion stresses the harm these accusations have done, saying they have significantly damaged Jakes’ reputation and standing, and characterizes the YouTubers’ conduct as a deliberate attempt to “attack, humiliate, degrade, and defame” the bishop through fabricated narratives.

The filing states that efforts over the past year to resolve the matter through YouTube’s legal counsel were unsuccessful, prompting Jakes to pursue legal action. The motion alleges that the video creators, based in countries including Kenya, the Philippines, Pakistan, and South Africa, are using AI tools to produce and distribute false information at scale. These tools let them create convincing but entirely fabricated content and monetize it through YouTube. The case highlights growing concern over the misuse of AI to generate and spread misinformation, which poses a serious threat to private individuals and public figures alike.

Bishop Jakes’ legal team contends that these YouTubers knowingly spread false information for profit, using sensational allegations to draw viewers and earn revenue for themselves and possibly for other foreign entities. The motion specifically describes the use of Jakes’ name and image as "clickbait" that exploits his prominence to lure unsuspecting users into watching the fabricated content. This tactic, the lawsuit argues, fuels the wide dissemination of false information and damages the reputation and integrity of people like Bishop Jakes.

The legal action seeks identifying information about the people behind the YouTube channels, including their email addresses and IP addresses. With that information, Jakes could pursue defamation lawsuits against the video creators and hold them accountable for the harm their content has caused. The outcome could have significant implications for how AI-generated content is regulated on online platforms and for broader efforts to combat misinformation.

This lawsuit comes on the heels of YouTube’s recent announcement that it is stepping up efforts against "egregious clickbait," a move that reflects growing awareness of the platform’s role in spreading misleading content. YouTube has said it will prioritize removing such content without issuing strikes, starting in India before expanding the policy to other countries. The change suggests a possible shift in the platform’s approach to content moderation, aimed at curbing misleading and harmful material.

Together, Bishop Jakes’ legal action and YouTube’s announced policy changes highlight the growing urgency of addressing AI-generated misinformation and the need for robust protections against online defamation. The case could set a precedent for holding online platforms and content creators accountable for spreading false and damaging information. It also underscores the need for legal frameworks and technological solutions to counter the escalating threat of AI-generated misinformation in the digital age.