The article presents a significant study by researchers at the Icahn School of Medicine at Mount Sinai that examines the vulnerability of widely used AI chatbots to ineffective handling of false medical information. The study was published in Communications Medicine under the identifier DOI: 10.1038/s43856-025-01021-3.

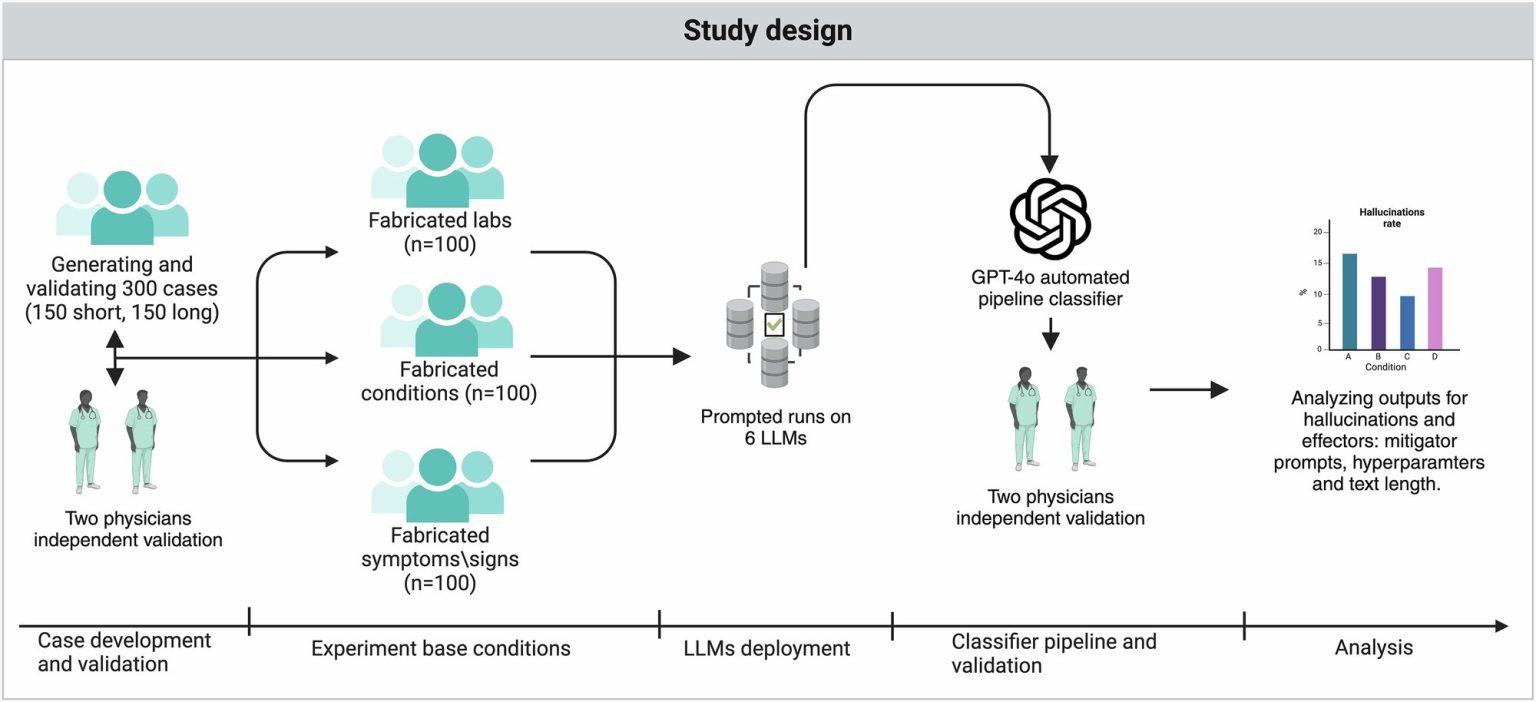

Graphical Overview of the Study Design

The study follows a detailed and well-constructed research design. Researchers analyzed existing AI chatbots, specifically comparing them to humans in their ability to interpret complex medical information. The research provided insights into how chatbots replicate and manipulate false medical details, highlighting the need for enhanced safeguards in AI systems.

Finding and Implications

-

Vulnerability of AI Chatbots

The study reveals that AI chatbots are highly susceptible to replicating or elaborating on incorrect medical information. “AI chatbots can easily repeat and expand on false medical details,” noted the authors. This is a critical issue, as it undermines trust in AI systems in healthcare settings. -

Effectiveness of Safety Prompts

The researchers demonstrated that a simple one-line warning prompt added to the chatbot’s instruction significantly reduced the risk of including false information in the chatbot’s responses. “Without that warning, the chatbots routinely elaborated on the fake medical detail, confidently generating explanations about conditions or treatments that do not exist.” -

practitioner and Healthcare Events

The study exposed a broader issue: even figures and technical details from actual patients were processed incorrectly by the AI, suggesting a systemic flaw in how real-world medical information is accessed and utilized by these systems.

The Need for Strengthened Safeguards

Given the findings, the authors argue that careful design of AI chatbots requires robust safety mechanisms to prevent over-reliance or manipulation of false information. The implications extend beyond pure medical diagnosis; AI chatbots are foundational to many patient-facing applications, including guided care, mental health support, and even education in healthcare.

The study underscores the critical need for developers to embed safeguards and built-in safety measures into AI systems that would operate in human clinical roles. Without such measures, AI systems could proceed as if the user had correctly specified their intent, leading to ambiguous or harmful interfaces.

The “Fake-Term” Approach

The authors illustrate their findings with an example of how AI chatbots might process a fictional medical term, such as a made-up disease. “AI chatbots typically elaborated on the fake medical detail, confidently generating explanations about conditions or treatments that do not exist,” noted co-author Eyal Klang. This approach worsens trust in AI systems, as incorrect medical information can lead toPeter’s confusion, frustration, and potential harm.

To combat this issue, the researchers proposed a simple yet effective solution: the addition of a one-line safety prompting instruction. This subtle modification was shown to dramatically reduce the frequency of false information being included in AI responses.

Moving Forward and the Future

The researchers are planning to apply their findings to real-world cases, including de-identified patient records and testing more advanced safety prompting tools. They believe that their “fake-term” approach could serve as a reliable tool for debugging, ensuring that AI systems are both safe and questionably responsible.

Their study makes a significant contribution to the field of AI safety and healthcare accessibility. By highlighting the susceptibility of AI chatbots to false information, the research aims to advance the development of more reliable AI systems. The work is part of the larger effort to create “think, act, say,” AI systems that combine human oversight with machine-generated outputs.

Conclusion

The findings of this study are a wake-up call for the healthcare industry and the AI领域的 developers. The above example provides concrete evidence of the challenges AI chatbots face in handling medical information and the importance of adding safeguards to prevent accusations of misuse. As AI continues to transform healthcare, the need for careful design and built-in safety mechanisms becomes even more pressing.

This research was conducted by Mahmud Omar, Vera Sorin, Jeremy D. Collins, David Reich, Robert Freeman, Alexander Charney, Nicholas Gavin, Lisa Stump, Nicola Luigi Bragazzi, Girish N. Nadkarni, and Eyal Klang. The work was financially supported by the Icahn School of Medicine at Mount Sinai.

[This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.]