AI-Generated False News Alert Sparks Calls for Ban of Apple Intelligence Summary Feature

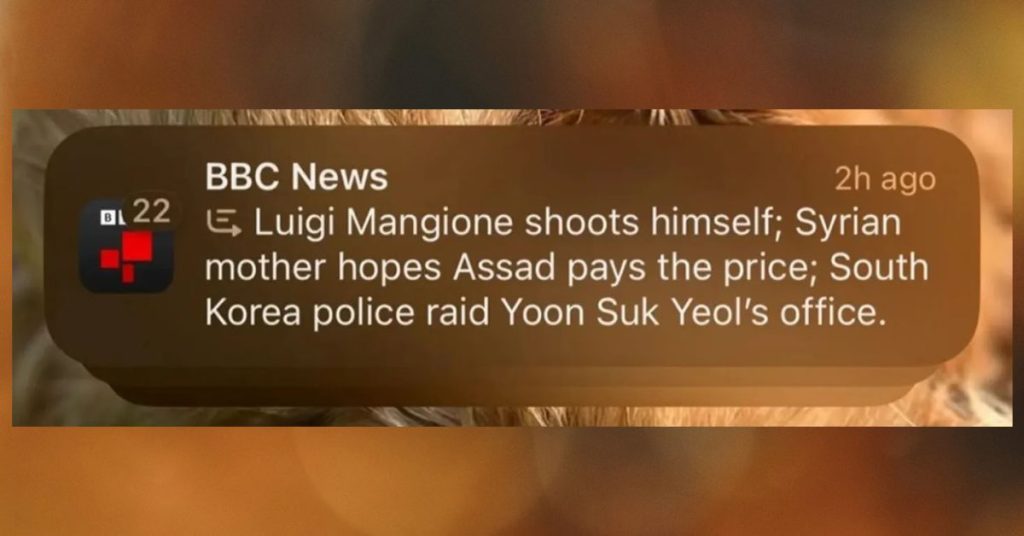

The proliferation of artificial intelligence (AI) tools has brought a slew of innovative applications, but also growing concern about misinformation and the erosion of public trust in media outlets. That concern came sharply into focus with a recent incident in which the Apple Intelligence summary feature generated a news alert falsely claiming that Luigi Mangione, a suspect in the killing of UnitedHealthcare CEO Brian Thompson, had shot himself. The erroneous alert, wrongly attributed to the BBC, a globally recognized and trusted news source, has sparked outrage and prompted Reporters Sans Frontières (RSF), a prominent non-profit organization advocating for press freedom, to call for the feature to be banned.

The incident unfolded when the Apple Intelligence summary feature, designed to provide concise summaries of news articles, incorrectly reported that Mangione had shot himself. The false report quickly spread, raising serious questions about the reliability of AI-generated news summaries and their potential to damage the credibility of legitimate news organizations. The BBC, wrongly named as the source of the report, immediately contacted Apple to raise the issue and demand a correction.

The BBC, renowned for its rigorous journalistic standards and commitment to accuracy, expressed grave concerns about the incident. A spokesperson for the organization emphasized the importance of public trust in its reporting and the damage that such misattributions can cause. The BBC’s complaint underscored the need for tech companies to prioritize accuracy and implement robust safeguards against the spread of misinformation. While awaiting a response from Apple, the BBC reiterated its commitment to ensuring the accuracy and reliability of information published under its name.

RSF, an organization dedicated to defending press freedom and access to information worldwide, responded with a strong statement expressing profound concern about the risks posed by AI tools such as the Apple Intelligence summary feature. The organization argued that the incident showed generative AI services are still too immature to produce reliable information for the public. RSF’s statement called for a broader discussion about the responsible development and deployment of AI technologies, stressing that they should not be released to the public without adequate safeguards against the spread of misinformation.

RSF’s technology lead, Vincent Berthier, reinforced the organization’s concerns, pointing out that AI systems, being probability-based, are inherently unsuited to establishing facts. He urged Apple to act responsibly by removing the Apple Intelligence summary feature until its reliability could be assured. Berthier also stressed that false information attributed to reputable media outlets erodes public trust and undermines the public’s right to accurate and reliable information.

The incident involving the Apple Intelligence summary feature underscores the need for a broader discussion about the ethical implications of AI-generated content. While AI technologies hold immense potential, their deployment must be accompanied by stringent safeguards to prevent the spread of misinformation and protect the integrity of journalism. It also highlights the importance of collaboration between tech companies, media organizations, and civil society groups to ensure that AI technologies are developed and used responsibly, with accuracy, transparency, and accountability as priorities. As the public increasingly relies on AI-powered tools for information, ensuring that these tools are reliable and trustworthy becomes paramount.