Apple’s AI Blunder Sparks False Report of Attempted Suicide in UnitedHealthcare CEO Shooting Case

In a striking technological mishap, Apple Intelligence, Apple's artificial intelligence system, generated a false news notification claiming that Luigi Mangione, the suspect in the shooting of UnitedHealthcare CEO Brian Thompson, had attempted suicide. The erroneous notification was an AI-generated summary of alerts from the BBC News app, and it appeared on iPhones under the BBC's name. It sparked widespread confusion and fueled online conspiracy theories. The BBC immediately contacted Apple to raise the error and have the inaccurate information corrected.

The incident came to light on December 16, 2024, when a screenshot of a grouped news notification bearing the false headline "Luigi Mangione shoots himself" began circulating on social media. Although the BBC had not verified the screenshot at the time, it quickly contacted Apple to express its concerns and demand a fix. A BBC spokesperson stressed the organization's commitment to accuracy and trustworthiness, stating, "BBC News is the most trusted news media in the world. It is essential to us that our audiences can trust any information or journalism published in our name, and that includes notifications."

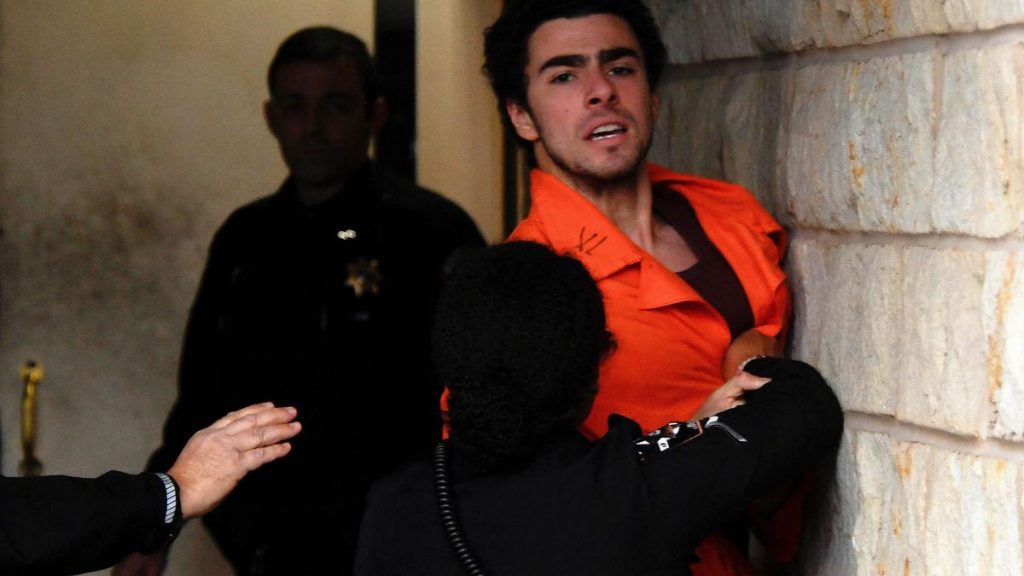

The false report came shortly after the December 4, 2024, shooting of Brian Thompson in New York City. Mangione, 26, was arrested five days later at a McDonald's restaurant in Altoona, Pennsylvania. The killing has ignited a national conversation about the frustrations many Americans have with their health insurers, particularly the financial and bureaucratic obstacles they face in obtaining affordable care for themselves and their families.

The incident shows how AI-generated content can contribute to the spread of misinformation. AI holds real promise for automating tasks and surfacing useful insights, but its susceptibility to errors underscores the need for human oversight and rigorous fact-checking. Apple's misstep echoes earlier AI failures, such as Google's AI Overviews search feature suggesting earlier in the year that users add glue to pizza. Such cases are stark reminders of the consequences of unchecked AI and of how difficult it remains to ensure accuracy in the digital age.

The BBC's quick response to the false notification reflects its commitment to journalistic integrity and public trust. By contacting Apple and addressing the issue publicly, the BBC reaffirmed its dedication to accurate, reliable reporting. For Apple and the wider tech industry, the episode is a lesson in the need for robust safeguards against AI-generated misinformation.

Going forward, technology companies must prioritize rigorous quality control for AI-powered systems. Human oversight and careful fact-checking are vital to preventing the spread of false information and to maintaining public trust in both news sources and new technology. The incident is also a reminder that technology companies and news organizations share responsibility for combating misinformation and ensuring that events are reported accurately. The pursuit of innovation must be balanced with a commitment to ethical practices and to the highest standards of journalistic integrity.