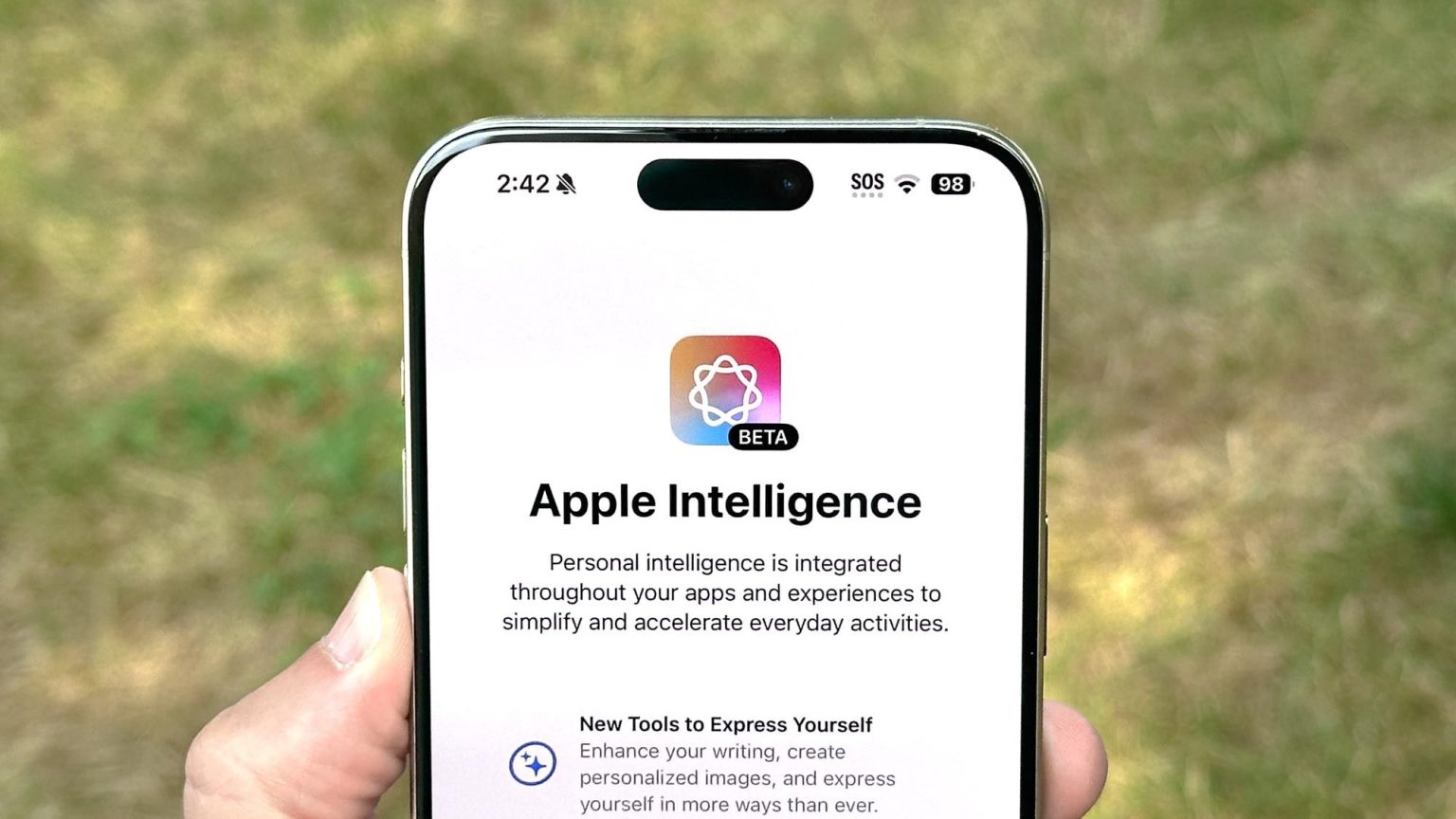

Apple Intelligence’s Hallucinations Spark Concerns Over AI Accuracy and Misinformation

Apple’s foray into AI-generated news summaries has hit a snag, with two recent incidents involving the BBC News app highlighting the potential for embarrassing errors and the spread of misinformation. The first incident falsely declared darts player Luke Littler the winner of the PDC World Championship before the final match had even taken place. The second compressed two errors into a concise yet utterly inaccurate nine-word summary: "Brazilian tennis player, Rafael Nadal, comes out as gay." Nadal is Spanish, not Brazilian, and he had made no such announcement. The linked article actually focused on Brazilian tennis player Joao Lucas Reis da Silva and his unintentional rise as an LGBT figure after posting a birthday message to his boyfriend on Instagram. Nadal was not mentioned in the original piece at all, which makes the AI’s fabrication all the more puzzling.

The BBC has expressed its frustration with these repeated errors, urging Apple to address the issue urgently. As a globally trusted news source, the BBC emphasizes the importance of accuracy in information attributed to it, including notifications generated by third-party services like Apple Intelligence. Apple has yet to comment on the latest BBC report, and its silence on earlier incidents suggests a reluctance to publicly address the shortcomings of its AI technology. Apple CEO Tim Cook’s earlier acknowledgment that Apple Intelligence’s results would fall "short of 100%" rings hollow for news headlines, where accuracy is paramount. This repeated failure to deliver reliable information underscores the need for Apple to take decisive action to prevent further damage to both its reputation and the integrity of news dissemination.

These high-profile gaffes, while embarrassing for Apple, offer a valuable lesson about the limitations of current AI technology. The errors here are easy to detect and publicly verifiable, which is not always the case with AI-generated content. Many AI hallucinations occur within personalized searches, unseen by anyone but the individual user. This is particularly concerning for search engines like Google, which increasingly rely on AI-generated summaries to answer user queries. With billions of searches conducted daily, misinformation could spread largely unnoticed. The internet, once lauded as a democratizing force for education, risks becoming a vector for misinformation if AI-generated inaccuracies are not addressed.

Unlike personalized search results, Apple Intelligence summaries are pushed to a wide audience through notifications, increasing the likelihood that many users will encounter the same error. This broader exposure makes false information easier to debunk, particularly since notifications are designed to encourage clicks: users who tap one are taken to the underlying article and quickly see that the headline was wrong. This immediate feedback can foster healthy skepticism towards AI-generated shortcuts and encourage users to verify information before accepting it as fact.

While the errors observed thus far have been relatively minor, their implications are significant. The potential for more serious consequences exists, especially if Apple Intelligence hallucinates about critical events like natural disasters or acts of violence. The spread of false information in such scenarios could lead to widespread panic and disruption. Each instance of misinformation erodes public trust in Apple Intelligence and undermines the company’s reputation. To mitigate further damage and prevent more serious mishaps, Apple should consider pausing the feature until more robust safeguards are implemented. A proactive approach is crucial to restore confidence in the technology and ensure the responsible deployment of AI in news dissemination.

The current situation underscores the urgent need for increased scrutiny and caution in the development and deployment of AI technologies. While Apple Intelligence’s errors have, so far, been confined to relatively inconsequential topics, they serve as a stark warning about the potential for more serious consequences. The possibility of AI hallucinations concerning critical events highlights the need for rigorous testing and robust safeguards to prevent the spread of misinformation. Apple’s response to these incidents will be crucial in determining the future trajectory of AI-powered news summarization and its impact on public trust in both technology and news sources. A commitment to accuracy, transparency, and user safety is paramount to ensure that AI serves as a tool for empowerment rather than a source of misinformation.