Apple’s AI Under Fire for Fabricating News Headlines, Raising Concerns About Misinformation

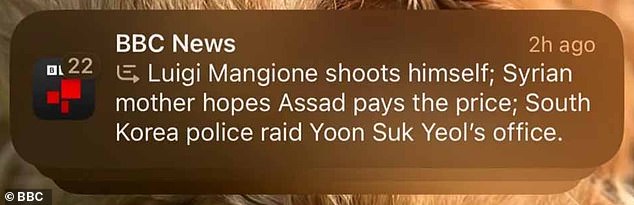

Apple’s foray into artificial intelligence has hit a snag, with its new feature, Apple Intelligence, facing accusations of generating false and misleading news summaries. The latest incident involves a complaint filed by the British Broadcasting Corporation (BBC) after the AI system falsely reported that Luigi Mangione, a 26-year-old Ivy League graduate accused of murdering UnitedHealthcare CEO Brian Thompson, had shot himself. The erroneous summary, presented as a notification to users, combined headlines from three purported BBC articles, creating a narrative that directly contradicted reality. Mangione is currently in custody in Pennsylvania awaiting extradition. This incident follows another case of misinformation where Apple Intelligence misrepresented a New York Times article about an arrest warrant issued for Israeli Prime Minister Benjamin Netanyahu, falsely claiming that he had been arrested. These inaccuracies highlight a growing concern surrounding the potential for AI-generated content to spread misinformation.

The BBC’s complaint underscores the gravity of the situation, emphasizing the importance of accuracy and trust in news reporting. A BBC spokesperson stressed the broadcaster’s commitment to ensuring the reliability of information published under its name, including notifications generated by third-party platforms. The spokesperson highlighted the BBC’s reputation as a trusted news source and the potential damage that false reporting could inflict upon its credibility. While Apple has not publicly addressed the BBC’s complaint, the incident has drawn attention to the broader issue of AI-generated misinformation and the need for robust safeguards against the spread of fabricated news.

The misleading summaries appear as part of Apple Intelligence’s notification feature, which aims to provide users with concise overviews of missed updates from various apps. However, the system’s tendency to combine unrelated headlines and misinterpret article content has resulted in a series of inaccurate and often nonsensical summaries. The incident involving Mangione is particularly alarming, as it falsely reported a significant development in a high-profile criminal case. The false report of Netanyahu’s arrest also demonstrates the potential for these inaccuracies to spread misinformation about politically sensitive topics. These cases raise questions about the adequacy of Apple’s testing and quality control processes for its AI system.

Beyond the BBC and New York Times incidents, numerous iPhone users have shared screenshots of similarly flawed notification summaries, further highlighting the widespread nature of the problem. Examples include summaries that combine unrelated news items, such as linking the consumption of salmon with the return of polar bears to Britain, demonstrating the system’s apparent inability to discern context and relevance. Furthermore, the AI extends its summarization capabilities to text messages, sometimes with equally alarming inaccuracies. One user reported a summary that drastically misrepresented a message from their mother, transforming a comment about a challenging hike into a false report of a suicide attempt.

These errors, while sometimes appearing comical, point to a serious underlying issue: the potential for AI-generated content to inadvertently spread misinformation. Experts warn that while the concept of automated summarization holds promise, the technology is not yet mature enough to reliably avoid such errors. Professor Petros Iosifidis, a media policy expert at City, University of London, cautioned against the premature deployment of such technology, emphasizing the risk of disseminating false information. He expressed surprise at Apple’s decision to release a product with such evident flaws, particularly given the company’s reputation for meticulous product development.

The incidents surrounding Apple Intelligence serve as a cautionary tale about the challenges of integrating AI into news dissemination. While the technology holds the potential to enhance information access and personalization, it also carries the risk of amplifying misinformation if not implemented responsibly. The cases involving the BBC, the New York Times, and numerous individual users underscore the need for rigorous testing, robust error-checking mechanisms, and transparent communication from tech companies developing AI-powered news features. Until these safeguards are in place, users must approach AI-generated summaries with caution, recognizing the potential for inaccuracies and the importance of verifying information from reliable sources. The future of AI in news delivery hinges on addressing these concerns and prioritizing accuracy and trustworthiness above all else.