Unmasking Deception: A Novel Approach to Fake News Detection using Advanced Word Embeddings and Adversarial Training

The proliferation of fake news poses a significant threat to the integrity of information ecosystems worldwide. Discerning fact from fiction in the digital age requires robust and sophisticated detection mechanisms. This research introduces an approach to fake news classification that combines several text representation techniques with adversarial training to improve detection accuracy and resilience against manipulation. The study reports higher accuracy than previously published methods on three of the four datasets it evaluates, offering a promising path toward more reliable fake news detection.

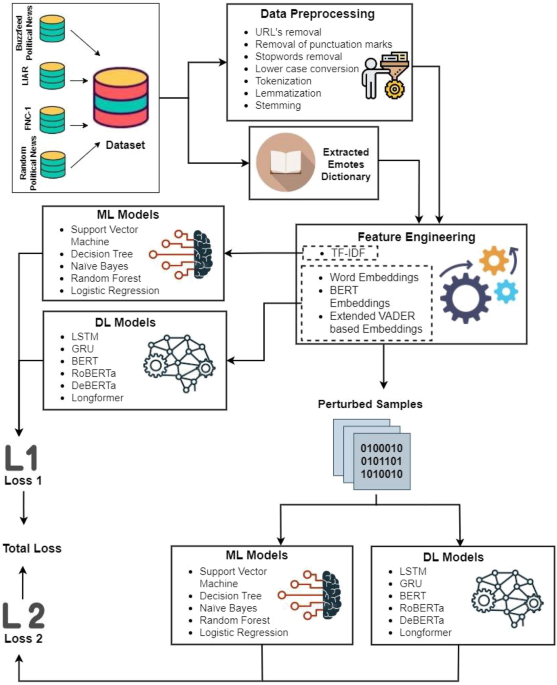

This study utilizes four publicly available datasets: Random Political News, BuzzFeed Political News, LIAR, and FNC-1. These datasets encompass a diverse range of political news articles, online posts, and reports, providing a comprehensive testing ground for the proposed fake news detection methodology. The datasets vary in size, ranging from several hundred to several thousand entries, and include labels indicating the veracity of each piece of content. This diverse collection allows for a robust evaluation of the model’s performance across different types of political content.

The core of this approach lies in combining several text representation techniques: TF-IDF, Word2Vec, BERT embeddings, and Extended VADER. TF-IDF provides a foundation by weighting terms that are frequent in a given article but rare across the corpus, which surfaces phrases often associated with fake news, such as “election fraud” or “misinformation.” Word2Vec captures semantic relationships between words, enabling the identification of misleading narratives. BERT embeddings, known for their contextual awareness, allow the model to understand the nuances of language use within news articles. Extended VADER, a sentiment analysis tool tailored for social media language, further enhances the model’s ability to discern deceptive or inflammatory content. Together, these methods provide a multi-faceted representation of text, capturing both semantic and emotional cues indicative of fake news.
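To make the idea of a combined representation concrete, the following is a minimal sketch, not the study's pipeline. It computes smoothed TF-IDF weights in plain Python and appends a single sentiment feature. The function names, the whitespace tokenizer, and `TOY_LEXICON` are all assumptions made for illustration; the toy lexicon only stands in for a real sentiment tool and is not Extended VADER.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return (vocabulary, TF-IDF vectors) for whitespace-tokenized documents."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    # Document frequency: how many documents contain each term.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed IDF, so terms present in every document keep a non-zero weight.
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1.0 for t in vocab}
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks) or 1
        vectors.append([counts[t] / total * idf[t] for t in vocab])
    return vocab, vectors

# Hypothetical toy lexicon (assumption): a stand-in for a real sentiment tool.
TOY_LEXICON = {"shocking": -0.8, "fraud": -0.9, "confirmed": 0.4, "official": 0.3}

def sentiment_score(doc):
    """Average lexicon score of the tokens that appear in the toy lexicon."""
    scores = [TOY_LEXICON[t] for t in doc.lower().split() if t in TOY_LEXICON]
    return sum(scores) / len(scores) if scores else 0.0

def combined_features(docs):
    """Concatenate each TF-IDF vector with one sentiment feature."""
    vocab, vectors = tfidf_vectors(docs)
    return vocab, [vec + [sentiment_score(doc)] for vec, doc in zip(vectors, docs)]
```

Calling `combined_features(["shocking election fraud", "official election results confirmed"])` yields one vector per document whose last entry is the sentiment score. In a fuller system, dense Word2Vec or BERT vectors would presumably be concatenated in the same way, though the source does not describe how the representations are merged.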

The experimental setup involved training multiple machine learning and deep learning models: traditional classifiers (Support Vector Machines (SVM), Decision Trees, Logistic Regression, Naive Bayes, and Random Forest), recurrent networks (LSTM and GRU), and transformer-based models (BERT, RoBERTa, DeBERTa, and Longformer). Each model was trained on the four datasets using an 80:20 training-testing split. Notably, the training process was conducted in two phases: first with the original data, and then with adversarially perturbed samples. This adversarial training technique, implemented using the Fast Gradient Sign Method (FGSM), perturbs each input by a small step in the direction of the sign of the loss gradient, the direction that most increases the model’s error. Exposing the model to these slightly altered inputs forces it to learn more generalizable features and resist manipulation.
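The two-phase procedure can be illustrated on a deliberately small model. The sketch below applies FGSM to a logistic regression classifier over numeric feature vectors, where the input gradient of the log-loss has the closed form `(p - y) * w`. It is an illustration under stated assumptions, not the study's implementation: the function names, hyperparameters (`eps`, `lr`, `epochs`), and the choice to regenerate adversarial samples each epoch are all hypothetical. For neural text models the perturbation is typically applied to continuous embeddings rather than to raw tokens, and the source does not specify which was done.

```python
import math
import random

def predict_proba(w, b, x):
    """Probability of the positive class under logistic regression."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_perturb(w, b, x, y, eps):
    """Shift x by eps along the sign of the loss gradient with respect to x.

    For logistic regression with log-loss, d(loss)/dx_i = (p - y) * w_i.
    """
    p = predict_proba(w, b, x)
    sign = lambda v: (v > 0) - (v < 0)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def sgd_epoch(w, b, data, lr):
    """One pass of stochastic gradient descent on the log-loss."""
    for x, y in data:
        err = predict_proba(w, b, x) - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
        b -= lr * err
    return w, b

def train_two_phase(data, eps, epochs=100, lr=0.5):
    w, b = [0.0] * len(data[0][0]), 0.0
    # Phase 1: original data only.
    for _ in range(epochs):
        w, b = sgd_epoch(w, b, data, lr)
    # Phase 2: original data plus FGSM samples crafted against the current model.
    for _ in range(epochs):
        adversarial = [(fgsm_perturb(w, b, x, y, eps), y) for x, y in data]
        w, b = sgd_epoch(w, b, data + adversarial, lr)
    return w, b

def split_80_20(data, seed=0):
    """Shuffle with a fixed seed and split 80:20 into train and test."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]
```

The perturbation budget `eps` controls how far each adversarial sample may move from the original; larger values make the second phase harder and can trade clean accuracy for robustness.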

The evaluation employed standard metrics – precision, recall, F1-score, and accuracy – to assess the models’ performance. Baseline results, before adversarial training, already demonstrated promising performance across all datasets, with transformer-based models generally outperforming traditional machine learning methods. For instance, on the Random Political News dataset, BERT achieved 92.43% accuracy, while Random Forest reached 90.7%. On the BuzzFeed dataset, BERT achieved 93.6% accuracy, surpassing Random Forest at 91.1%. Similar trends were observed for the LIAR and FNC-1 datasets, with BERT and other transformer models exhibiting superior performance.
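For reference, the four metrics can be computed from the counts of true and false positives and negatives. The sketch below assumes a binary labeling with one positive class (for instance, fake = 1); the function name and label encoding are illustrative assumptions. Datasets with more than two labels would need per-class scores and an averaging scheme, which the source does not specify.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Precision, recall, F1-score, and accuracy for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    # Guard each ratio against an empty denominator.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
```

Precision answers how many flagged items were truly fake, while recall answers how many fake items were caught; F1 is their harmonic mean, which is why it is often reported alongside accuracy when classes are imbalanced.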

Adversarial training further improved the models’ resilience and performance. Across all datasets, models trained with perturbed samples scored higher than their baseline counterparts. For example, on the Random Political News dataset, BERT’s accuracy increased from 92.43% to 93.43% after adversarial training, a gain of one percentage point. Similar improvements were observed on the BuzzFeed, LIAR, and FNC-1 datasets, highlighting the effectiveness of this technique in bolstering model robustness and generalizability. The most notable improvements were seen with transformer models, which consistently outperformed other methods after adversarial training.

A comparative analysis with existing fake news detection methods further underscores the efficacy of the proposed approach. The study’s BERT model, trained with adversarial samples, achieved the highest accuracy on three out of the four datasets, surpassing previous studies that utilized various techniques, including linguistic feature-based frameworks, clustering algorithms, deep ensemble models, and combinations of convolutional and deep neural networks. This result points to the potential of combining advanced word embeddings with adversarial training for the complex challenge of fake news detection.

This research presents a compelling case for the efficacy of integrating advanced word embeddings and adversarial training in fake news detection. The rigorous evaluation across multiple datasets, including a comparison with existing methods, demonstrates the robustness and superior performance of the proposed approach. By capturing the subtle nuances of language and sentiment, and by enhancing model resilience against adversarial attacks, this study offers a valuable contribution to the ongoing fight against misinformation. This work paves the way for future research to further refine and expand upon these techniques, ultimately leading to more effective and reliable methods for identifying and combating fake news.