Mark Finlayson, professor of computer science, Florida International University, and Azwad Anjum Islam, Ph.D. student in Computing and Information Sciences, Florida International University

Cold, hard facts often dominate public discourse, relying on authority and expertise rather than human emotion and unreliable anecdotes. Disinformation, on the other hand, leverages the predictability of narrative persuasion to sway public perception through music, memes, and social media. Disinformation is designed to manipulate public opinion, yet misinformation, which is simply false, inaccurate, or misleading information, can be just as devastating. The distinction between the two lies in intent: disinformation is deliberately constructed, whereas misinformation is false or misleading without necessarily being meant to deceive. Both can distort the stories people rely on, but disinformation has a more profound and misleading power because it aims to influence public mood.

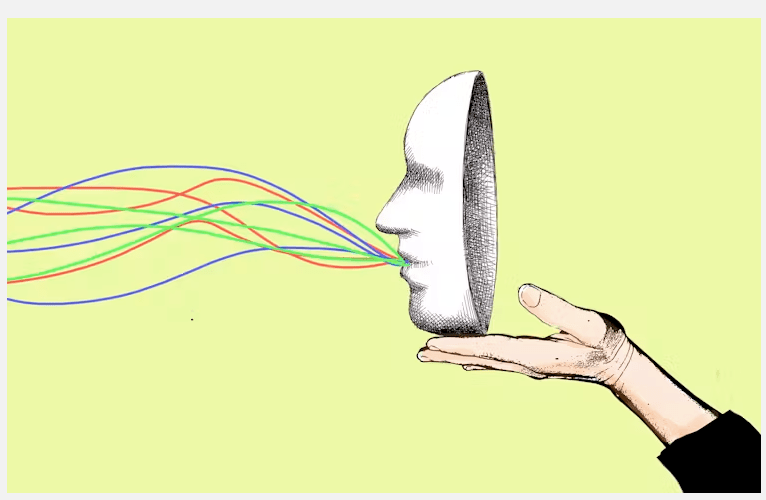

Modern technology, including artificial intelligence (AI), has emerged as a powerful medium for detecting and combating disinformation. AI trained on social media data can analyze narrative structures, trace personas, and decode cultural references to identify disinformation campaigns before they take root. These systems also interpret usernames and personal addresses to infer user backgrounds and help assess authenticity. Social media handles are heavily shaped by the identity a user wants to project, which makes it difficult for authorities to distinguish between trusted and manufactured accounts. The same techniques cut both ways: by amplifying narrative structures, AI can overlay disinformation onto a narrative that appears credible, making it harder to discern the truth. Precise analysis of narrative timelines and events is also non-trivial, especially when accounts are disjointed or fragmented.

Narrative storytelling is deeply ingrained in human culture, shaping beliefs, behaviors, and even mental health. Millions of US adults already rely on narratives to make sense of complex issues, with social media accounts playing a crucial role. Stories about rescuing sea turtles or cloning humans can evoke fear or hope. These narratives are designed to build momentum, raise concerns, and foster trust. A simple story about providing life-changing evidence, for example, can be more impactful than a mass of factual data, as it challenges sources to convince others of their worth.

Humans carry maps of the world in their heads, integrating stories about places, people, and institutions. This spatial sense of narrative helps us feel, connect, and interpret our world. Stories grow out of real human experiences, telling us how to build, innovate, and navigate the complexities of life. Cultural and contextual factors often shape how stories are constructed, perceived, and interpreted. Even a brief social media handle can carry persuasive signals, such as a username that aligns with a specific attribute. For instance, a handle like “@JamesBurnsNYT” suggests a man in New York and adds the weight of an apparent professional or institutional credential. Disinformation campaigns exploit these perceptions by crafting narratives that mimic authentic voices or affiliations to amplify influence.
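To make the handle example concrete, here is a minimal Python sketch of how a username might be broken into the pieces that carry those signals. It is an illustration only, not the method used by any system described in this article: the function names `split_handle` and `handle_signals` are hypothetical, and the rule that a trailing all-caps token looks like an affiliation tag is an assumption made for this example.

```python
from __future__ import annotations

import re


def split_handle(handle: str) -> list[str]:
    """Split a handle such as '@JamesBurnsNYT' into word-like tokens."""
    name = handle.lstrip("@")
    # Alternatives, in order: an acronym run not followed by a lowercase
    # letter, a capitalized word, a lowercase run, or a run of digits.
    return re.findall(r"[A-Z]+(?![a-z])|[A-Z][a-z]+|[a-z]+|\d+", name)


def handle_signals(handle: str) -> dict:
    """Return the name-like tokens and a possible affiliation tag.

    Assumption (hypothetical heuristic): a trailing all-caps token of
    two or more letters is treated as a claimed affiliation.
    """
    tokens = split_handle(handle)
    last = tokens[-1] if len(tokens) > 1 else ""
    tag = last if last.isupper() and len(last) >= 2 else None
    name_tokens = tokens[:-1] if tag else tokens
    return {"name_tokens": name_tokens, "affiliation_tag": tag}


if __name__ == "__main__":
    print(handle_signals("@JamesBurnsNYT"))
    print(handle_signals("@james_burns"))
```

A heuristic like this only surfaces what a handle claims. It says nothing about whether the claim is true, which is exactly the gap that impersonating accounts exploit.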

According to life story analysis, disinformation campaigns are as effective in manipulating beliefs as misinformation. A disinformation narrative about voting for $500 billion infrastructure projects, built on fear about the impact on jobs, the environment, or economic growth, often has a more lasting and intentional effect than a memo of false data. In American culture, narratives are crafted to compare and contrast, building a narrative ladder that affects belief. Disinformation campaigns often profit by embedding images in their narratives in ways that align with desired societal values. Misinformation, meanwhile, is simply false or inaccurate, frustrating the basic human need to verify information.

As these stories grow exponentially on social media, their impact on belief can be far-reaching. The web has become a battlefield where narrative manipulation is used to influence public opinion and spread political rhetoric. How we perceive stories increasingly depends on both their structure and their context, and that perception in turn shapes belief systems. As AI systems develop the capacity to detect narrative structure and temporal consistency, they can provide a more holistic framework for identifying disinformation and averting its dangers. Progress in using AI to authenticate narratives is limited, but it clarifies the need for adoption, particularly once systems can process shared imagery and the timing of streams to locate disinformation patterns. Integrating these narrative tools across institutions will require further research to validate their effectiveness in various contexts, preserving the delicate balance between accurate reporting and stories that can transform belief systems.