Resurgence of Russian Disinformation Campaign Exploits Bluesky’s Nascent Platform

A sophisticated Russian disinformation network, previously identified and exposed on Twitter (now known as X), has resurfaced on the decentralized social media platform, Bluesky, raising concerns about the vulnerability of the platform and its users to manipulative tactics. The network, dubbed "Matryoshka" by the independent watchdog group Bot Blocker, employs AI-generated deepfakes to impersonate academics and experts, spreading fabricated narratives aimed at undermining trust in Western institutions and promoting pro-Kremlin viewpoints. This latest iteration of the campaign, mirroring a similar effort uncovered earlier this year, leverages the relative lack of robust content moderation and fact-checking mechanisms on Bluesky, effectively exploiting the platform’s decentralized structure to disseminate deceptive content.

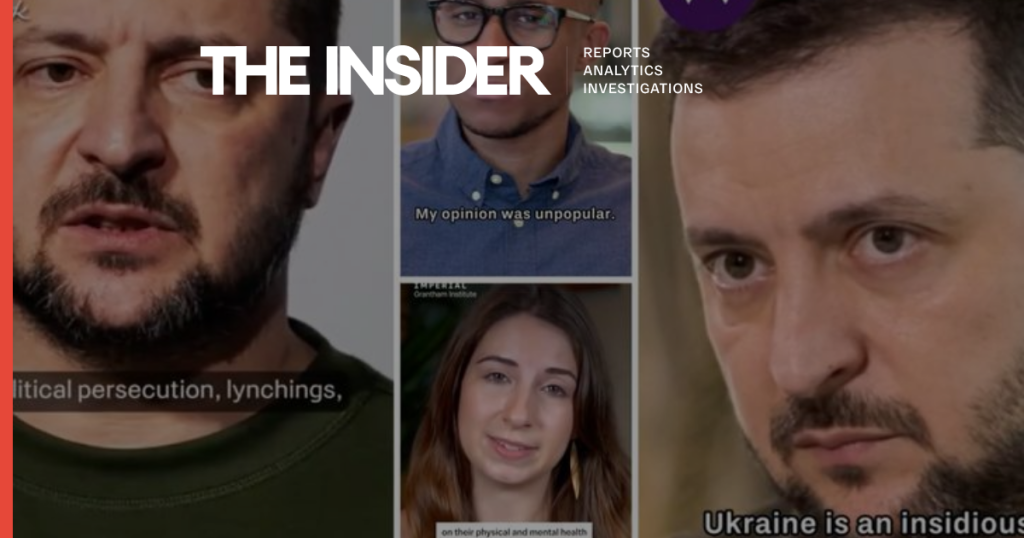

The campaign’s reliance on AI-generated deepfakes adds a layer of complexity to the disinformation landscape. By cloning the voices and likenesses of prominent figures, particularly academics affiliated with prestigious universities, the network aims to lend an air of credibility to its fabricated narratives. Earlier investigations, supported by expert analysis from the University of Bristol, confirmed the use of AI voice cloning technology in videos featuring renowned historians, presenting false statements attributed to these respected individuals. This tactic not only misrepresents the views of the individuals impersonated but also erodes public trust in academic expertise and institutions of higher learning.

The shift from X to Bluesky underscores the dynamic nature of online disinformation campaigns and how they adapt to exploit vulnerabilities in emerging platforms. While X has implemented measures to mitigate the spread of such content, Bluesky, still in its relative infancy, lacks comparable safeguards. This disparity makes Bluesky fertile ground for disinformation operations seeking to reach new audiences and circumvent the more established defenses of larger platforms. According to Bot Blocker, this susceptibility stems from Bluesky’s decentralized nature, which makes comprehensive content moderation and fact-checking procedures harder to implement.

The campaign coincides with a significant surge in Bluesky’s user base, driven by dissatisfaction with changes on X following Elon Musk’s acquisition and by the aftermath of the contentious 2024 US presidential election. Donald Trump’s victory has further fueled this migration, as users seek alternative platforms perceived to be less restrictive of certain viewpoints. This influx of new users, combined with the platform’s nascent moderation capabilities, presents a ripe opportunity for disinformation campaigns to gain traction and potentially sway public opinion. While Bluesky has a far smaller user base than X (25 million versus 335 million), its rapid growth and the specific demographics of migrating users create a targeted environment for influencing online discussions and potentially amplifying politically charged messages.

The implications of this disinformation campaign extend beyond the immediate spread of false narratives. The erosion of trust in reputable sources, the manipulation of public discourse through deepfake technology, and the exploitation of emerging platforms represent a significant challenge to the integrity of online information. The relative ease with which malicious actors can leverage AI-generated content to impersonate credible figures underscores the urgent need for robust detection and mitigation strategies.

The ongoing Matryoshka campaign serves as a stark reminder of the evolving nature of online disinformation and the challenges it poses to platforms, users, and the broader information ecosystem. Because these campaigns adapt to changing platform landscapes and exploit vulnerabilities in emerging social media sites, countermeasures must be developed and updated continuously. As Bluesky grapples with its rapid growth, addressing disinformation and implementing comprehensive content moderation strategies will be crucial to safeguarding the platform’s integrity and protecting its users from manipulative tactics. Failure to do so could see Bluesky become a haven for disinformation campaigns, further exacerbating the already complex challenge of combating online misinformation. Vigilance, critical thinking, and improved platform accountability remain essential in the face of this evolving threat.