The Dawn of the Age of AI-Generated Fakes: Navigating a World of Synthetic Content

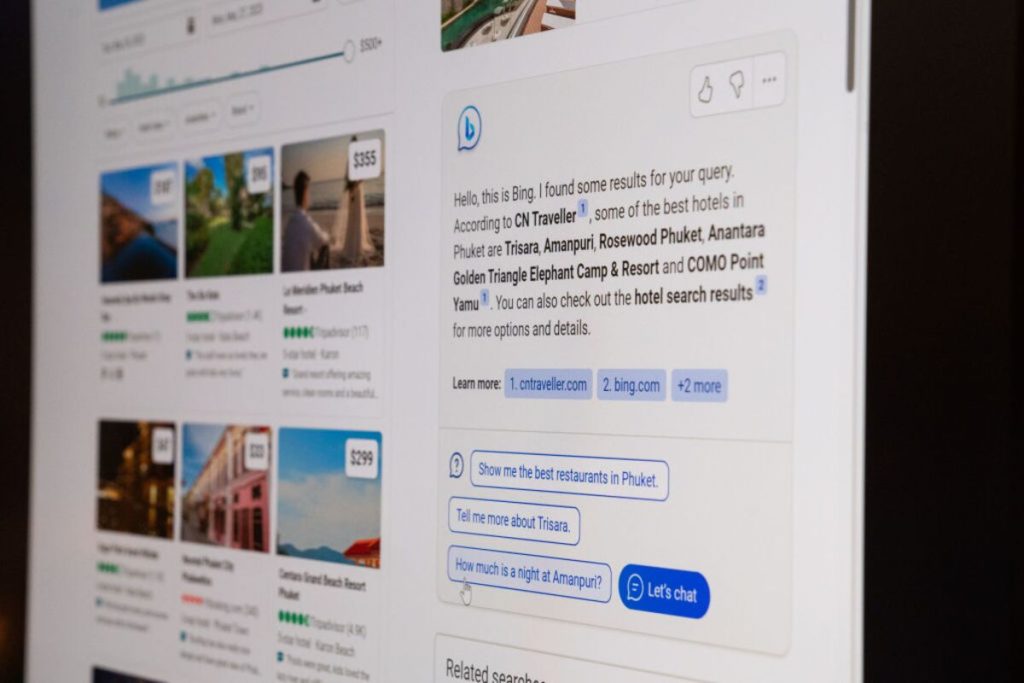

The rapid advancement of artificial intelligence (AI) has brought unprecedented capabilities, but it has also opened a Pandora's box of sophisticated fakery. Large language models (LLMs) such as GPT-4 can now generate human-quality text, making it increasingly difficult to distinguish authentic writing from AI-generated content. The problem extends beyond text: readily available tools can already produce realistic fake photos, and emerging technologies are poised to deliver convincing voice simulations. The implications are profound, affecting everything from academic integrity to the reliability of online information. Evidence suggesting that AI has been used in writing academic papers points to a potential crisis of authenticity and raises the alarm that the floodgates of AI-generated fakery have already opened.

The proliferation of AI-generated fakes is not merely possible; it's inevitable. As these technologies become more accessible and sophisticated, the volume of synthetic content online will surge, and consumers of information will find it increasingly difficult to tell truth from falsehood. The ease with which convincing fakes can be produced undermines trust in online sources and creates fertile ground for misinformation and disinformation. The consequences could be far-reaching, affecting public discourse, political campaigns, and even personal relationships.

The academic world is already grappling with AI-generated text. Signs of possible GPT-4 usage in academic papers expose the vulnerability of the peer-review process and threaten academic integrity. If even professors, the gatekeepers of knowledge, are relying on AI to produce their papers, serious questions arise about the validity of scholarly work and the future of academic publishing. Left unchecked, this trend could erode trust in academic research and devalue genuine scholarly contributions.

The challenge extends beyond academia. Fake news and manipulated media have already shown how effectively false content can sow discord and sway public opinion. As AI makes such content more convincing and easier to produce, malicious actors gain a more powerful tool to exploit. The result could be an erosion of trust in institutions, the spread of conspiracy theories, and even the incitement of violence.

However, the future is not necessarily bleak. The rise of AI-generated fakes is a formidable challenge, but not an insurmountable one. The key lies in adapting to this new reality and developing strategies to mitigate the risks, which requires a multi-pronged approach involving both consumers and producers of online content. Consumers need to sharpen their critical thinking skills and approach online information with more skepticism: verifying claims against multiple sources, staying alert to the potential for manipulation, and understanding the limitations of online platforms.

Producers and distributors of online content, including social media platforms, news organizations, and academic institutions, also have a crucial role to play. They need to invest in technologies and processes for detecting and flagging AI-generated content, such as more sophisticated algorithms for identifying fake images and videos and robust verification procedures for the information they publish. Promoting media literacy and educating the public about the potential for AI-generated fakes is equally essential.

By working together, consumers and producers can build a more resilient online ecosystem that is less susceptible to manipulation and disinformation, and preserve the integrity of online information in the age of AI-generated fakes. The challenge is significant, but with proactive measures and a commitment to truth and transparency, the negative impacts of AI-generated fakes can be mitigated. The future of online information depends on it.