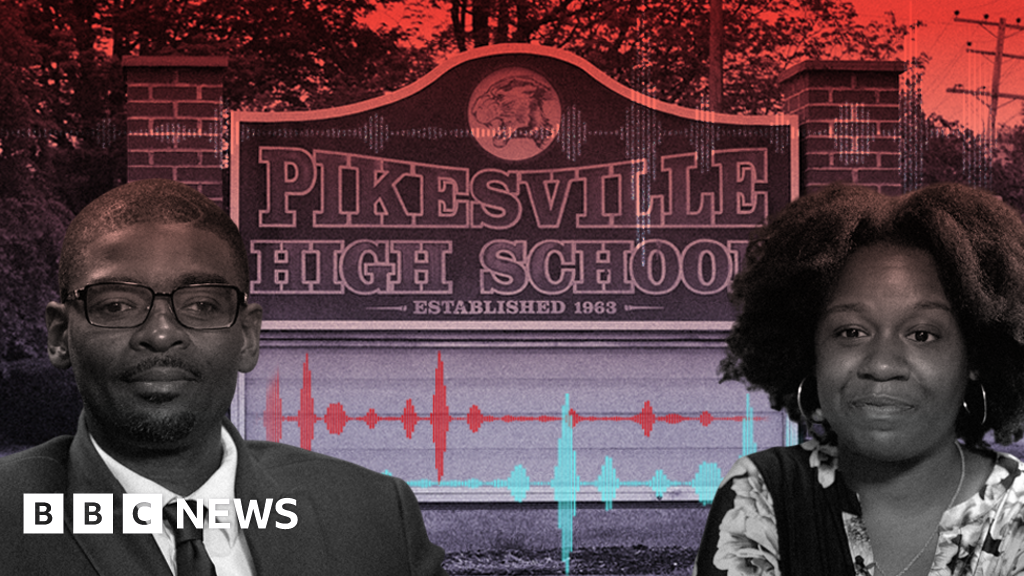

In the evolving landscape of misinformation, a recent incident in Pikesville involving a fake audio clip has raised concerns about the power of artificial intelligence to shape public perception. The clip, which falsely portrayed a local school principal making disparaging remarks about Black students, capitalized on community sentiments and personal experiences of racism, leading many to initially believe it was authentic. Notably, although users added images and the clip was widely shared on video platforms, the content itself was audio-only and so lacked the visual cues that typically reveal AI manipulation. This deliberate presentation encouraged listeners to suspend disbelief, and many were drawn in by the vivid details and jargon in the clip.

The manipulated audio captured the principal’s voice convincingly enough to pass on first listen, despite clear edits between sentences and a relatively monotonous tone. Artificial intelligence technology has reached a level where it can use existing audio clips of well-known personalities to craft narratives that sound credible even when they are entirely fabricated. This method of misinformation is particularly insidious because it can evoke deep-seated emotional responses by connecting with listeners’ personal experiences. Mr. Malone, a local resident, described how the fabricated comments resonated with his own encounters with racial discrimination, illustrating the emotional triggers embedded in the content.

Months after the incident, its repercussions linger. The principal involved, Mr. Eiswert, has since left his position, which underscores the serious consequences for individuals falsely implicated in misinformation campaigns. Although some community members have since acknowledged that the clip was fabricated, the damage was done: the narrative, once accepted as real, had taken root in listeners’ minds. Community sentiment remains charged, with lingering anger and fear sparked by the inflammatory comments attributed to the principal.

The incident shed light on the volatile intersection of technology and social realities, particularly on how misinformation can exploit existing societal tensions. One resident, Sharon, reflected on the outrage that swept through the neighborhood, emphasizing that the allegations, regardless of their veracity, had not only incited fear but also disrupted the community’s sense of cohesion. “When people say things like that,” she remarked, “other people join in,” indicating how harmful narratives can spread and create an environment thick with mistrust and apprehension.

Even after learning of the clip’s AI origins, many residents like Sharon continue to grapple with the emotions the incident stirred. The experience left them questioning the reliability of information and the motives behind such manipulations. Knowing that the clip was generated by artificial intelligence did little to assuage their anger, because the initial impact lingered in the community’s consciousness. This reaction serves as a cautionary tale about the potential for technology to incite fear and division, often amplifying existing biases and social fractures.

As misinformation becomes increasingly sophisticated, the Pikesville incident is a pointed reminder of the need to engage critically with media and the narratives that shape public discourse. It calls for heightened awareness of the tools of AI manipulation and of the emotional weight of fabricated statements, especially in communities already fraught with tension. Ultimately, the challenge lies not only in discerning truth from deception but also in healing the rifts that such misinformation can cause within neighborhoods, so that technology does not exacerbate societal divisions.