The Looming Threat of AI-Generated Disinformation in the 2024 Elections and Beyond

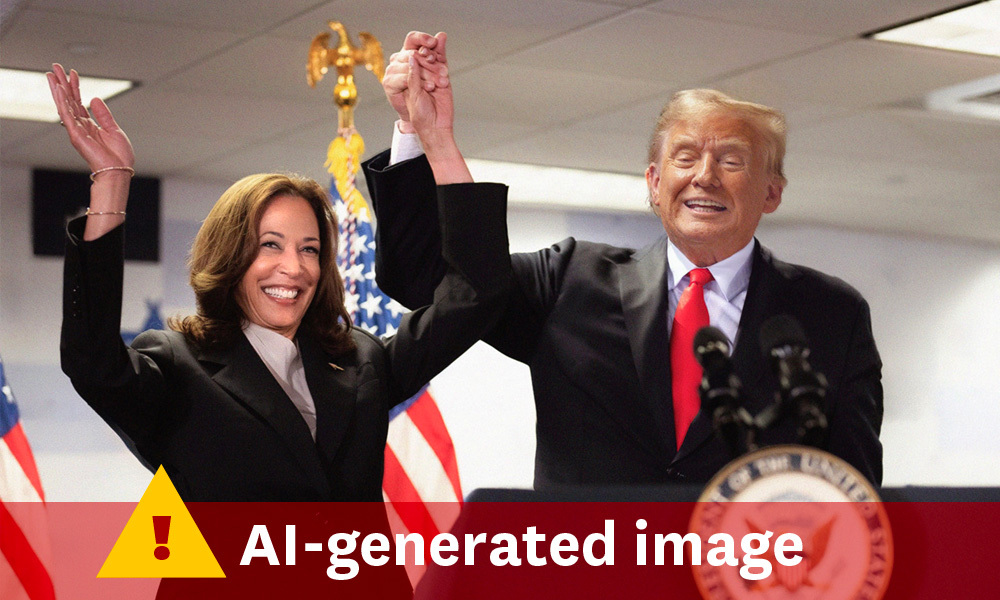

The 2024 election cycle is rapidly approaching, and with it comes a new and potent threat to the integrity of democratic processes: artificial intelligence-generated disinformation. The ability of AI to fabricate realistic images, videos, and audio recordings has reached unprecedented levels of sophistication, raising serious concerns about the potential for manipulation and erosion of public trust. The January 2024 robocall that mimicked President Biden’s voice to discourage New Hampshire voters from taking part in the state’s primary serves as a stark reminder of this emerging danger. This technology, while holding immense potential for positive applications, presents a significant challenge to the foundations of informed democratic participation.

The dangers of AI-generated disinformation are multifaceted. It can be used to spread false narratives, manipulate public opinion, and sow discord among voters. This can decrease trust in democratic institutions, increase polarization, and even create opportunities for foreign interference in elections. Mindy Romero, director of the Center for Inclusive Democracy at the USC Price School of Public Policy, emphasizes the critical role of an informed citizenry in a functioning democracy. The erosion of trust caused by AI-generated disinformation undermines this foundation, potentially leading to instability and voter apathy.

Experts are sounding the alarm and offering strategies to combat this emerging threat. A key first step is cultivating a healthy skepticism towards all political news. Sensationalized content, emotionally charged narratives, and information that seems too perfect or too damning should all raise red flags. Confirmation bias, the tendency to accept information that aligns with pre-existing beliefs, makes individuals particularly vulnerable to disinformation campaigns. It’s crucial to verify information across multiple reputable sources before accepting it as factual. Google searches, fact-checking websites, and established news organizations can all help determine the veracity of information encountered online.

Furthermore, relying on trusted news sources is paramount. Distinguishing between news reporting and opinion pieces is essential for critical consumption of information. Recognizing the source’s reputation, editorial standards, and potential biases helps in evaluating the credibility of the information presented. While personal vigilance is crucial, experts acknowledge that combating disinformation requires a collective effort. Policymakers have a critical role to play in establishing regulations and guidelines to mitigate the impact of AI-generated disinformation on democratic processes.

International examples, such as the European Union’s Digital Services Act, offer valuable insights for U.S. policymakers. This act mandates risk assessments by large online platforms, requiring them to identify and mitigate potential societal harms posed by their products, including risks to elections and democratic processes. Independent audits and data access for researchers further enhance accountability and transparency. These measures offer a framework for regulating AI technology while protecting fundamental democratic values.

Within the United States, several legislative efforts are underway to address the challenges posed by AI-generated disinformation. California, for instance, has seen proposals to mandate the labeling of AI-generated content. This would require digital media created by generative AI to include information about its origin, creation date, and creator. Social media platforms would then be obligated to use this data to flag AI-generated content, providing users with greater awareness and context. Similar efforts at the federal level are focused on regulating AI in political advertisements and providing guidance to local election officials on managing the impact of AI on election administration, cybersecurity, and disinformation. While the federal government has issued general AI risk management guidance, experts highlight the need for specific guidelines tailored to the use of AI in elections.

The development and deployment of AI technology are rapidly outpacing the existing regulatory frameworks. The potential for misuse of this technology in the electoral process poses a significant threat to democratic integrity. The need for robust policy interventions, public awareness campaigns, and ongoing research is undeniable. Combating the spread of AI-generated disinformation requires a concerted effort from individuals, technology companies, and policymakers alike. The future of democratic discourse hinges on the ability to navigate this complex landscape and safeguard against the manipulative potential of this powerful technology. Only through proactive measures and informed engagement can we ensure that AI serves to enhance, rather than undermine, the democratic process. The stakes are high, and the time for action is now.