AI-Generated Disinformation: The Rise of Deepfake News Anchors

Taiwan’s 2024 presidential election saw a new form of disinformation: AI-generated news anchors. In one video, a disconcertingly artificial presenter attacked the outgoing president, Tsai Ing-wen, with derogatory language and partisan rhetoric. The video’s creators remain unknown, but its apparent aim was to sow distrust in politicians who advocate Taiwan’s independence from China. The incident illustrates a disturbing trend in AI-driven disinformation campaigns: realistic-looking avatars deployed to deliver politically charged messages and sway public opinion.

These AI-generated anchors are not perfect replicas of humans, but they are designed to exploit the fast pace of social media. Users scrolling through platforms such as X (formerly Twitter) or TikTok on small screens are unlikely to scrutinize minor visual or audio inconsistencies. As a result, even crudely made deepfakes can leave an impression, particularly on viewers who are not actively trying to verify what they see. Because the underlying AI tools are widely accessible, state-backed actors and other groups can create and distribute such videos with little effort, which fuels their proliferation.

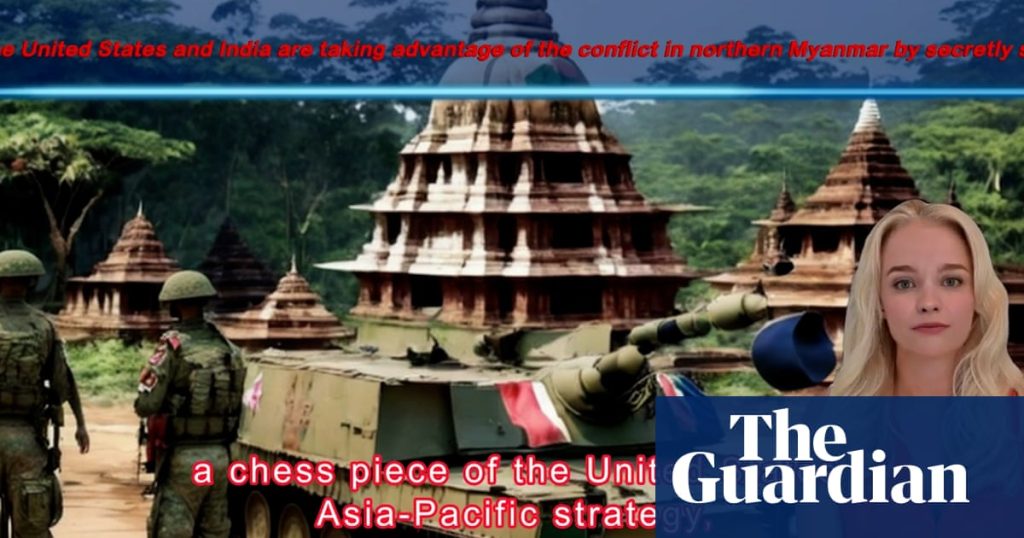

China has been at the forefront of this technology, experimenting with AI news anchors since 2018. Its early attempts had limited success domestically, but China has increasingly used AI-generated presenters in disinformation campaigns aimed at international audiences. Examples include a fictitious news outlet called Wolf News and the spread of unsubstantiated claims about Taiwanese political candidates. These campaigns often run on platforms such as Facebook and X, where bot accounts amplify the reach of the deepfake videos.

The videos are made with readily available tools, so they are relatively easy to produce and disseminate. CapCut, a video editor developed by the Chinese company ByteDance, offers templates for news anchor avatars that users can adapt to produce content at scale, lowering the barrier to entry for malicious actors. The trend also extends beyond overt political propaganda: some groups use AI avatars that mimic influencers to comment on news stories, further blurring the line between authentic and fabricated content.

Many of these deepfake videos remain unconvincing, with robotic voices and unnatural movements, but the growing volume of such content and the accessibility of the tools behind it are cause for concern. Trained professionals may detect the fakes easily, yet casual viewers can still be deceived, especially when social media algorithms amplify the videos. The technology is also evolving quickly, and future iterations will likely be more sophisticated and harder to distinguish from real footage.

AI-generated avatars have already been observed in several countries, from Iran to Ukraine, underscoring the global reach of this disinformation tactic. Their use has even been documented among terrorist groups such as the Islamic State, showing how readily the technology can be turned to other malicious purposes.

Although the current impact of these AI-generated news anchors appears limited, experts warn against complacency. The rapid advancement of AI suggests that these videos will become increasingly realistic and persuasive. A more concerning scenario is the manipulation of genuine footage of real news anchors, a tactic considered more likely, and potentially more damaging, than fully synthetic presenters. The priority now is to develop effective detection methods and raise public awareness to limit the influence of these disinformation campaigns. The fight against AI-generated disinformation is only beginning, and it will demand sustained vigilance.