Overview

The article examines the growing threat of AI-generated misinformation and disinformation during elections around the world, highlighting the ethical dilemmas that entities such as the United States and the EU face. It discusses measures to counter these threats and draws examples from recent elections, including those in Bangladesh, Pakistan, and Indonesia. The focus is on overcoming disinformation and deepfake attacks, which can undermine democratic institutions and political processes. While institutions aim to combat these threats, there is a risk of their misuse to influence election outcomes. The EU, despite its own initiatives and the AI Act, still faces challenges in balancing technical solutions with ethical considerations.

Section 1: The Threat of AI-Generated Misinformation and Disinformation

AI-generated misinformation, such as deep fake videos, …

Section 2: Humanizing the Problem

The article emphasizes the importance of addressing the emotional impact of these tools. modo’s Suzeley program is cited as a way to expose fake news around the world and initiate dialogue. The EU, under the Digital Services Act, incentivizes platforms to identify manipulative content, while its AI Act aims to guard against harmful applications of AI. However, the reliance on technology introduces risks, such as the threshold of investment needed to implement effective measures.

Section 3: EU’s Measures to Combat Disinformation and Deepfake Attacks

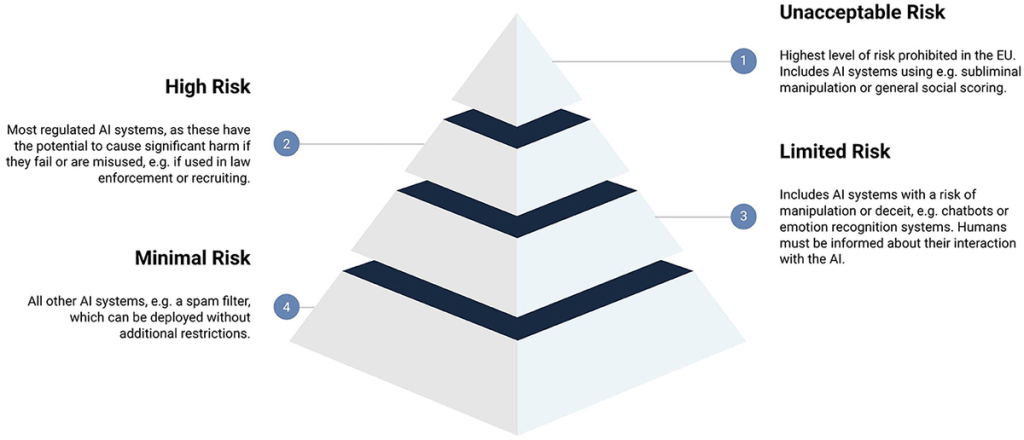

The EU has implemented the Digital Services Act and the AI Act to address disinformation and deepfakes. These regulations impose measures such as transparency requirements and watermarking of AI-generated content, so that users remain informed. Public awareness campaigns promote internet literacy and train organizations to detect and resist disinformation. According to the article, these actions have proven effective in limiting misinformation campaigns, as seen in a case involving parts of Google’s workforce and false accounts before the 2024 elections.

Section 4: Real-World Implications and Challenges

Examples such as Bangladesh, a constitutional democracy where deepfakes were used to manipulate elections, illustrate how widespread these attacks have become. The conduct of political parties, such as those in Pakistan that used deepfakes to spread fake news, highlights a departure from traditional democratic practices. The article notes that while public awareness campaigns are crucial, a sustained and equitable response to deepfakes remains a challenge. The crisis of 2022 highlights the need for ongoing collaboration between technology, politics, and public inquiry to better navigate the complexities of AI and disinformation.

Section 5: Recognizing the Urgency for Solutions

The EU’s proactive measures underscore its commitment to protecting democratic institutions. However, the deepfake threat goes beyond technical challenges, as a lack of technological infrastructure is often its starting point. Addressing it requires a balance between technological safeguards and the ethical use of AI. The article advocates a people-centered approach in which broadcasters and consumers are both valued in the fight against disinformation.

Section 6: Reflection and Future Directions

AI and deepfake technologies offer potential solutions, but their effectiveness depends on collaboration and informed decision-making. The EU’s role in reinforcing transparency and ethical AI behavior is key. Future efforts should focus on both technical solutions and public engagement, empowering citizens to discern reliable information and support the fight against disinformation. As 2024 approaches, the ethical battle over the use of AI to manipulate voting outcomes will be of paramount importance.