Steve Harvey Death Hoax Sparks AI Concerns and Online Fury

The internet was ablaze this week with the shocking, yet ultimately false, news of comedian and television host Steve Harvey’s death. An article titled “Steve Harvey Passed Away Today: Remembering The Legacy Of A Comedy Legend” appeared on Trend Cast News and was subsequently aggregated on Newsbreak, sending fans into a frenzy of grief and confusion. The article, however, carried a future publish date of December 19, 2024, which immediately raised suspicions and pointed to a possible AI mishap. The incident underscores growing concerns about the proliferation of AI-generated content and its potential to spread misinformation at an alarming rate.

The premature publication of the article, coupled with the future date, strongly suggests an error in an automated publishing system, possibly one that uses AI. While the exact mechanism behind the error remains unclear, the incident fuels the ongoing debate about the responsible use of artificial intelligence in content creation and dissemination. Because such technology can generate and spread false information, including sensitive news such as celebrity deaths, it calls for closer scrutiny and for safeguards that prevent similar incidents. The rapid spread of the false report also highlights how vulnerable online platforms are to misinformation and the challenges posed by automated content management systems.

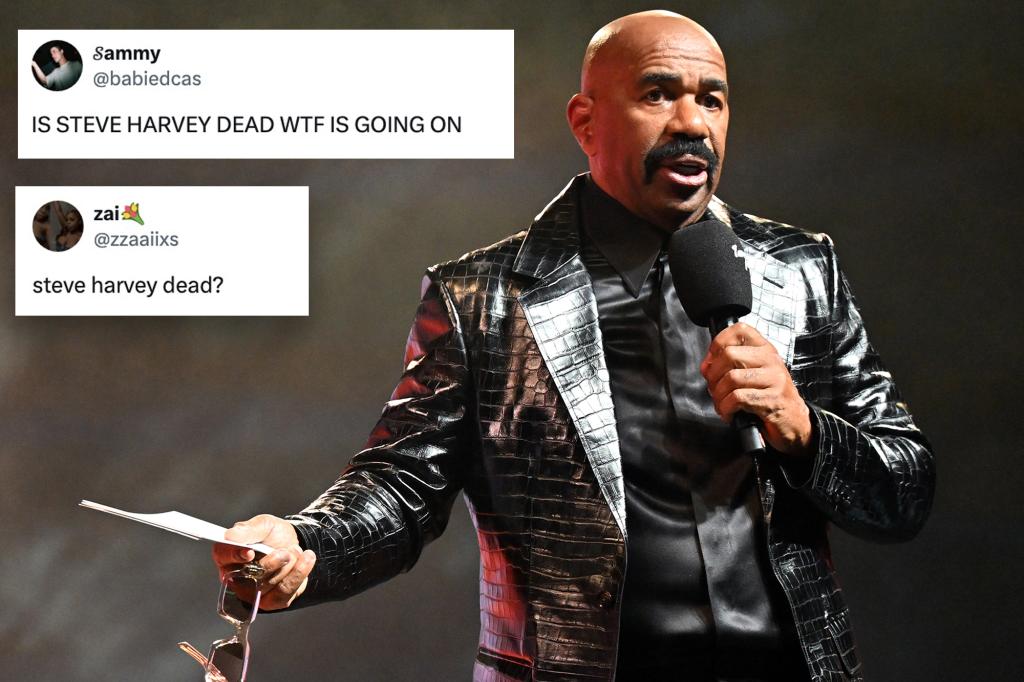

Harvey himself, seemingly unfazed by the online chaos, posted an inspirational message on X (formerly Twitter): “When you feel like giving up.. DON’T When you thinking bout giving up.. DON’T When it look like you ain’t gone make it.. KEEP GOING.” While he didn’t directly address the death hoax, the timing of the post amid the swirling rumors led many to interpret it as a subtle response. On social media, reactions ranged from shock and disbelief to anger and frustration at the spread of false information. Many users questioned the origin of the rumor and criticized the platforms that allowed it to circulate.

The online community’s response was a mix of confusion, concern, and outrage. Some users initially believed the news and expressed their sadness and condolences. Others quickly identified the report as a hoax and warned people not to fall for it. The episode prompted a wider discussion about the responsibility of news aggregators and social media platforms to verify information before publishing or sharing it. Many users blamed Newsbreak for allowing the article to appear on its platform, while others called on social media companies to implement stricter measures against the spread of false information. It also fueled criticism of the reliance on AI in content creation and of the risks of deploying it unchecked.

This is not the first time Harvey has been the target of a death hoax. In October, rumors circulated about his death in a car crash, a recurring false narrative that has plagued the comedian in recent years. Harvey has previously addressed these hoaxes with humor, sharing a meme of himself looking at his phone with the caption, “Me seeing that Rip Harvey is trending.” The recurrence of these hoaxes highlights the persistent challenge of combating misinformation online and the ease with which false narratives can gain traction. This latest incident, however, adds a new dimension to the issue by implicating AI technology in the creation and dissemination of the false news.

The Steve Harvey death hoax serves as a cautionary tale about the dangers of unverified information in the digital age, particularly when amplified by AI technologies. It underscores the need for greater vigilance among online users, who should critically evaluate the news sources and information they encounter on the internet. It also highlights the responsibility of news outlets and social media platforms to implement robust fact-checking mechanisms and to address the potential for AI-driven misinformation campaigns. The incident raises questions about the ethics of using AI in content creation and distribution, and it strengthens calls for greater transparency and accountability in the development and deployment of these technologies. Above all, it is a reminder that in the age of AI, misinformation can spread with unprecedented speed and reach, demanding increased media literacy and responsible online behavior from everyone.